Helix/Hardware: Difference between revisions

S Richling (talk | contribs)

S Richling (talk | contribs) Tag: Manual revert |

||

| (41 intermediate revisions by 2 users not shown) | |||

| Line 8: | Line 8: | ||

* Operating system: RedHat |

* Operating system: RedHat |

||

* Queuing system: Slurm |

* Queuing system: [[Helix/Slurm | Slurm]] |

||

* Access to application software: [[Software_Modules|Environment Modules]] |

* Access to application software: [[Software_Modules|Environment Modules]] |

||

== Compute Nodes == |

== Compute Nodes == |

||

The cluster is equipped with the following CPU and GPU nodes. |

|||

=== AMD Nodes === |

|||

{| class="wikitable" style="width:80%;" |

|||

Common features of all AMD nodes: |

|||

* Processors: 2 x AMD Milan EPYC 7513 |

|||

* Processor Frequency: 2.6 GHz |

|||

* Number of Cores per Node: 64 |

|||

* Local disk: None |

|||

{| class="wikitable" style="width:70%;" |

|||

|- |

|- |

||

! style="width:20%" | |

! style="width:20%" | |

||

! style="width:40%" colspan="2" style="text-align:center" | CPU Nodes |

! style="width:40%" colspan="2" style="text-align:center" | CPU Nodes |

||

! style="width:40%" colspan=" |

! style="width:40%" colspan="4" style="text-align:center" | GPU Nodes |

||

|- |

|- |

||

!scope="column"| Node Type |

!scope="column"; style="background-color:#A2C4EB" | Node Type |

||

!style="text-align:left;"| cpu |

|||

| cpu |

|||

!style="text-align:left;"| fat |

|||

| fat |

|||

!style="text-align:left;"| gpu4 |

|||

!style="text-align:left;"| gpu4 |

|||

| gpu8 |

|||

!style="text-align:left;"| gpu8 |

|||

!style="text-align:left;"| gpu8 |

|||

|- |

|- |

||

!scope="column"| Quantity |

!scope="column"| Quantity |

||

| Line 38: | Line 34: | ||

| 29 |

| 29 |

||

| 26 |

| 26 |

||

| 4 |

|||

| 3 |

| 3 |

||

|- |

|||

!scope="column" | Processors |

|||

| 2 x AMD EPYC 7513 |

|||

| 2 x AMD EPYC 7513 |

|||

| 2 x AMD EPYC 7513 |

|||

| 2 x AMD EPYC 7513 |

|||

| 2 x AMD EPYC 7513 |

|||

| 2 x AMD EPYC 9334 |

|||

|- |

|||

!scope="column" | Processor Frequency (GHz) |

|||

| 2.6 |

|||

| 2.6 |

|||

| 2.6 |

|||

| 2.6 |

|||

| 2.6 |

|||

| 2.7 |

|||

|- |

|||

!scope="column"; style="background-color:#A2C4EB" | Number of Cores per Node |

|||

!style="text-align:left;"| 64 |

|||

!style="text-align:left;"| 64 |

|||

!style="text-align:left;"| 64 |

|||

!style="text-align:left;"| 64 |

|||

!style="text-align:left;"| 64 |

|||

!style="text-align:left;"| 64 |

|||

|- |

|- |

||

!scope="column" | Installed Working Memory (GB) |

!scope="column" | Installed Working Memory (GB) |

||

| Line 46: | Line 67: | ||

| 256 |

| 256 |

||

| 2048 |

| 2048 |

||

| 2304 |

|||

|- |

|- |

||

!scope="column"; style="background-color: |

!scope="column"; style="background-color:#A2C4EB" | Available Memory for Jobs (GB) |

||

!style="text-align:left;"| 236 |

|||

| 236 |

|||

!style="text-align:left;"| 2000 |

|||

| 2010 |

|||

!style="text-align:left;"| 236 |

|||

| 236 |

|||

!style="text-align:left;"| 236 |

|||

| 236 |

|||

!style="text-align:left;"| 2000 |

|||

| 2010 |

|||

!style="text-align:left;"| 2200 |

|||

|- |

|- |

||

!scope="column" | Interconnect |

!scope="column" | Interconnect |

||

| Line 59: | Line 82: | ||

| 2x HDR100 |

| 2x HDR100 |

||

| 2x HDR200 |

| 2x HDR200 |

||

| 4x HDR200 |

|||

| 4x HDR200 |

| 4x HDR200 |

||

|- |

|- |

||

| Line 67: | Line 91: | ||

| 4x [https://www.nvidia.com/en-us/data-center/a100/ Nvidia A100] (40 GB) |

| 4x [https://www.nvidia.com/en-us/data-center/a100/ Nvidia A100] (40 GB) |

||

| 8x [https://www.nvidia.com/en-us/data-center/a100/ Nvidia A100] (80 GB) |

| 8x [https://www.nvidia.com/en-us/data-center/a100/ Nvidia A100] (80 GB) |

||

| 8x [https://www.nvidia.com/en-us/data-center/h200/ Nvidia H200] (141 GB) |

|||

|- |

|- |

||

!scope="column"; style="background-color: |

!scope="column"; style="background-color:#A2C4EB" | Number of GPUs |

||

!style="text-align:left;"| - |

|||

| - |

|||

!style="text-align:left;"| - |

|||

| - |

|||

!style="text-align:left;"| 4 |

|||

| 4 |

|||

!style="text-align:left;"| 4 |

|||

| 4 |

|||

!style="text-align:left;"| 8 |

|||

| 8 |

|||

!style="text-align:left;"| 8 |

|||

|- |

|- |

||

!scope="column"; style="background-color: |

!scope="column"; style="background-color:#A2C4EB" | GPU Type |

||

!style="text-align:left;"| - |

|||

| - |

|||

!style="text-align:left;"| - |

|||

| - |

|||

!style="text-align:left;"| A40 |

|||

| A40 |

|||

!style="text-align:left;"| A100 |

|||

| A100 |

|||

!style="text-align:left;"| A100 |

|||

| A100 |

|||

!style="text-align:left;"| H200 |

|||

|} |

|||

=== Intel Nodes === |

|||

Some Intel nodes (Skylake and Cascade Lake) from the predecessor system will be integrated. Details will follow. |

|||

<!-- Intel nodes tabel (draft) |

|||

Common features of all Intel nodes: |

|||

* Interconnect: 1x EDR |

|||

{| class="wikitable" |

|||

|- |

|- |

||

!scope="column"; style="background-color:#A2C4EB" | GPU Memory per GPU (GB) |

|||

! style="width:12%"| |

|||

! |

!style="text-align:left;"| - |

||

! |

!style="text-align:left;"| - |

||

!style="text-align:left;"| 48 |

|||

!style="text-align:left;"| 40 |

|||

!style="text-align:left;"| 80 |

|||

!style="text-align:left;"| 141 |

|||

|- |

|- |

||

!scope="column"| |

!scope="column"; style="background-color:#A2C4EB" | GPU Feature |

||

!style="text-align:left;"| - |

|||

!style="text-align:left;"| - |

|||

!style="text-align:left;"| - |

|||

!style="text-align:left;"| fp64 |

|||

!style="text-align:left;"| fp64 |

|||

|- |

|||

!style="text-align:left;"| fp64 |

|||

!scope="column"| Architecture |

|||

|} |

|||

| colspan="1" style="text-align:center" | Skylake |

|||

| colspan="1" style="text-align:center" | Cascade Lake |

|||

| colspan="4" style="text-align:center" | Skylake |

|||

| colspan="4" style="text-align:center" | Cascade Lake |

|||

|- |

|||

!scope="column"| Quantity |

|||

| 24 |

|||

| 5 |

|||

| 1 |

|||

| 1 |

|||

| 2 |

|||

| 3 |

|||

| 3 |

|||

| 3 |

|||

| 3 |

|||

| 1 |

|||

|- |

|||

!scope="column" | Processors |

|||

| 2 x Intel Xeon Gold 6130 |

|||

| 2 x Intel Xeon Gold 6230 |

|||

| 2 x Intel Xeon Gold 6130 |

|||

| 2 x Intel Xeon Gold 6130 |

|||

| 2 x Intel Xeon Gold 6130 |

|||

| 2 x Intel Xeon Gold 6130 |

|||

| 2 x Intel Xeon Gold 6230 |

|||

| 2 x Intel Xeon Gold 6230 |

|||

| 2 x Intel Xeon Gold 6240R |

|||

| 2 x Intel Xeon Gold 6240R |

|||

|- |

|||

!scope="column" | Processor Frequency (GHz) |

|||

| 2.1 |

|||

| 2.2 |

|||

| 2.1 |

|||

| 2.1 |

|||

| 2.1 |

|||

| 2.1 |

|||

| 2.1 |

|||

| 2.1 |

|||

| 2.4 |

|||

| 2.4 |

|||

|- |

|||

!scope="column" | Number of Cores |

|||

| 32 |

|||

| 40 |

|||

| 32 |

|||

| 32 |

|||

| 32 |

|||

| 32 |

|||

| 40 |

|||

| 40 |

|||

| 48 |

|||

| 48 |

|||

|- |

|||

!scope="column" | Working Memory (GB) |

|||

| 192 |

|||

| 384 |

|||

| 192 |

|||

| 384 |

|||

| 384 |

|||

| 384 |

|||

| 384 |

|||

| 384 |

|||

| 384 |

|||

| 384 |

|||

|- |

|||

!scope="column" | Local Disk (GB) |

|||

| 512 (SSD) |

|||

| 480 (SSD) |

|||

| 512 (SSD) |

|||

| 512 (SSD) |

|||

| 512 (SSD) |

|||

| 512 (SSD) |

|||

| 480 (SSD) |

|||

| 480 (SSD) |

|||

| 480 (SSD) |

|||

| 480 (SSD) |

|||

|- |

|||

!scope="column" | Coprocessors |

|||

| - |

|||

| - |

|||

| 4 x [https://www.nvidia.com/en-us/titan/titan-xp/ Nvidia Titan Xp (12 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/data-center/tesla-v100/ Nvidia Tesla V100 (16 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/geforce/products/10series/geforce-gtx-1080-ti/ Nvidia GeForce GTX 1080Ti (11 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/geforce/graphics-cards/rtx-2080-ti/ Nvidia GeForce RTX 2080Ti (11 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/data-center/tesla-v100/ Nvidia Tesla V100 (16 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/data-center/tesla-v100/ Nvidia Tesla V100s (32 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/geforce/graphics-cards/30-series/rtx-3090 Nvidia GeForce RTX 3090 (24 GB)] |

|||

| 4 x [https://www.nvidia.com/de-de/design-visualization/quadro/rtx-8000/ Nvidia Quadro RTX 8000 (48 GB)] |

|||

The lines marked in blue are in particular relevant for [https://wiki.bwhpc.de/e/Helix/Slurm Slurm jobs]. |

|||

|- |

|||

! scope="column" | Number of GPUs |

|||

| - |

|||

| - |

|||

| 4 |

|||

| 4 |

|||

| 4 |

|||

| 4 |

|||

| 4 |

|||

| 4 |

|||

| 4 |

|||

| 4 |

|||

|- |

|||

! scope="column" | GPU Type |

|||

| - |

|||

| - |

|||

| TITAN |

|||

| V100 |

|||

| GTX1080 |

|||

| RTX2080 |

|||

| V100 |

|||

| V100S |

|||

| RTX3090 |

|||

| RTX8000 |

|||

|} |

|||

--> |

|||

== Storage Architecture == |

== Storage Architecture == |

||

There is one storage system providing a large parallel file system based on IBM |

There is one storage system providing a large parallel file system based on IBM Storage Scale for $HOME, for workspaces, and for temporary job data. The compute nodes do not have local disks. |

||

== Network == |

== Network == |

||

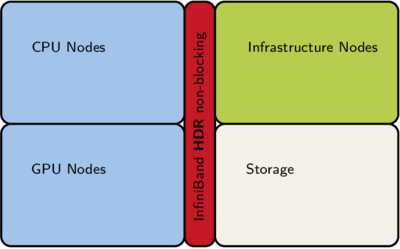

The components of the cluster are connected via two independent networks, a management network (Ethernet and IPMI) and an Infiniband fabric for MPI communication and storage access. |

The components of the cluster are connected via two independent networks, a management network (Ethernet and IPMI) and an Infiniband fabric for MPI communication and storage access. |

||

The Infiniband backbone is a fully non-blocking fabric with 200 Gb/s data speed. The compute nodes are connected with different data speeds according to the node configuration. |

The Infiniband backbone is a fully non-blocking fabric with 200 Gb/s data speed. The compute nodes are connected with different data speeds according to the node configuration. |

||

The term HDR100 stands for 100 Gb/s and HDR200 for 200 Gb/s. |

|||

Latest revision as of 08:51, 24 April 2025

System Architecture

The bwForCluster Helix is a high-performance supercomputer with a high-speed interconnect. The system consists of compute nodes (CPU and GPU nodes), infrastructure nodes for login and administration, and a storage system. All components are connected via a fast InfiniBand network. The login nodes are also connected to the Internet via BelWü, Baden-Württemberg's extended LAN.

Operating System and Software

- Operating system: RedHat

- Queuing system: Slurm

- Access to application software: Environment Modules

Compute Nodes

The cluster is equipped with the following CPU and GPU nodes.

| | CPU Nodes | CPU Nodes | GPU Nodes | GPU Nodes | GPU Nodes | GPU Nodes |
|---|---|---|---|---|---|---|
| Node Type | cpu | fat | gpu4 | gpu4 | gpu8 | gpu8 |
| Quantity | 355 | 15 | 29 | 26 | 4 | 3 |
| Processors | 2 x AMD EPYC 7513 | 2 x AMD EPYC 7513 | 2 x AMD EPYC 7513 | 2 x AMD EPYC 7513 | 2 x AMD EPYC 7513 | 2 x AMD EPYC 9334 |
| Processor Frequency (GHz) | 2.6 | 2.6 | 2.6 | 2.6 | 2.6 | 2.7 |
| Number of Cores per Node | 64 | 64 | 64 | 64 | 64 | 64 |
| Installed Working Memory (GB) | 256 | 2048 | 256 | 256 | 2048 | 2304 |
| Available Memory for Jobs (GB) | 236 | 2000 | 236 | 236 | 2000 | 2200 |
| Interconnect | 1x HDR100 | 1x HDR100 | 2x HDR100 | 2x HDR200 | 4x HDR200 | 4x HDR200 |
| Coprocessors | - | - | 4x Nvidia A40 (48 GB) | 4x Nvidia A100 (40 GB) | 8x Nvidia A100 (80 GB) | 8x Nvidia H200 (141 GB) |
| Number of GPUs | - | - | 4 | 4 | 8 | 8 |
| GPU Type | - | - | A40 | A100 | A100 | H200 |
| GPU Memory per GPU (GB) | - | - | 48 | 40 | 80 | 141 |
| GPU Feature | - | - | - | fp64 | fp64 | fp64 |

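The rows highlighted in blue in the original table (Node Type, Number of Cores per Node, Available Memory for Jobs, Number of GPUs, GPU Type, GPU Memory per GPU, and GPU Feature) are particularly relevant for Slurm jobs.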
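To illustrate how the job-relevant rows can be used when planning a job, the following is a minimal Python sketch that encodes them as data and lists the node types whose hardware would fit a given request. The figures are transcribed from the table above. The labels such as `gpu4-A40`, the class `NodeType`, and the helper functions are hypothetical names introduced here for illustration only; they are not Slurm partition or feature names. The check covers hardware fit only and says nothing about scheduler policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class NodeType:
    """One column of the compute node table (job-relevant rows only)."""
    label: str                    # hypothetical label, not a Slurm name
    quantity: int                 # number of nodes of this type
    cores: int                    # cores per node
    job_memory_gb: int            # memory available for jobs per node
    gpus: int                     # GPUs per node (0 for CPU nodes)
    gpu_type: Optional[str]       # GPU type, None for CPU nodes
    gpu_memory_gb: Optional[int]  # memory per GPU, None for CPU nodes
    fp64: bool                    # True if the GPU feature "fp64" is listed


# Values copied from the table; only the labels are invented.
NODE_TYPES = [
    NodeType("cpu", 355, 64, 236, 0, None, None, False),
    NodeType("fat", 15, 64, 2000, 0, None, None, False),
    NodeType("gpu4-A40", 29, 64, 236, 4, "A40", 48, False),
    NodeType("gpu4-A100", 26, 64, 236, 4, "A100", 40, True),
    NodeType("gpu8-A100", 4, 64, 2000, 8, "A100", 80, True),
    NodeType("gpu8-H200", 3, 64, 2200, 8, "H200", 141, True),
]


def matching_node_types(cores: int = 1, memory_gb: int = 0, gpus: int = 0,
                        gpu_memory_gb: int = 0,
                        need_fp64: bool = False) -> List[NodeType]:
    """Return node types that can host a single-node request of this size."""
    result = []
    for node in NODE_TYPES:
        if node.cores < cores or node.job_memory_gb < memory_gb:
            continue
        if node.gpus < gpus:
            continue
        if gpus > 0:
            # GPU-specific constraints only apply when GPUs are requested.
            if (node.gpu_memory_gb or 0) < gpu_memory_gb:
                continue
            if need_fp64 and not node.fp64:
                continue
        result.append(node)
    return result


def memory_per_core_gb(node: NodeType) -> float:
    """Job memory per core if all cores of a node are used evenly."""
    return node.job_memory_gb / node.cores


if __name__ == "__main__":
    for node in matching_node_types(gpus=4, gpu_memory_gb=80, need_fp64=True):
        print(node.label, node.quantity)
    print(memory_per_core_gb(NODE_TYPES[0]))
```

For example, a request for 64 cores and 500 GB of memory fits only the node types with 2000 GB or more available for jobs, and a standard `cpu` node offers 236 / 64 ≈ 3.7 GB of job memory per core.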

Storage Architecture

There is one storage system providing a large parallel file system based on IBM Storage Scale for $HOME, for workspaces, and for temporary job data. The compute nodes do not have local disks.

Network

The components of the cluster are connected via two independent networks: a management network (Ethernet and IPMI) and an InfiniBand fabric for MPI communication and storage access. The InfiniBand backbone is a fully non-blocking fabric with a data rate of 200 Gb/s. The compute nodes are connected at different data rates depending on the node configuration. The term HDR100 stands for 100 Gb/s and HDR200 for 200 Gb/s.
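The interconnect entries in the compute node table combine a link count with a link type, for example "2x HDR100". As a minimal sketch, the nominal aggregate link rate per node can be derived from such an entry as follows. The function name `aggregate_bandwidth_gbps` is hypothetical, and the calculation assumes that the per-link rates simply add up; achievable throughput in practice depends on the application, the MPI library, and the fabric load.

```python
import re

# Per-link data rates as stated in the text: HDR100 = 100 Gb/s, HDR200 = 200 Gb/s.
HDR_LINK_GBPS = {"HDR100": 100, "HDR200": 200}


def aggregate_bandwidth_gbps(interconnect: str) -> int:
    """Nominal sum of link rates for an entry such as '4x HDR200'."""
    match = re.fullmatch(r"(\d+)x\s*(HDR\d+)", interconnect.strip())
    if match is None:
        raise ValueError(f"unrecognized interconnect entry: {interconnect!r}")
    count, link = int(match.group(1)), match.group(2)
    if link not in HDR_LINK_GBPS:
        raise ValueError(f"unknown link type: {link!r}")
    return count * HDR_LINK_GBPS[link]


if __name__ == "__main__":
    # Entries as they appear in the Interconnect row of the table.
    for entry in ["1x HDR100", "2x HDR100", "2x HDR200", "4x HDR200"]:
        print(entry, aggregate_bandwidth_gbps(entry), "Gb/s")
```

Under this assumption, the nominal rates range from 100 Gb/s for the cpu and fat nodes to 800 Gb/s for the gpu8 nodes.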