Helix/Hardware

System Architecture

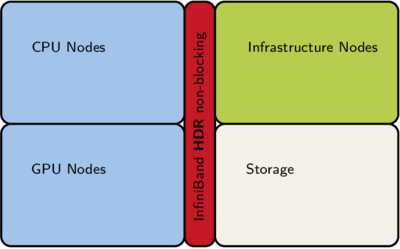

The bwForCluster Helix is a high-performance supercomputer with a high-speed interconnect. The system consists of compute nodes (CPU and GPU nodes), infrastructure nodes for login and administration, and a storage system. All components are connected via a fast InfiniBand network. The login nodes are also connected to the Internet via BelWü, Baden-Württemberg's extended LAN.

Operating System and Software

- Operating system: Red Hat Enterprise Linux (RHEL)

- Queuing system: Slurm

- Access to application software: Environment Modules

Compute Nodes

AMD Nodes

Common features of all AMD nodes:

- Processors: 2 x AMD EPYC 7513 (Milan)

- Processor Frequency: 2.6 GHz

- Number of Cores per Node: 64

- Local disk: None

| | CPU Nodes | CPU Nodes | GPU Nodes | GPU Nodes | GPU Nodes |
|---|---|---|---|---|---|
| Node Type | cpu | fat | gpu4 | gpu4 | gpu8 |
| Quantity | 355 | 15 | 29 | 26 | 4 |
| Installed Working Memory (GB) | 256 | 2048 | 256 | 256 | 2048 |
| Available Memory for Jobs (GB) | 236 | 2010 | 236 | 236 | 2010 |
| Interconnect | 1x HDR100 | 1x HDR100 | 2x HDR100 | 2x HDR200 | 4x HDR200 |
| Coprocessors | - | - | 4x Nvidia A40 (48 GB) | 4x Nvidia A100 (40 GB) | 8x Nvidia A100 (80 GB) |
| Number of GPUs | - | - | 4 | 4 | 8 |
| GPU Type | - | - | A40 | A100 | A100 |

The rows marked in blue are particularly relevant for Slurm jobs.
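To illustrate how the table values translate into job sizing, the following is a minimal Python sketch that checks which node types could host a single-node job. The figures are copied from the "Available Memory for Jobs", "Number of GPUs", and "GPU Type" rows; the names `NodeType`, `NODE_TYPES`, `candidates`, and `mem_per_core_gb` are hypothetical helpers for illustration and are not part of Slurm or of the cluster software. Keeping CPU-only jobs off GPU nodes is an assumption made here, not a documented scheduling policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class NodeType:
    name: str                 # "Node Type" row
    gpu_type: Optional[str]   # "GPU Type" row, None for CPU-only nodes
    gpus: int                 # "Number of GPUs" row
    mem_gb: int               # "Available Memory for Jobs (GB)" row
    cores: int = 64           # all AMD nodes have 64 cores


# Values copied from the node table above.
NODE_TYPES = [
    NodeType("cpu", None, 0, 236),
    NodeType("fat", None, 0, 2010),
    NodeType("gpu4", "A40", 4, 236),
    NodeType("gpu4", "A100", 4, 236),
    NodeType("gpu8", "A100", 8, 2010),
]


def candidates(mem_gb: int, gpus: int = 0,
               gpu_type: Optional[str] = None) -> List[NodeType]:
    """Return the node types that can satisfy a single-node request."""
    result = []
    for node in NODE_TYPES:
        if mem_gb > node.mem_gb or gpus > node.gpus:
            continue  # request exceeds what one node offers
        if gpus == 0 and node.gpus > 0:
            continue  # assumption: CPU-only jobs stay off GPU nodes
        if gpu_type is not None and node.gpu_type != gpu_type:
            continue  # wrong GPU model
        result.append(node)
    return result


def mem_per_core_gb(node: NodeType) -> float:
    """Memory available per core if a job uses all cores of the node."""
    return node.mem_gb / node.cores
```

For example, `candidates(500)` leaves only the `fat` node type, because a 500 GB request exceeds the 236 GB available on a standard `cpu` node, and `mem_per_core_gb(NODE_TYPES[0])` gives roughly 3.7 GB per core on a `cpu` node.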

Storage Architecture

There is one storage system providing a large parallel file system based on IBM Spectrum Scale for $HOME, for workspaces, and for temporary job data.

Network

The components of the cluster are connected via two independent networks: a management network (Ethernet and IPMI) and an InfiniBand fabric for MPI communication and storage access. The InfiniBand backbone is a fully non-blocking fabric with a data rate of 200 Gb/s. The compute nodes are connected at different data rates depending on the node configuration.
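As a rough illustration of how the per-node data rates differ, the sketch below computes the nominal aggregate link bandwidth from the "Interconnect" row of the node table. It assumes that HDR100 denotes a 100 Gb/s link and HDR200 a 200 Gb/s link (the latter matching the backbone rate stated above). The names `LINK_GBIT` and `aggregate_gbit` are hypothetical, and achievable throughput in practice will be lower than these nominal figures.

```python
# Nominal per-link data rates in Gb/s (assumption: HDR100 = 100, HDR200 = 200).
LINK_GBIT = {"HDR100": 100, "HDR200": 200}


def aggregate_gbit(spec: str) -> int:
    """Nominal aggregate bandwidth in Gb/s for a spec such as '2x HDR100'."""
    count, link = spec.split("x ", 1)
    return int(count) * LINK_GBIT[link.strip()]


# Interconnect entries as listed in the node table, keyed by (node type, GPU type).
INTERCONNECT = {
    ("cpu", None): "1x HDR100",
    ("fat", None): "1x HDR100",
    ("gpu4", "A40"): "2x HDR100",
    ("gpu4", "A100"): "2x HDR200",
    ("gpu8", "A100"): "4x HDR200",
}

for (node, gpu), spec in INTERCONNECT.items():
    gbit = aggregate_gbit(spec)
    label = node if gpu is None else f"{node} ({gpu})"
    # Divide by 8 to convert gigabits to gigabytes.
    print(f"{label}: {gbit} Gb/s nominal, about {gbit / 8:.1f} GB/s")
```

Under these assumptions a `gpu8` node has eight times the nominal network bandwidth of a `cpu` node, which matters mainly for multi-node GPU jobs and for heavy I/O against the parallel file system.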