HPC Glossary/Batch system


When we speak of a batch system on compute clusters, we mean the system that knows which compute nodes are used by whom and when they will become available. It also knows about all waiting jobs and, whenever a node becomes available, determines which job will start next on which node.

Why do we need a Resource Management System?

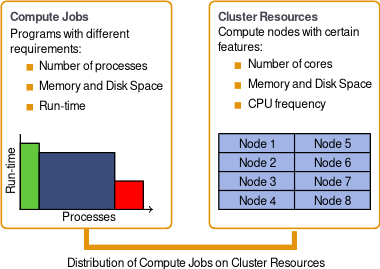

An HPC cluster is a multi-user system. Users run compute jobs with different demands on the number of processor cores, memory, disk space and run time. Some users run a program only occasionally for a big task; others must run many simulations to finish their projects.

The cluster provides only a limited number of compute resources with certain features. Giving all users free access to all compute nodes without time limits would not work. Therefore we need a resource management system (batch system) that schedules compute jobs and distributes them to suitable compute resources. The use of a resource management system pursues several objectives:

- Fair distribution of resources among users

- Compute jobs should start as soon as possible

- Full load and efficient usage of all resources

How does a Resource Management System work?

A resource management system or batch system manages the compute nodes, jobs and queues and basically consists of two components:

- A resource manager which is responsible for the node status and for the distribution of jobs over the compute nodes.

- A workload manager (scheduler), which is in charge of job scheduling, job management, job monitoring and job reporting.

A resource management system works as follows:

- The user creates a job script containing requests for compute resources and submits the script to the resource management system.

- The scheduler parses the job script for resource requests and determines where to run the job and how to schedule it.

- The scheduler delegates the job to the resource manager.

- The resource manager executes the job and communicates the status information to the scheduler.
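As a rough illustration of the first two steps, the following Python sketch plays the role of the scheduler reading a job script. The `#SBATCH` directives use real Slurm `sbatch` option names (`--nodes`, `--ntasks-per-node`, `--time`, `--mem`), but the parser itself is a simplified, hypothetical stand-in: it only understands the `--option=value` form and the `HH:MM:SS` time format, and it assumes one core per task. Like `sbatch`, it stops reading directives at the first command in the script.

```python
import re

# Hypothetical job script. The #SBATCH option names are real sbatch options
# (--mem is memory per node, default unit MB); ./my_simulation is a placeholder.
# The last directive comes after a command and is therefore ignored.
JOB_SCRIPT = """#!/bin/bash
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=4
#SBATCH --time=02:00:00
#SBATCH --mem=8000

srun ./my_simulation
#SBATCH --nodes=99
"""

# Simplified: only the "--option=value" form is recognized.
DIRECTIVE = re.compile(r"#SBATCH\s+--([\w-]+)=(\S+)")


def parse_resource_requests(script: str) -> dict[str, str]:
    """Collect the resource requests from the #SBATCH lines of a job script."""
    requests: dict[str, str] = {}
    for line in script.splitlines():
        line = line.strip()
        if not line:
            continue  # blank lines between directives are fine
        if not line.startswith("#"):
            break  # first command reached: later #SBATCH lines are ignored
        match = DIRECTIVE.match(line)
        if match:
            option, value = match.groups()
            requests[option] = value
    return requests


def requested_cores(requests: dict[str, str]) -> int:
    """Total cores = nodes x tasks per node (assuming one core per task)."""
    nodes = int(requests.get("nodes", "1"))
    tasks_per_node = int(requests.get("ntasks-per-node", "1"))
    return nodes * tasks_per_node


def time_limit_minutes(value: str) -> int:
    """Convert an "HH:MM:SS" time limit to minutes, rounding partial minutes up."""
    hours, minutes, seconds = (int(part) for part in value.split(":"))
    return hours * 60 + minutes + (seconds + 59) // 60


requests = parse_resource_requests(JOB_SCRIPT)
print(requests)
print(requested_cores(requests), time_limit_minutes(requests["time"]))
```

Slurm accepts many more option spellings and time formats; the point is only that resource requests are metadata at the top of the script, which the scheduler evaluates before the job ever runs.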

How does a Job Scheduler work?

The job scheduling process is influenced by many, sometimes conflicting parameters that serve as metrics for the scheduling algorithm. The objectives of a resource management system are pursued as follows:

- Fair distribution of resources among users: Ensure that all users get a fair share of processing time for their jobs.

- Compute jobs should start as soon as possible: Minimize the time jobs have to wait until they start.

- Full load and efficient usage of all resources: Aim for the highest possible utilization with the available jobs, because cluster resources are very expensive.
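The three objectives can be turned into measurable quantities. The following sketch uses a hypothetical `FinishedJob` record with times in hours and computes one simple metric per objective: each user's share of the consumed core-hours (fair distribution), the mean waiting time (early start) and the fraction of available core-hours actually used (full load). Real schedulers track these in more refined ways; the sketch only makes the metrics concrete.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FinishedJob:
    user: str
    submit: float  # all times in hours since the start of the observation window
    start: float
    end: float
    cores: int

    @property
    def core_hours(self) -> float:
        return (self.end - self.start) * self.cores


def usage_share_by_user(jobs: list[FinishedJob]) -> dict[str, float]:
    """Fair distribution: each user's fraction of all consumed core-hours."""
    per_user: dict[str, float] = defaultdict(float)
    for job in jobs:
        per_user[job.user] += job.core_hours
    total = sum(per_user.values())
    return {user: hours / total for user, hours in per_user.items()}


def mean_wait_hours(jobs: list[FinishedJob]) -> float:
    """Start as soon as possible: average time from submission to start."""
    return sum(job.start - job.submit for job in jobs) / len(jobs)


def utilization(jobs: list[FinishedJob], total_cores: int, window_hours: float) -> float:
    """Full load: fraction of the available core-hours that jobs actually used."""
    return sum(job.core_hours for job in jobs) / (total_cores * window_hours)


# Two 8-core jobs on a 16-core cluster, observed over 8 hours.
jobs = [FinishedJob("alice", 0, 0, 4, 8), FinishedJob("bob", 0, 2, 6, 8)]
print(usage_share_by_user(jobs), mean_wait_hours(jobs), utilization(jobs, 16, 8))
```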

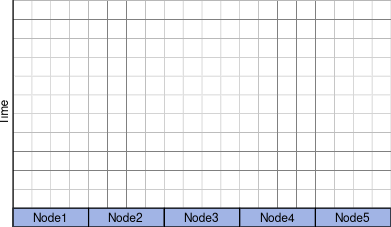

The following simple example illustrates how a scheduler works. Let us consider a system with 4 nodes. Each node has 4 processor cores.

Here the cluster is empty. No jobs are scheduled:

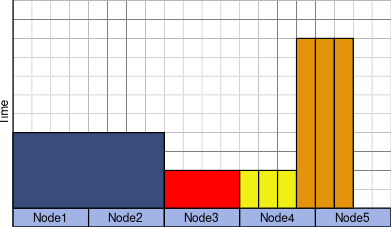

Now jobs with different resource requests are scheduled:

- one job which needs two nodes (blue)

- one job which needs one node (red)

- multiple jobs that need only one core each (yellow, orange)

- some jobs are short running, others are long running (see time axis)

So far, enough resources are available for all jobs to start immediately.

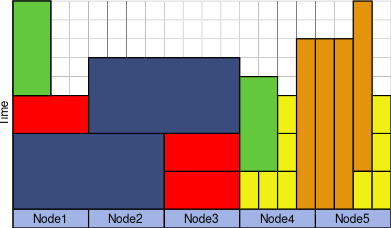
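The placement in this example can be reproduced with a deliberately naive first-fit model of the cluster with 4 nodes of 4 cores each. The node and core counts come from the example; the function names are hypothetical, and Slurm's actual node selection is considerably more involved. Whole-node jobs (blue, red) take completely idle nodes, while single-core jobs (yellow, orange) go to the first node with a free core.

```python
from typing import Optional

NUM_NODES = 4
CORES_PER_NODE = 4


def place_whole_nodes(free_cores: list[int], count: int) -> Optional[list[int]]:
    """Allocate `count` completely idle nodes; return their indices or None."""
    idle = [node for node, free in enumerate(free_cores) if free == CORES_PER_NODE]
    if len(idle) < count:
        return None  # not enough idle nodes: the job has to wait
    chosen = idle[:count]
    for node in chosen:
        free_cores[node] = 0
    return chosen


def place_cores(free_cores: list[int], cores: int) -> Optional[int]:
    """Put a small job on the first node with enough free cores (first fit)."""
    for node, free in enumerate(free_cores):
        if free >= cores:
            free_cores[node] -= cores
            return node
    return None  # no node has enough free cores: the job has to wait


free_cores = [CORES_PER_NODE] * NUM_NODES  # the empty cluster
print(place_whole_nodes(free_cores, 2))  # blue job: prints [0, 1]
print(place_whole_nodes(free_cores, 1))  # red job: prints [2]
print([place_cores(free_cores, 1) for _ in range(4)])  # small jobs: prints [3, 3, 3, 3]
print(place_cores(free_cores, 1))  # a fifth small job: prints None
```

Once all 16 cores are taken, another request returns `None`, meaning that job has to wait for resources to become free.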

More jobs are submitted. Now not all jobs can start immediately on the available hardware, so some jobs are scheduled to run at a later time.

- Big jobs (in terms of hardware resources) often have to wait longer, because they cannot be scheduled flexibly.

- Long running jobs can delay the scheduling of big jobs, because they block needed hardware resources.

- Small and short jobs can be scheduled to fill gaps.

- If a job finishes earlier than its requested run time, waiting short jobs can be rescheduled to start earlier (backfilling).
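Gap filling and backfilling can be sketched with the well-known EASY backfilling strategy: jobs start in priority order; when the first waiting job does not fit, it receives a reservation at the earliest time enough cores become free (the "shadow time"), and later jobs may jump ahead only if they fit now and either finish before that time or use cores the reserved job will not need. This is a simplified sketch, not Slurm's backfill scheduler: it treats the 16 cores of the example cluster as one pool (ignoring node boundaries), and `Job`, `easy_backfill` and the job names are hypothetical. Expected end times are computed from the requested time limits, because the scheduler cannot know actual run times in advance.

```python
from dataclasses import dataclass

TOTAL_CORES = 16  # the 4 x 4 example cluster, treated as one pool of cores


@dataclass
class Job:
    name: str
    cores: int
    walltime: float  # requested time limit in hours


def easy_backfill(now: float, queue: list[Job],
                  running: list[tuple[float, int]]) -> list[str]:
    """Return the names of the waiting jobs that may start at time `now`.

    queue:   waiting jobs, highest priority first
    running: (expected_end, cores) per running job, where
             expected_end = start time + requested time limit
    """
    running = list(running)  # work on a copy, leave the caller's list alone
    free = TOTAL_CORES - sum(cores for _, cores in running)
    waiting = list(queue)
    started: list[str] = []

    # 1. Start jobs strictly in priority order while they fit.
    while waiting and waiting[0].cores <= free:
        job = waiting.pop(0)
        free -= job.cores
        running.append((now + job.walltime, job.cores))
        started.append(job.name)
    if not waiting:
        return started

    # 2. Reserve the earliest start time ("shadow time") for the blocked head job.
    head = waiting[0]
    if head.cores > TOTAL_CORES:
        raise ValueError(f"{head.name} can never fit on this cluster")
    available = free
    shadow_time = now
    for end, cores in sorted(running):
        available += cores
        if available >= head.cores:
            shadow_time = end
            break
    extra = available - head.cores  # free at shadow time, not needed by head

    # 3. Backfill later jobs only if they cannot delay the reservation.
    for job in waiting[1:]:
        if job.cores > free:
            continue
        if now + job.walltime <= shadow_time:
            free -= job.cores  # finishes before the head job starts
            started.append(job.name)
        elif job.cores <= extra:
            free -= job.cores  # uses cores the head job will not need
            extra -= job.cores
            started.append(job.name)
    return started


# Example: blue (8 cores) runs until t = 4 h, red (4 cores) until t = 2 h.
running_jobs = [(4.0, 8), (2.0, 4)]
waiting_jobs = [Job("big", 16, 3.0), Job("small_short", 2, 1.0),
                Job("small_long", 2, 5.0), Job("tiny", 1, 3.0)]
print(easy_backfill(0.0, waiting_jobs, running_jobs))  # ['small_short', 'tiny']
```

Here `big` needs all 16 cores and gets a reservation at t = 4 h. `small_short` and `tiny` finish by then and are backfilled, while `small_long` would still be running at t = 4 h and is held back. If a running job ends earlier than its time limit, calling `easy_backfill` again with the updated `running` list lets waiting jobs start earlier.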

In addition to cores and time, a scheduler has to consider many more metrics, such as memory, co-processors, fair share and priority. Scheduling is therefore a multi-dimensional optimization problem.
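A minimal sketch of the multi-dimensional aspect: a request only fits on a node if every requested resource fits, so a node with free cores can still be unusable because its memory or GPUs are taken. The `Resources` record, the chosen dimensions (cores, memory in GB, GPUs) and `first_fitting_node` are assumptions for illustration; fair share and priority influence the order of jobs rather than whether a job fits, and are not modelled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resources:
    cores: int
    memory_gb: int
    gpus: int = 0

    def fits_into(self, free: "Resources") -> bool:
        """A request fits only if every single dimension fits."""
        return (
            self.cores <= free.cores
            and self.memory_gb <= free.memory_gb
            and self.gpus <= free.gpus
        )


def first_fitting_node(request: Resources, free_per_node: list[Resources]) -> Optional[int]:
    """Index of the first node whose free resources cover the request, else None."""
    for index, free in enumerate(free_per_node):
        if request.fits_into(free):
            return index
    return None


# Free resources per node (hypothetical values).
nodes = [Resources(cores=3, memory_gb=16),
         Resources(cores=1, memory_gb=120),
         Resources(cores=4, memory_gb=64, gpus=2)]
# Node 0 has enough cores but too little memory, node 1 too few cores.
print(first_fitting_node(Resources(cores=2, memory_gb=32), nodes))
```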

Resource Management Systems on bwHPC Clusters

Slurm: a complete resource management system with an integrated resource manager and scheduler.

All bwHPC clusters follow a fair-share policy. The waiting time of jobs depends on:

- your job's resource requests: Jobs with large resource requests wait longer for free resources.

- your usage history: High resource usage in a short time leads to a lower job priority.

- your university's share (bwUniCluster only): If the usage exceeds the university's share, the job priority decreases.
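The effect of usage history and shares can be illustrated with the classic fair-share formula described in the documentation of Slurm's multifactor priority plugin, F = 2^(-U/S), where U is the normalized, time-decayed usage and S the normalized share. This is a sketch under assumptions: the half-life value and the function names are hypothetical, and bwHPC clusters may be configured differently (Slurm also offers other fair-share algorithms, such as Fair Tree). The same idea applies one level up, where a university's usage is compared against the university's share.

```python
HALF_LIFE_DAYS = 7.0  # hypothetical value; each site configures its own decay


def decayed_usage(core_hours_by_age: dict[float, float],
                  half_life: float = HALF_LIFE_DAYS) -> float:
    """Weight past usage so that older usage counts less.

    Keys are ages in days, values are core-hours consumed at that age.
    Usage from `half_life` days ago counts half as much as usage from today.
    """
    return sum(hours * 0.5 ** (age / half_life)
               for age, hours in core_hours_by_age.items())


def fairshare_factor(usage: float, total_usage: float,
                     shares: float, total_shares: float) -> float:
    """F = 2 ** (-U / S) with normalized usage U and normalized share S.

    F is 1.0 without usage, 0.5 when usage equals the share, and approaches
    0 the more the share is exceeded. A higher F means a higher priority.
    """
    normalized_usage = usage / total_usage if total_usage > 0 else 0.0
    normalized_shares = shares / total_shares
    return 2 ** (-normalized_usage / normalized_shares)


# A user holding 1 of 4 shares who consumed a quarter of all (decayed) usage.
print(fairshare_factor(250.0, 1000.0, 1, 4))
```

Heavy recent usage raises the decayed usage and thus lowers F, while the same usage spread over a longer period has partly decayed away.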

bwHPC clusters have specific configurations for the resource management system concerning:

- Job submission and monitoring commands (via Slurm)

- Queues and limits for the available hardware

- Node access policy:

  - shared: compute nodes can be shared by jobs of different users

  - single user: compute nodes can be shared only by jobs of the same user

  - single job: compute nodes are allocated exclusively for a single job

FAQ: How many jobs can run on a single node at the same time?

This depends on the node access policy:

- On "shared nodes", more than one job can run simultaneously if the requested resources are available, and these jobs may have been submitted by different users.

- On "single user nodes", more than one job can run simultaneously, but all jobs have to be submitted by the same user.

- On "single job nodes", the access is exclusive for one job, irrespective of the number of requested cores.
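The three policies can be summarized in a small decision function. This is a hypothetical sketch, not how Slurm implements node sharing: `NodeAccessPolicy`, `RunningJob` and `can_start_on_node` are invented names, only cores are checked, and the default of 4 cores per node is taken from the example cluster above.

```python
from dataclasses import dataclass
from enum import Enum


class NodeAccessPolicy(Enum):
    SHARED = "shared"
    SINGLE_USER = "single user"
    SINGLE_JOB = "single job"


@dataclass
class RunningJob:
    user: str
    cores: int


def can_start_on_node(policy: NodeAccessPolicy, running: list[RunningJob],
                      user: str, cores: int, node_cores: int = 4) -> bool:
    """Decide whether a new job of `user` requesting `cores` may start on a node."""
    if policy is NodeAccessPolicy.SINGLE_JOB:
        # Exclusive access: any running job blocks the node, however few cores it uses.
        return not running and cores <= node_cores
    free = node_cores - sum(job.cores for job in running)
    if cores > free:
        return False  # not enough free cores, whatever the policy
    if policy is NodeAccessPolicy.SINGLE_USER:
        return all(job.user == user for job in running)
    return True  # SHARED: any user may use the remaining cores


node_jobs = [RunningJob("alice", 1)]
for policy in NodeAccessPolicy:
    print(policy.value, can_start_on_node(policy, node_jobs, "bob", 2))
```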