JUSTUS2/Hardware

Edited by K Siegmund

||

| (14 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

{{Justus2}} |

{{Justus2}} |

||

= System Architecture = |

= System Architecture = |

||

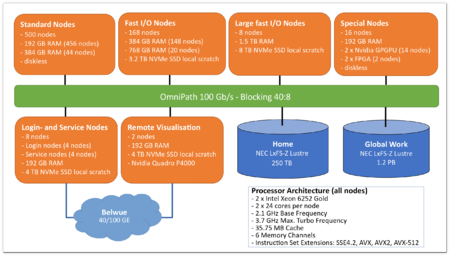

The HPC cluster is composed of [[JUSTUS2/login nodes|login nodes]], compute nodes and parallel storage systems connected by fast data networks. It is connected to the Internet via Baden Württemberg's extended LAN [https://www.belwue.de/ BelWü] (light blue). |

|||

The bwForCluster JUSTUS 2 is a state-wide high-performance compute resource dedicated to Computational Chemistry and Quantum Sciences. |

|||

{| style="margin: 1em auto 1em auto;" |

{| style="margin: 1em auto 1em auto;" |

||

|[[Image:JUSTUS2_Architecture.png|thumb|upright=1.5|right|Overview on JUSTUS 2 hardware architecture. All nodes are additionally connected by 1GB Ethernet. ]] |

|[[Image:JUSTUS2_Architecture.png|thumb|upright=1.5|right|Overview on JUSTUS 2 hardware architecture. All nodes are additionally connected by 1GB Ethernet. ]] |

||

|} |

|} |

||

Users log in on one of the four login nodes and have access to their home and working directories (darker blue) stored in the parallel file system [[Lustre]]. |

|||

Two additional special login visualization node enable users to visualize compute results directly on the cluster. |

|||

Calculations are done on the several types of compute nodes (top), which are accessed via the batch queuing system [[Slurm JUSTUS 2| Slurm ]]. |

|||

== Operating System and Software == |

== Operating System and Software == |

||

* Operating System: [https:// |

* Operating System: [https://rockylinux.org Rocky Linux 8] |

||

* Queuing System: [[Slurm JUSTUS 2| Slurm ]] (also see: [[bwForCluster JUSTUS 2 Slurm HOWTO|Slurm HOWTO (JUSTUS 2)] |

* Queuing System: [[Slurm JUSTUS 2| Slurm ]] (also see: [[bwForCluster JUSTUS 2 Slurm HOWTO|Slurm HOWTO (JUSTUS 2)]]) |

||

* [[Software_Modules_Lmod|Environment Modules] |

* [[Software_Modules_Lmod|Environment Modules]] for site specific scientific applications, developer tools and libraries |

||

== Common Hardware Features == |

== Common Hardware Features == |

||

The system consists of 702 nodes (692 compute |

The system consists of 702 nodes (692 compute nodes and 10 dedicated login, service and visualization nodes) with 2 processors each and a total of 33,696 processor cores. |

||

* Processor: 2 x Intel Xeon 6252 Gold (Cascade Lake, 24-core, 2.1 GHz) |

* Processor: 2 x Intel Xeon 6252 Gold (Cascade Lake, 24-core, 2.1 GHz) |

||

* Two processors per node (2 x 24 cores) |

* Two processors per node (2 x 24 cores) |

||

* [ |

* [https://en.wikipedia.org/wiki/Omni-Path Omni-Path] 100 Gbit/s interconnect |

||

== Node Specifications == |

== Node Specifications == |

||

| Line 77: | Line 84: | ||

|} |

|} |

||

Core numbers: [https://en.wikipedia.org/wiki/Hyper-threading hyperthreading] is enabled, so 48 core-nodes appear to have 96 cores. |

|||

'''Note:''' The special nodes with FPGA are not yet available. |

|||

== Storage Architecture == |

== Storage Architecture == |

||

| Line 200: | Line 207: | ||

On the login nodes $TMPDIR and $SCRATCH point to a local scratch directory on that node. This is located at /scratch/<username> and is also not shared across nodes. The data stored in there is private but will be deleted automatically if not accessed for 7 consecutive days. Like any other local scratch space, the data stored in there is NOT included in any backup. |

On the login nodes $TMPDIR and $SCRATCH point to a local scratch directory on that node. This is located at /scratch/<username> and is also not shared across nodes. The data stored in there is private but will be deleted automatically if not accessed for 7 consecutive days. Like any other local scratch space, the data stored in there is NOT included in any backup. |

||

[[Category:BwForCluster_JUSTUS_2]][[Category:Hardware_and_Architecture|bwForCluster_JUSTUS2]] |

|||

Latest revision as of 14:45, 16 April 2024

The bwForCluster JUSTUS 2 is a state-wide high-performance compute resource dedicated to Computational Chemistry and Quantum Sciences in Baden-Württemberg, Germany.

System Architecture

The HPC cluster is composed of login nodes, compute nodes and parallel storage systems connected by fast data networks. It is connected to the Internet via Baden-Württemberg's extended LAN BelWü (light blue).

Users log in to one of the four login nodes and have access to their home and working directories (darker blue) stored in the parallel file system Lustre.

Two additional special login nodes for visualization enable users to visualize compute results directly on the cluster.

Calculations run on several types of compute nodes (top), which are accessed via the batch queuing system Slurm.
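As a rough illustration of how work reaches the compute nodes, a minimal Slurm batch script might look like the sketch below. The job name, CPU count, memory and time limit are illustrative assumptions rather than JUSTUS 2 defaults; the limits that actually apply are described in the Slurm HOWTO (JUSTUS 2).

```shell
#!/bin/bash
# Minimal Slurm batch script for a single JUSTUS 2 compute node (sketch).
# Job name, task/CPU counts, memory and time limit are illustrative assumptions,
# not site defaults.
#SBATCH --job-name=my_job
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=8
#SBATCH --mem=16G
#SBATCH --time=01:00:00

# Use one OpenMP thread per allocated CPU; outside Slurm, default to 1 thread.
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"

echo "Job ${SLURM_JOB_ID:-none} on $(uname -n) using ${OMP_NUM_THREADS} thread(s)"
```

Such a script would be submitted with sbatch job.sh (the file name is arbitrary). Slurm reads the #SBATCH lines at submission; when the script is run directly with bash they are ordinary comments, which allows a quick local check.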

Operating System and Software

- Operating System: Rocky Linux 8

- Queuing System: Slurm (also see: Slurm HOWTO (JUSTUS 2))

- Environment Modules for site-specific scientific applications, developer tools and libraries

Common Hardware Features

The system consists of 702 nodes (692 compute nodes and 10 dedicated login, service and visualization nodes) with 2 processors each and a total of 33,696 processor cores.

- Processor: 2 x Intel Xeon Gold 6252 (Cascade Lake, 24-core, 2.1 GHz)

- Two processors per node (2 x 24 cores)

- Omni-Path 100 Gbit/s interconnect
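The node and core totals can be cross-checked against the per-type quantities in the Node Specifications table, assuming that standard, fast I/O, large fast I/O and special nodes make up the compute nodes, while the login/service and visualization nodes make up the 10 dedicated nodes (with this split the figures add up exactly):

```latex
\begin{aligned}
N_{\text{compute}} &= 456 + 44 + 148 + 20 + 8 + 14 + 2 = 692\\
N_{\text{total}} &= 692 + 8 + 2 = 702\\
N_{\text{cores}} &= 702 \times 2 \times 24 = 33{,}696
\end{aligned}
```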

Node Specifications

The nodes are tiered in terms of hardware configuration (amount of memory, local NVMe, hardware accelerators) in order to be able to serve a large range of different job requirements flexibly and efficiently.

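| | Standard Nodes | Fast I/O Nodes | Large Fast I/O Nodes | Special Nodes | Login- and Service Nodes | Visualization Nodes |
|---|---|---|---|---|---|---|
| Quantity | 456 / 44 | 148 / 20 | 8 | 14 / 2 | 8 | 2 |
| CPU Type | 2 x Intel Xeon Gold 6252 (Cascade Lake) | 2 x Intel Xeon Gold 6252 (Cascade Lake) | 2 x Intel Xeon Gold 6252 (Cascade Lake) | 2 x Intel Xeon Gold 6252 (Cascade Lake) | 2 x Intel Xeon Gold 6252 (Cascade Lake) | 2 x Intel Xeon Gold 6252 (Cascade Lake) |
| CPU Frequency | 2.1 GHz base / 3.7 GHz max. turbo | 2.1 GHz base / 3.7 GHz max. turbo | 2.1 GHz base / 3.7 GHz max. turbo | 2.1 GHz base / 3.7 GHz max. turbo | 2.1 GHz base / 3.7 GHz max. turbo | 2.1 GHz base / 3.7 GHz max. turbo |
| Cores per Node | 48 | 48 | 48 | 48 | 48 | 48 |
| Accelerator | --- | --- | --- | 2 x Nvidia V100S / FPGA | --- | Nvidia Quadro P4000 Graphics |
| Memory | 192 GB / 384 GB | 384 GB / 768 GB | 1536 GB | 192 GB | 192 GB | 192 GB |
| Local SSD | --- | 2 x 1.6 TB NVMe (RAID 0) | 5 x 1.6 TB NVMe (RAID 0) | --- | 2 x 2.0 TB NVMe (RAID 1) | 2 x 2.0 TB NVMe (RAID 1) |
| Interconnect | Omni-Path 100 | Omni-Path 100 | Omni-Path 100 | Omni-Path 100 | Omni-Path 100 | Omni-Path 100 |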

Core numbers: hyperthreading is enabled, so nodes with 48 physical cores appear to have 96 logical cores.
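To see the effect of hyperthreading on a node, the number of logical CPUs reported by the operating system can be compared with the threads per core reported by lscpu. This is a sketch using the standard nproc and lscpu utilities; per the note above, a JUSTUS 2 compute node would be expected to report 96 logical CPUs and 2 threads per core.

```shell
# Compare logical CPUs with physical cores on the current node (sketch).
logical=$(nproc --all)

# Take the first "Thread(s) per core" value from lscpu (C locale for stable labels).
threads_per_core=$(LC_ALL=C lscpu 2>/dev/null \
  | awk -F: '/Thread\(s\) per core/ {gsub(/[[:space:]]/, "", $2); print $2; exit}')
threads_per_core=${threads_per_core:-1}   # fall back to 1 if lscpu is unavailable

physical=$(( logical / threads_per_core ))
echo "logical CPUs: $logical, threads per core: $threads_per_core, physical cores: $physical"
```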

Storage Architecture

The bwForCluster JUSTUS 2 provides two independent distributed parallel file systems, one for the users' home directories ($HOME) and another one for global workspaces. Both are based on Lustre and can be accessed in parallel from any node. Additionally, some compute nodes (fast I/O nodes) provide locally attached NVMe storage devices for I/O-demanding applications.

| | $HOME | Workspace | $SCRATCH | $TMPDIR |
|---|---|---|---|---|
| Visibility | global | global | node local | node local |
| Lifetime | permanent | workspace lifetime (max. 90 days, extension possible) | batch job walltime | batch job walltime |
| Total Capacity | 250 TB | 1200 TB | 3000 GB / 7300 GB per node | max. half of RAM per node |
| Disk Quotas | 400 GB per user | 20 TB per user | none | none |
| File Quotas | 2,000,000 files per user | 5,000,000 files per user | none | none |
| Backup | yes | no | no | no |

- global: accessible from all nodes
- node local: accessible from the allocated node only
- permanent: files are stored permanently (as long as the user can access the system)
- batch job walltime: files are removed at the end of the batch job

Note: Disk and file quota limits are soft limits and are subject to change. Quotas feature a grace period where users may exceed their limits to some extent (currently 20%) for a brief period of time (currently 4 weeks).
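To estimate how far usage may go during the grace period, the 20% tolerance can be applied to each soft limit. The helper below is a hypothetical sketch (grace_limit is not a site-provided tool) and assumes the tolerance is a flat percentage on top of the soft limit, rounded down to an integer.

```shell
# Hypothetical helper: upper bound reachable during the quota grace period,
# assuming the tolerance is a flat 20 % on top of the soft limit (integer result).
grace_limit() {
  local soft_limit=$1
  echo $(( soft_limit * 120 / 100 ))
}

grace_limit 400       # $HOME disk quota in GB     -> 480
grace_limit 20        # workspace disk quota in TB -> 24
grace_limit 2000000   # $HOME file quota           -> 2400000
```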

$HOME

Home directories are meant for permanent storage of files that are in continuous use, such as source code, configuration files and executable programs; the contents of home directories are backed up on a regular basis.

Current disk usage and quota status of the home directory can be checked with the command lfs quota -h -u $USER /lustre/home.

Note: Compute jobs on nodes must not write temporary data to $HOME. Instead they should use the local $SCRATCH or $TMPDIR directories for very I/O intensive jobs and workspaces for less I/O intensive jobs.

Workspaces

Workspaces are created with the workspace tools. Each workspace is a directory with a limited lifetime on the parallel global work file system. When this lifetime is reached, the workspace is deleted automatically after a grace period. Users are notified by daily e-mail reminders starting 7 days before a workspace expires. Workspaces can (and must) be extended to prevent deletion at the expiration date.

Defaults and maximum values

| Setting | Value |
|---|---|
| Default lifetime (days) | 7 |
| Maximum lifetime (days) | 90 |
| Maximum extensions | unlimited |

Examples

| Command | Action |
|---|---|
| ws_allocate my_workspace 30 | Allocate a workspace named "my_workspace" for 30 days. |
| ws_list | List all your workspaces. |
| ws_find my_workspace | Get the absolute path of workspace "my_workspace". |
| ws_extend my_workspace 30 | Set the expiration date of workspace "my_workspace" to 30 days (regardless of remaining days). |
| ws_release my_workspace | Manually erase your workspace "my_workspace" and release the used space on storage (remove data first for immediate deletion of the data). |
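In job scripts it is often convenient to look up a workspace and create it only if it does not exist yet. The sketch below combines ws_find and ws_allocate in a hypothetical helper function, ensure_workspace; it assumes that ws_find prints nothing when the workspace does not exist, which should be checked against the workspace tools' own documentation.

```shell
# Hypothetical helper: print the path of a workspace, allocating it first if needed.
# Assumes ws_find prints nothing for a missing workspace.
ensure_workspace() {
  local name=$1 days=$2 path
  path=$(ws_find "$name" 2>/dev/null)
  if [ -z "$path" ]; then
    ws_allocate "$name" "$days" >/dev/null   # create it with the requested lifetime
    path=$(ws_find "$name")
  fi
  echo "$path"
}

# Example use inside a job script ("my_workspace" and 30 days as in the table above):
# WORKDIR=$(ensure_workspace my_workspace 30) && cd "$WORKDIR"
```

Note that an existing workspace is returned as-is; extending it before expiry still requires ws_extend.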

Current disk usage and quota status on the workspace file system can be checked with the command lfs quota -h -u $USER /lustre/work.
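Since quotas are reported per file system, a small shell function can run the two lfs quota commands given on this page in one go. The function name show_quotas is hypothetical and not a site-provided command; the mount points are the ones documented above.

```shell
# Hypothetical helper: show quota usage for the current user on both Lustre file systems.
show_quotas() {
  local fs
  for fs in /lustre/home /lustre/work; do
    echo "== $fs =="
    lfs quota -h -u "$USER" "$fs"
  done
}

# Usage on a login node:
# show_quotas
```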

Note: The parallel work file system works best for medium to large file sizes and non-random access patterns. Large numbers of small files significantly decrease I/O performance and must be avoided; consider using local scratch for such workloads.

$SCRATCH and $TMPDIR

On compute nodes the environment variables $SCRATCH and $TMPDIR always point to local scratch space that is not shared across nodes.

$TMPDIR always points to a directory on a local RAM disk, which provides up to 50% of the total RAM capacity of the node. Thus, data written to $TMPDIR always counts against the job's allocated memory.

$SCRATCH points to a directory on locally attached NVMe devices if (and only if) local scratch has been explicitly requested at job submission (i.e. with the --gres=scratch:nnn option). If no local scratch has been requested at job submission, $SCRATCH points to the very same directory as $TMPDIR (i.e. to the RAM disk).
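A sketch of a batch script that requests node-local NVMe scratch and stages data through $SCRATCH. The scratch amount (assumed here to be given in GB), the time limit and the file names in the commented-out lines are hypothetical. Because $SCRATCH only lives for the job's walltime, results have to be copied back to a workspace or $HOME before the job ends.

```shell
#!/bin/bash
# Sketch: request node-local NVMe scratch and stage data through $SCRATCH.
# The scratch amount (assumed to be in GB), the time limit and the commented-out
# file names are hypothetical.
#SBATCH --nodes=1
#SBATCH --time=04:00:00
#SBATCH --gres=scratch:100

# Inside the job $SCRATCH is set by the system; when the script is tried outside
# Slurm, fall back to a fresh temporary directory instead.
SCRATCH_DIR="${SCRATCH:-$(mktemp -d)}"
cd "$SCRATCH_DIR" || exit 1
echo "Working in $SCRATCH_DIR"

# cp "$WORKDIR/input.dat" .            # stage input from a workspace (hypothetical)
# ./my_program input.dat > output.dat  # run the I/O-intensive step on local NVMe
# cp output.dat "$WORKDIR/"            # copy results back before the job ends
```

Without the --gres=scratch request, the same script would still run, but $SCRATCH would then be the RAM disk, and anything written there would count against the job's memory.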

On the login nodes $TMPDIR and $SCRATCH point to a local scratch directory on that node. This is located at /scratch/<username> and is also not shared across nodes. The data stored in there is private but will be deleted automatically if not accessed for 7 consecutive days. Like any other local scratch space, the data stored in there is NOT included in any backup.