BwUniCluster3.0/Data Migration Guide


Revision as of 14:00, 4 February 2025

Summary of changes

bwUniCluster 3.0 is located on the North Campus so that it can use the hot water cooling available there, which makes HPC operation more energy-efficient and more environmentally friendly. bwUniCluster 3.0 has a new file system.

Hardware

Policy

Software

Data Migration

bwUniCluster 3.0 features a completely new file system, and there is no automatic migration of user data. Users have to actively migrate their data to the new file system. For a limited time (approx. 3 months after commissioning), however, the old file system remains in operation and is mounted on the new system, which makes the data transfer relatively quick and easy. Please be aware of the new, slightly more stringent quota policies. Before any data is copied, the new quotas must be checked to see whether they are sufficient to hold the old data. If there are quota issues, users should review their data lifecycle management.

Assisted data migration

To facilitate the transfer of data between the old and new HOME directories and workspaces, we provide a script that guides you through the copy process or even automates the transfer: migrate_data_uc2_uc3.sh

In order to mitigate the effects of the quota changes, the script first performs a quota check. If a quota check detects that the storage capacity or the number of inodes has been exceeded, the program terminates with an error message.

If the quota check passes, the data migration command is displayed. For the fearless, the -x flag can even be used to start the copy process directly.

The script can automate the data transfer to the new HOME directory. If you also intend to transfer data that resides in workspaces, the script can automate this, too. However, the target workspaces on the new system have to be set up manually first!

Options of the script

- -h provides detailed information about the script's usage
- -v provides verbose output including the quota checks
- -x executes the migration if the quota checks did not fail

If the data migration fails due to a time limit, or if you do not intend to do the data transfer interactively, the help message (-h) provides an example of how to run the data transfer as a batch job. This even accelerates the copy process because the job has exclusive use of a compute node.

Attention

We explicitly ask users NOT to migrate their old dot files and dot directories, which may contain settings that are not compatible with the new system (.bashrc, .config/, ...). The script therefore excludes these files from the migration. We recommend that you start with a new set of default configuration files and adapt them to your needs as required. See the section Migration of Software and Settings.
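To see which entries this exclusion affects before migrating, the top-level dot files and dot directories of the old home directory can be listed. The following is a minimal sketch and not part of the migration script; the function name `list_dot_entries` is hypothetical, and the path in the usage comment is the sample old home directory used in the examples of this guide.

```shell
# list_dot_entries DIR: print the names of all top-level dot files and
# dot directories in DIR, i.e. names that start with a dot.
list_dot_entries() {
    for entry in "$1"/.[!.]* "$1"/..?*; do
        # Skip patterns that matched nothing (the shell leaves them as-is).
        [ -e "$entry" ] || [ -L "$entry" ] || continue
        printf '%s\n' "${entry##*/}"
    done
}

# Example call (sample path, replace with your own old home directory):
# list_dot_entries /pfs/data5/home/kit/scc/ab1234
```

Anything in this list that still holds data you need has to be copied manually, since the recommended rsync options skip it.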

Examples

- Getting the help text

$ migrate_data_uc2_uc3.sh -h

migrate_data_uc2_uc3.sh [-h|--help] [-d|--debug] [-x|--execute] [-f|--force] [-v|--verbose] [-w|--workspace <name>]

Without options this script will print the recommended rsync command which can be used to copy data

from the home directory of bwUniCluster 2.0 to bwUniCluster3.0. You can either select different

rsync options (see "man rsync" for explanations) or start the script again with the option "-x"

in oder to execute the rsync command. Note that the recommended options exclude files and directories

on the old home directory path which start with a dot, for example .bashrc. This is done because

these files and directories typically include configuration and cache data which is probably different

on the new system. If these dot files and directories include data which is still needed you should

migrate it manually.

The script can also be used to migrate the data of a workspace, see option "-w". Here the option

"-x" is only alloed if the old and the new workspace has the same name. If you want to modify the

name of the old workspace just use the printed rsync command and select an appropriate target directory.

Note that you have to create the new workspace beforehand.

You should start the script inside a batch job since limits on the login node will otherwise probably

abort the actions and since the login node will otherwise be overloaded.

Example for starting the batch job:

sbatch -p cpu -N 1 -t 24:00:00 --mem=30gb /pfs/data6/scripts/migrate_data_uc2_uc3.sh -x

Options:

-d|--debug Provide debug messages.

-f|--force Continue if capacity or inode usage on old file system are higher than

quota limits on new file system.

-h|--help This help.

-x|--execute Execute rsync command. If this option is not set only print rsync command to terminal.

-v|--verbose Provide verbose messages.

-w|--workspace <name> Do rsync for the workspace <name>. If this option is not set do it for your home directory.

- Give verbose hints (quota OK)

$ migrate_data_uc2_uc3.sh -v

Doing the actions for the home directoy.

Checking if capacity and inode usage on the old home file system is lower than the limits on the new file system.

✅ Quota checks for capacity and inode usage of the home directoy have passed.

Recommended command line for the rsync command:

rsync -x --numeric-ids -S -rlptoD -H -A --exclude='/.*' /pfs/data5/home/kit/scc/ab1234/ /home/ka/ka_scc/ka_ab1234/

- Give verbose hints (quota not OK)

$ migrate_data_uc2_uc3.sh -v

Doing the actions for the home directoy.

Checking if capacity and inode usage on the old home file system is lower than the limits on the new file system.

❌ Exiting because old capacity usage (563281380) is higher than new capacity limit (524288000).

Please remove data of your old home directory (/pfs/data5/home/kit/scc/ab1234).

You can also use the force option if you believe that the new limit is sufficient.

Manual migration

If the guided migration fails due to quota issues, users have to reduce the number of inodes or the amount of data. A manual check of the consumed resources is helpful.

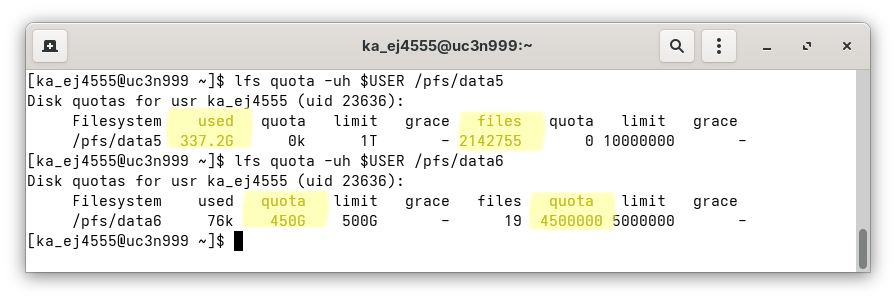

1. Check the Quota of HOME

Show user quota of the old $HOME:

$ lfs quota -uh $USER /pfs/data5

Show user quota of the new $HOME:

$ lfs quota -uh $USER /pfs/data6

On the new file system, the limits for capacity and inodes must be higher than the capacity and the number of inodes used on the old file system; otherwise I/O errors will occur during the data transfer. Pay attention to the used, files, and quota columns of the respective outputs.
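The comparison described above can be sketched as a small shell helper. This is only an illustration, not the check the migration script performs internally; the function name `fits_quota` is hypothetical. It expects plain integers in the same unit, so the values should be read from `lfs quota` output produced without the -h option (an assumption: with -h the numbers carry unit suffixes and cannot be compared directly).

```shell
# fits_quota USED LIMIT: succeed only if USED is strictly below LIMIT.
# Both values must be plain integers in the same unit.
fits_quota() {
    [ "$1" -lt "$2" ]
}

# Capacity values taken from the failed example run in this guide:
if fits_quota 563281380 524288000; then
    echo "capacity fits"
else
    echo "capacity exceeds the new limit"
fi
```

The same helper can be called a second time with the number of files in use and the inode limit.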

2. Cleanup

If the capacity limit or the maximum number of inodes is exceeded, now is the right time to clean up.

Either delete data in the source directory before the rsync command or use additional --exclude statements during rsync.

Hint:

If the inode limit is exceeded, you should, for example, delete all existing Python virtual environments, which often contain a massive number of small files and which are not functional on the new system anyway.
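As a starting point for such a cleanup, the sketch below looks for virtual environments and estimates how many inodes a directory consumes. It rests on assumptions: the function names are hypothetical, environments are recognized only by the `pyvenv.cfg` file that `python -m venv` writes (environments created by other tools may lack it), and file names are assumed not to contain newlines.

```shell
# list_venvs DIR: print each directory below DIR that contains a
# pyvenv.cfg file, the marker written by "python -m venv".
list_venvs() {
    find "$1" -type f -name pyvenv.cfg | while IFS= read -r cfg; do
        printf '%s\n' "${cfg%/pyvenv.cfg}"
    done
}

# count_entries DIR: number of files and directories in DIR, including
# DIR itself, as a rough estimate of the inodes it consumes.
count_entries() {
    find "$1" | wc -l
}

# Example calls (sample path, replace with your own old home directory):
# list_venvs /pfs/data5/home/kit/scc/ab1234
# count_entries /pfs/data5/home/kit/scc/ab1234
```

Directories reported by `list_venvs` can then be deleted or excluded from the rsync run, and `count_entries` shows which of them are worth the effort.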

3. Migrate the data

The easiest way to get an rsync command that fits your needs is to take the command printed by migrate_data_uc2_uc3.sh and, if necessary, add further --exclude statements.
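A minimal sketch of this approach follows. It only prints a command line and runs nothing; the function name `print_rsync_cmd` and the pattern `/venvs/` are hypothetical, while the rsync options are the ones from the sample output of the migration script in this guide. Patterns containing single quotes are not handled.

```shell
# print_rsync_cmd SRC DST [PATTERN...]: print the recommended rsync call
# with one additional --exclude per PATTERN, without running it.
print_rsync_cmd() {
    src=$1
    dst=$2
    shift 2
    cmd="rsync -x --numeric-ids -S -rlptoD -H -A --exclude='/.*'"
    for pattern in "$@"; do
        cmd="$cmd --exclude='$pattern'"
    done
    printf '%s %s %s\n' "$cmd" "$src" "$dst"
}

# Example: additionally skip a top-level directory named venvs.
print_rsync_cmd /pfs/data5/home/kit/scc/ab1234/ /home/ka/ka_scc/ka_ab1234/ /venvs/
```

A leading slash anchors a pattern at the top of the source directory, and a trailing slash restricts it to directories. Adding -n (--dry-run) together with -v to the printed command makes rsync list what it would transfer without copying anything.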

4. Migrate workspace data

Show user quota of the old workspaces:

$ lfs quota -uh $USER /pfs/work7

Show user quota of the new workspaces:

$ lfs quota -uh $USER /pfs/work9
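Once the quotas are sufficient and the target workspace has been created on the new system under the same name, the workspace transfer can be submitted as a batch job. The sketch below combines the sbatch options from the script's help text with the -x and -w options; the function name `submit_workspace_migration`, the `SBATCH` override variable, and the workspace name `my_workspace` are hypothetical.

```shell
# Set SBATCH=echo to preview the submission without contacting Slurm.
SBATCH=${SBATCH:-sbatch}

# submit_workspace_migration NAME: submit a batch job that runs the
# migration script with -x for the workspace NAME.
submit_workspace_migration() {
    "$SBATCH" -p cpu -N 1 -t 24:00:00 --mem=30gb \
        /pfs/data6/scripts/migrate_data_uc2_uc3.sh -x -w "$1"
}

# Example call with a hypothetical workspace name:
# submit_workspace_migration my_workspace
```

Running `migrate_data_uc2_uc3.sh -w <name>` without -x beforehand prints the rsync command, which is also the way to go if the new workspace has a different name.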

Migration of Software and Settings

We explicitly exclude all dot files and dot directories (.bashrc, .config/, ...)