NEMO/Hardware


Latest revision as of 15:00, 22 June 2023

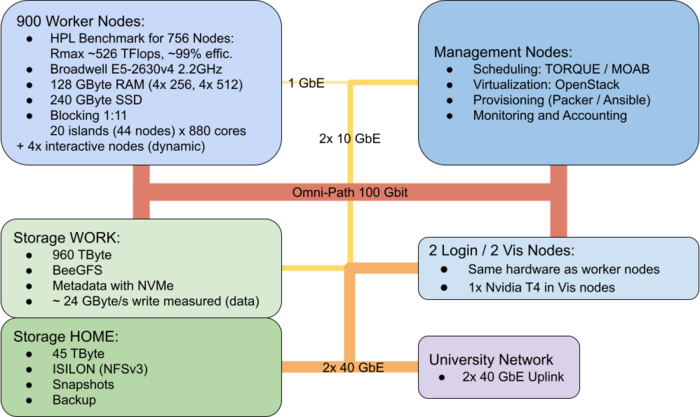

System Architecture

The bwForCluster NEMO is a high-performance compute resource with a high-speed interconnect. It is intended for research computing by researchers from the fields Neuroscience, Elementary Particle Physics, Microsystems Engineering and Materials Science (NEMO).

Figure: bwForCluster NEMO Schematic

Operating System and Software

- Operating System: CentOS Linux 7 (similar to RHEL 7)

- Queuing System: MOAB / Torque (see NEMO/Moab for help)

- (Scientific) Libraries and Software: Environment Modules

- Own Python Environments: Conda

Compute and Special Purpose Nodes

For researchers from the scientific fields Neuroscience, Elementary Particle Physics, Microsystems Engineering and Materials Science the bwForCluster NEMO offers 908 compute nodes plus several special purpose nodes for login, interactive jobs, visualization and machine learning.

See bwForCluster NEMO Specific Batch Features for how to submit jobs to the GPU nodes.

Node specification:

| | Compute Nodes / Interactive Nodes | Memory Nodes | Login | Visualization Nodes | AMD + T4 Nodes | GPU Node |
|---|---|---|---|---|---|---|
| Quantity | 900 / 4 interactive | 4 med mem / 4 high mem | 2 login | 2 vis | 4 | 1 |
| Processors | 2 x Intel Xeon E5-2630v4 (Broadwell) | 2 x Intel Xeon E5-2630v4 (Broadwell) | 2 x Intel Xeon E5-2630v4 (Broadwell) | 2 x Intel Xeon E5-2630v4 (Broadwell) | 2 x AMD EPYC2 7742 | 1 x AMD EPYC 7551P |
| Processor Base Frequency/Boost Frequency (all-cores) (GHz) | 2.2/2.4 | 2.2/2.4 | 2.2/2.4 | 2.2/2.4 | 2.25/TBD | 2.0/TBD |
| Number of Cores per Node | 20 | 20 | 20 | 20 | 128 | 64 (32 physical cores with SMT enabled) |
| Accelerator | --- | --- | --- | 1 x Nvidia T4 | 1 x Nvidia T4 | 8 x Nvidia V100 32 GiB |
| Working Memory DDR4 (GB) | 128 | 256 / 512 | 128 | 128 | 512 | 256 |
| Local SSD (GB) | 240 | 240 | 240 | 240 | 480 | 960 |
| Interconnect | Omni-Path 100 | Omni-Path 100 | Omni-Path 100 | --- | Omni-Path 100 | Omni-Path 100 |
| Job examples | -l nodes=x:ppn=20:pmem=6gb | -l nodes=x:ppn=20:pmem=6gb | ssh login.nemo.uni-freiburg.de (*) | ssh vis.nemo.uni-freiburg.de (*) | -l nodes=1:ppn=128:pmem=3800mb(:gpus=1) | -l nodes=1:ppn=8:pmem=3gb:gpus=1 -q gpu |

(*) No job is necessary; just ssh to these machines. Available host names: login1 (alias login), login2, vis1 (alias vis), vis2.

NOTE: The operating system needs memory as well, so only about 120 / 250 / 500 GB of RAM are available for jobs on nodes with 128 / 256 / 512 GB. The maximum memory request for standard compute nodes is therefore '-l pmem=6GB' (20 cores × 6 GB = 120 GB).
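As a quick check of the arithmetic behind these limits, the per-core request can be derived by dividing the memory usable by jobs by the number of cores per node. The following shell sketch is illustrative only: the helper name `max_pmem_mb` is hypothetical, and it counts 1 GB as 1000 MB, which errs on the low side if the scheduler uses binary units.

```bash
#!/bin/bash
# Hypothetical helper: largest per-core memory request (pmem, in MB) that
# fits into the memory a node leaves for jobs.
# Usage: max_pmem_mb <usable_GB_per_node> <cores_per_node>
max_pmem_mb() {
  local usable_gb=$1 cores=$2
  # 1 GB is counted as 1000 MB; integer division rounds down
  echo $(( usable_gb * 1000 / cores ))
}

max_pmem_mb 120 20    # standard compute node: about 120 GB usable, 20 cores
max_pmem_mb 500 128   # AMD + T4 node: about 500 GB usable, 128 cores
```

On a standard compute node this gives 6000 MB, i.e. the 6 GB per core behind '-l pmem=6gb'. For an AMD + T4 node it gives 3906 MB, so the 3800mb used in that node's job example stays slightly below the limit.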

Storage Architecture

The bwForCluster NEMO has two separate storage systems: one for the users' home directories ($HOME) and one for workspaces. The home directory is limited in capacity and in parallel access, but offers snapshots and backup of your files. The workspace storage is a parallel file system based on BeeGFS; it offers fast parallel access from many nodes and a larger capacity than the home directory. Additionally, each compute node provides fast temporary storage on its node-local solid state disk (SSD), available via the $TMPDIR environment variable.

| | $HOME | Workspace | $TMPDIR |
|---|---|---|---|
| Visibility | global (GbE) | global (Omni-Path) | node local |
| Lifetime | permanent | workspace lifetime (max. 100 days, extension possible) | batch job walltime |
| Capacity | 45 TB | 960 TB | 200 GB per node |
| Quotas | 100 GB per user | 10 TB / 4 million chunks per user (~1 million files) | none |
| Backup | snapshots + tape backup | no | no |

- global: all nodes access the same file system

- local: each node has its own file system

- permanent: files are stored permanently

- batch job walltime: files are removed at the end of the batch job

$HOME

Home directories are meant for permanent storage of files that are used repeatedly, such as source code, configuration files and executable programs; their content is backed up regularly. The files in $HOME are stored on an Isilon OneFS system and provided to all nodes via NFS.

NOTE: Compute jobs must not write temporary data to $HOME. Instead, they should use the node-local $TMPDIR directory for I/O-heavy use cases and workspaces for less I/O-intensive multi-node jobs.

Snapshots

Snapshots are created for the data in your $HOME directory. A snapshot is a copy of the state of your $HOME at the time it was taken, so you can restore earlier versions of files and directories. To see the snapshots, change into the '.snapshot' directory inside any of your directories in $HOME. The '.snapshot' directory is not a real directory: it does not appear in listings (e.g. 'ls -la') and cannot be seen from the outside, so you have to change into it directly:

    fr_xyz0000@login2 ~> cd .snapshot
    fr_xyz0000@login2 ~/.snapshot>
    fr_xyz0000@login2 ~/.snapshot> cd
    fr_xyz0000@login2 ~> cd test/.snapshot
    fr_xyz0000@login2 ~/test/.snapshot>
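Restoring a single file is then a matter of copying it out of a snapshot. The sketch below assumes that each entry inside `~/.snapshot` is a directory that mirrors your `$HOME` as it was when the snapshot was taken; the snapshot names are site-specific, and the helper `restore_from_snapshot` is hypothetical. It writes the old version next to the current file (with a `.restored` suffix) instead of overwriting it.

```bash
#!/bin/bash
# Hypothetical helper: copy one file out of a $HOME snapshot.
# Assumption: every entry in ~/.snapshot is a directory that mirrors $HOME
# at the time the snapshot was taken.
# Usage: restore_from_snapshot <snapshot_name> <path_relative_to_HOME>
restore_from_snapshot() {
  local snap=$1 relpath=$2
  local src="$HOME/.snapshot/$snap/$relpath"
  local dest="$HOME/$relpath.restored"   # do not overwrite the current file
  cp -p "$src" "$dest" && echo "$dest"
}
```

For example, `restore_from_snapshot <snapshot_name> test/input.txt` copies the snapshot version to `~/test/input.txt.restored`, where `<snapshot_name>` is one of the entries shown by `ls` after `cd ~/.snapshot` (assumed to be listable once you are inside it).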

Workspaces

Workspaces are created with the workspace tools, which generate a directory on the parallel storage with a limited lifetime. When this lifetime is reached, the workspace is deleted automatically after a grace period. Workspaces can be extended to prevent deletion, and you can create reminders and calendar entries to avoid accidental removal.

To create a workspace, you need to supply a name for your workspace and a lifetime in days. For more information, read the corresponding help, e.g. ws_allocate -h.

Defaults and maximum values:

| Parameter | Value |
|---|---|
| Default and maximum lifetime (days) | 100 |
| Maximum extensions | 99 |

Examples:

| Command | Action |

|---|---|

| ws_allocate my_workspace 100 | Allocate a workspace named "my_workspace" for 100 days. |

| ws_list | List all your workspaces. |

| ws_find my_workspace | Get absolute path of workspace "my_workspace". |

| ws_extend my_workspace 100 | Set the expiration date of workspace "my_workspace" to 100 days from now (regardless of the remaining days). |

| ws_release my_workspace | Manually erase your workspace "my_workspace" and release the used space on storage (remove the data first for immediate deletion). |

| ws_unlock my_workspace | Unlock the expired workspace "my_workspace" during the grace period of a few days after expiration (the grace period depends on storage capacity; see ws_list for expired workspaces). |
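In job scripts it is convenient to refer to a workspace by its absolute path. The following shell sketch combines `ws_find` and `ws_allocate` from the table above so that a script reuses an existing workspace or creates it on first use. The helper name `ensure_workspace` is hypothetical, and it assumes that `ws_find` prints nothing when the workspace does not exist.

```bash
#!/bin/bash
# Hypothetical helper: print the absolute path of a workspace, allocating
# it first if it does not exist yet.
# Assumption: ws_find prints nothing when the workspace does not exist.
# Usage: ensure_workspace <name> <lifetime_in_days>
ensure_workspace() {
  local name=$1 days=$2 path
  path=$(ws_find "$name" 2>/dev/null)
  if [ -z "$path" ]; then
    # allocate quietly, then look the path up again with ws_find
    ws_allocate "$name" "$days" >/dev/null || return 1
    path=$(ws_find "$name")
  fi
  echo "$path"
}
```

Usage: `WS=$(ensure_workspace my_workspace 100) && cd "$WS"`. This does not extend an existing workspace; use `ws_extend` for that.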

Sharing Workspace Data within your Workgroup

Data in workspaces can be shared with colleagues. Making workspaces world-readable or world-writable using standard Unix access rights is strongly discouraged; use ACLs (Access Control Lists) instead.

Best practices with respect to ACL usage:

- Keep in mind that ACLs take precedence over standard Unix access rights

- Use a single set of rules at the level of a workspace

- Make the entire workspace either read-only or read-write for individual co-workers

- Optional: Make the entire workspace read-only for your project/Rechenvorhaben (group bwYYMNNN), e.g. for large input data

- If a more granular set of rules is necessary, consider using additional workspaces

- The owner of a workspace is responsible for its content and management

- If you set ACLs for individual co-workers, set ACLs for your own user first; otherwise you won't be able to access the files of your co-workers!

Note that ls (list directory contents) indicates ACLs only in long format (ls -l), as a "+" sign after the standard Unix access rights. Use getfacl to see the ACLs of directories and files.

Examples with regard to "my_workspace":

| Command | Action |

|---|---|

| getfacl $(ws_find my_workspace) | List access rights on the workspace named "my_workspace" |

| setfacl -Rm u:fr_xy1001:rX,d:u:fr_xy1001:rX $(ws_find my_workspace) | Grant user "fr_xy1001" read-only access to the workspace named "my_workspace" |

| setfacl -Rm u:fr_me0000:rwX,d:u:fr_me0000:rwX $(ws_find my_workspace)<br>setfacl -Rm u:fr_xy1001:rwX,d:u:fr_xy1001:rwX $(ws_find my_workspace) | Grant your own user "fr_me0000" and the user "fr_xy1001" read and write access to the workspace named "my_workspace" (see the best practices above) |

| setfacl -Rm g:bw16e001:rX,d:g:bw16e001:rX $(ws_find my_workspace) | Grant group (project/Rechenvorhaben) "bw16e001" read-only access to the workspace named "my_workspace" |

| setfacl -Rm g:bw16e001:rwX,d:g:bw16e001:rwX $(ws_find my_workspace) | Grant group (project/Rechenvorhaben) "bw16e001" read and write access to the workspace named "my_workspace" |

| setfacl -Rb $(ws_find my_workspace) | Remove all ACL rights. Standard Unix access rights apply again. |
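The best practice of setting your own ACL before your co-worker's can be wrapped in a small shell function, shown here as an unofficial sketch. The function name `share_workspace_rw` is hypothetical; it uses only `ws_find`, `setfacl` and `getfacl` exactly as in the table above, with `$USER` standing in for your own user name (e.g. fr_me0000).

```bash
#!/bin/bash
# Hypothetical helper: give one co-worker read/write access to a whole
# workspace, setting the ACL for your own user first.
# Usage: share_workspace_rw <workspace_name> <colleague_username>
share_workspace_rw() {
  local ws_name=$1 colleague=$2 dir
  dir=$(ws_find "$ws_name") || return 1
  [ -n "$dir" ] || return 1
  # 1) your own user, so you keep access to files your colleague creates
  setfacl -Rm "u:$USER:rwX,d:u:$USER:rwX" "$dir" || return 1
  # 2) the co-worker; the "d:" entries form the default ACL inherited by new files
  setfacl -Rm "u:$colleague:rwX,d:u:$colleague:rwX" "$dir" || return 1
  getfacl "$dir"   # show the resulting ACLs
}
```

For example, `share_workspace_rw my_workspace fr_xy1001` grants fr_xy1001 read and write access and finally prints the resulting ACLs.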

Local Disk Space $TMPDIR

All compute nodes are equipped with a local SSD with 240 GB capacity (usable 200 GB). During computation the environment variable $TMPDIR points to this local disk space. The data will become unavailable as soon as the job has finished.
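A batch job that uses this disk typically copies its input from a workspace to `$TMPDIR`, works there, and copies the results back before the job ends. The job script below is a minimal sketch for a standard compute node, reusing the resource request from the node table; the workspace name `my_workspace`, the file `input.dat`, and `sort` as a stand-in program are hypothetical placeholders, and the walltime is an arbitrary example value.

```bash
#!/bin/bash
#PBS -l nodes=1:ppn=20:pmem=6gb
#PBS -l walltime=01:00:00
# Sketch of the "stage in, compute on $TMPDIR, stage out" pattern.
# Hypothetical placeholders: workspace "my_workspace" holding input.dat,
# and "sort" standing in for your real program.
set -eu

run_in_tmpdir() {
  local ws
  ws=$(ws_find my_workspace)        # absolute path of the workspace
  cp "$ws/input.dat" "$TMPDIR/"     # stage input onto the node-local SSD
  cd "$TMPDIR"
  sort input.dat > result.dat       # I/O-heavy work runs on the local SSD
  cp result.dat "$ws/"              # copy results back: $TMPDIR is gone after the job
}

# Only run when started by the batch system (Torque sets PBS_JOBID).
if [ -n "${PBS_JOBID:-}" ]; then
  run_in_tmpdir
fi
```

Submit it with your usual MOAB/Torque submit command (see NEMO/Moab). The `PBS_JOBID` check only keeps the script from doing anything when it is run outside a batch job.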

Limits and best practices

To keep the system in a working state for all users, please respect the limits ("quotas") on the parallel file system, i.e. on workspaces.

Per user, the following restrictions apply:

- Maximum size: 10 TB

- Maximum number of file chunks: 4,000,000

You can check your current usage in the workspaces with the following command:

    nemoquota

Note that the number of file chunks is roughly 4 times the number of files, so the limit of 4,000,000 chunks corresponds to about 1,000,000 files.
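To find out which directories contribute most to the chunk count, it can help to count files per directory. The sketch below applies the rule of thumb of about 4 chunks per file to a directory tree; the helpers `estimate_chunks` and `chunks_by_subdir` are hypothetical and give only a rough estimate, so `nemoquota` remains the authoritative number.

```bash
#!/bin/bash
# Hypothetical helper: rough chunk estimate for a directory tree
# (about 4 chunks per file). nemoquota stays authoritative.
# Usage: estimate_chunks <directory>
estimate_chunks() {
  local files
  files=$(find "$1" -type f | wc -l)
  echo $(( files * 4 ))
}

# Estimate per top-level subdirectory, largest first.
# Usage: chunks_by_subdir <directory>
chunks_by_subdir() {
  for d in "$1"/*/; do
    printf '%s %s\n' "$(estimate_chunks "$d")" "$d"
  done | sort -rn
}
```

For example, `estimate_chunks "$(ws_find my_workspace)"` prints an estimate to compare with the limit of 4,000,000 chunks, and `chunks_by_subdir "$(ws_find my_workspace)"` shows where the files are.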

If you are over quota, please start deleting files. Merely releasing a workspace (with ws_release) or letting it expire (by not extending it) does not reduce your quota usage: its files continue to exist for a grace period of several days.
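Since releasing a workspace does not free the quota by itself, a safe order is to delete the content first and then release the workspace, as also recommended in the `ws_release` row of the workspace table. The helper `purge_and_release` below is a hypothetical sketch; it refuses to run if `ws_find` does not return an existing directory, because `find ... -delete` is irreversible.

```bash
#!/bin/bash
# Hypothetical helper: free the quota immediately by deleting the files
# before releasing the workspace.
# Usage: purge_and_release <workspace_name>
purge_and_release() {
  local dir
  dir=$(ws_find "$1") || return 1
  [ -n "$dir" ] && [ -d "$dir" ] || return 1   # refuse to run on an empty or bogus path
  find "$dir" -mindepth 1 -delete               # remove all content, keep the directory
  ws_release "$1"
}
```

Double-check the workspace name before calling `purge_and_release my_workspace`: there is no backup for workspaces.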

Please follow these best practices when using the parallel file system (i.e. workspaces):

- The parallel file system works best with medium-sized to large files; large numbers of very small files significantly reduce performance and should be avoided

- Do not keep temporary files there; use $TMPDIR, the SSD local to the compute node, instead. An additional benefit: it is faster

- There is no backup for workspaces. Final results you cannot afford to lose should go to your home directory or, better yet, be archived outside NEMO
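One way to follow the first two recommendations is to write many small output files to `$TMPDIR` during the job and store them in the workspace as a single compressed archive. The shell sketch below uses standard `tar`; the helper name `archive_to_workspace` and the directory names are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: store a directory full of small files as one
# compressed archive (one large file instead of many small ones).
# Usage: archive_to_workspace <source_dir> <target_dir>
archive_to_workspace() {
  local src=$1      # e.g. "$TMPDIR/results" with many small output files
  local target=$2   # e.g. "$(ws_find my_workspace)"
  tar -czf "$target/$(basename "$src").tar.gz" -C "$(dirname "$src")" "$(basename "$src")"
}
```

In a job script this could be called as `archive_to_workspace "$TMPDIR/results" "$(ws_find my_workspace)"`; to work with the files again, unpack the archive into `$TMPDIR` with `tar -xzf <archive> -C "$TMPDIR"`.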

High Performance Network

All compute nodes are interconnected through the high-performance Omni-Path network, which offers very low latency and 100 Gbit/s throughput. The parallel storage for the workspaces is attached to all cluster nodes via Omni-Path. For non-blocking communication, 17 islands of 44 nodes (880 cores) each are available. The islands are connected with a blocking factor of 1:11 (i.e. 400 Gbit/s per island of 44 nodes).
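The numbers in this paragraph are consistent with each other, as a short calculation shows (assuming one 100 Gbit/s Omni-Path link and 20 cores per node, as for the standard compute nodes):

$$
\begin{aligned}
\text{cores per island} &= 44 \times 20 = 880 \\
\text{nodes in all islands} &= 17 \times 44 = 748 \\
\text{node bandwidth per island} &= 44 \times 100\,\text{Gbit/s} = 4400\,\text{Gbit/s} \\
\text{uplink} : \text{node bandwidth} &= 400\,\text{Gbit/s} : 4400\,\text{Gbit/s} = 1 : 11
\end{aligned}
$$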