Development/General compiler usage

Revisions by K Siegmund (no edit summary) and H Winkhardt (edit summary: "UC3 Betriebsmodell MPI", i.e. UC3 operating model, MPI).

| Line 1: | Line 1: | ||

{| width=600px class="wikitable" |

|||

The basic operations can be performed with the same commands for all available compilers. For advanced usage such as optimization and profiling you should consult the best practice guide of the compiler you intend to use ([[BwHPC_BPG_Compiler#GCC|GCC]], [[BwHPC_BPG_Compiler#Intel Suite|Intel Suite]]). To get a list of the compilers installed on the system execute |

|||

|- |

|||

<pre>$ module avail compiler</pre> |

|||

! Description !! Content |

|||

Both Intel and GCC have compilers for different languages which will be available after the module is loaded. |

|||

|- |

|||

| module load |

|||

| compiler/gnu or compiler/intel or compiler/llvm and others... |

|||

|- |

|||

| License |

|||

| [[Development/Intel_Compiler|Intel]]: Commercial | [[Development/GCC|GNU]]: GPL | LLVM: Apache 2 | PGI/NVIDIA: Commercial |

|||

|} |

|||

= Description = |

|||

Basically, compilers translate human-readable source code (e.g. C++ interpreted as adhering to ISO/IEC 14882:2014, encoded in UTF-8 text) into binary byte code (e.g. x86-64 with Linux ABI in ELF-format). |

|||

Compilers are complex software and have become very powerful in the last decades, to '''guide''' you as a programmer writing better, more portable, more performant programs. Use the compiler as a tool -- and best use multiple compilers on the same source code for best results. |

|||

The basic operations and hints can be performed with the same or similar commands on all available compilers. For advanced usage such as optimization and profiling you should consult the best practice guide of the compiler you intend to use ([[Development/GCC|GCC]], [[Development/Intel_Compiler|Intel Suite]]). |

|||

More information about the MPI versions of the GNU and Intel Compilers is available here: |

|||

* [[Development/Parallel_Programming|Best Practices Guide for Parallel Programming]]. |

|||

= Loading compilers as modules = |

|||

Modules and loading of modules is described in general in [[Environment_Modules|here for traditional Environment Modules]] and about the Lmod implementation of Environment modules in [[Software_Modules_Lmod|here for Lmod]]. |

|||

Modules need to be mentioned, since on any system there's a pre-installed set of compilers (for C, C++ and usually Fortran) -- the so-called system compilers -- provided by the Linux distribution. The system compiler however may lack certain options for optimization, are typically optimizing for older and more architectures (think SSE vs. AVX2 and AVX-512/AVX10.2). Also they may lack useful warnings or other features. On RedHat Enterprise Linux 9.4 this is GNU compiler v11.4.1. |

|||

Be advised to check out the newer compilers available as modules. |

|||

Since Fortran (and very old C++) requires compiling and linking libraries with the very same compiler, many libraries, first-and-foremost the MPI libraries need to be provided for specific versions of a compiler. |

|||

On some clusters, these provided libraries will only be visible to <kbd>module avail</kbd>, once a compiler is loaded. |

|||

Hence, check out loading |

|||

<pre> |

|||

$ module avail compiler/intel |

|||

... |

|||

$ module load compiler/intel/2023.1.0 |

|||

... |

|||

$ module avail |

|||

</pre> |

|||

to see the available MPI modules. |

|||

All vendors whether it's Intel, GNU, LLVM or the Nvidia toolkit (see module group toolkit) have compilers for different programming languages which will be available |

|||

only after loading the module. |

|||

== Linux Default Compiler == |

|||

The default Compiler installed on all compute nodes is the GNU Compiler Collection (GCC) or in short GNU compiler. |

|||

* Don't get distracted with the available compiler modules. |

|||

* Only the modules are loading the complete environments needed. |

|||

<u>Example</u> |

|||

<pre> |

|||

$ module purge # unload all modules |

|||

$ module list # check which modules are loaded, we expect none |

|||

No Modulefiles Currently Loaded. |

|||

$ gcc --version # see version of default Linux GNU compiler |

|||

gcc (GCC) 8.5.0 20210514 (Red Hat 8.5.0-18) |

|||

[...] |

|||

$ module load compiler/gnu # load default GNU compiler module |

|||

$ module list # check which modules are loaded, we expect the default GNU compiler |

|||

Currently Loaded Modulefiles: |

|||

1) compiler/gnu/13.3 |

|||

$ gcc --version # now, check the current (loaded) version of the GNU C compiler |

|||

gcc (GCC) 13.3.0 |

|||

[...] |

|||

</pre> |

|||

= Synoptical Tables = |

|||

== Compilers (no MPI) == |

|||

{| width=600px class="wikitable" |

|||

{| style="width:30%; vertical-align:top;border:1px solid #000000;padding:1px;margin:5px;border-collapse:collapse" border="1" |

|||

|- |

|- |

||

! Compiler Suite |

! Compiler Suite |

||

| Line 9: | Line 75: | ||

! Command |

! Command |

||

|- |

|- |

||

| style="vertical-align:top;" rowspan="3" | <font color=green><big>Intel Composer (pre-OneAPI)</big></font><br><br> [[Development/Intel_Compiler|• Best Practice Guides on Intel Compiler Software]] |

|||

|style="padding:3px" rowspan="3" | Intel Composer |

|||

| C |

|||

|style="padding:3px"|C |

|||

| icc |

|||

|style="padding:3px"| icc |

|||

|- |

|- |

||

| C++ |

|||

|style="padding:3px"|C++ |

|||

| icpc |

|||

|style="padding:3px"|icpc |

|||

|- |

|- |

||

| |

| Fortran |

||

| |

| ifort |

||

|- |

|||

| style="vertical-align:top;" rowspan="3" | <font color=green><big>Intel OneAPI (llvm-based)</big></font><br><br> [[Development/Intel_Compiler|• Best Practice Guides on Intel Compiler Software]] |

|||

| C |

|||

| icx |

|||

|- |

|- |

||

| C++ |

|||

|style="padding:3px" rowspan="3" | GCC |

|||

| icpx |

|||

|style="padding:3px"|C |

|||

|style="padding:3px"|gcc |

|||

|- |

|- |

||

| Fortran |

|||

|style="padding:3px"|C++ |

|||

| ifx |

|||

|style="padding:3px"|g++ |

|||

|- |

|||

| style="vertical-align:top;" rowspan="3" | <font color=green><big>GCC</big></font><br><br> [[Development/GCC|• Best Practice Guides on GNU Compiler Software]] |

|||

| C |

|||

| gcc |

|||

|- |

|- |

||

| C++ |

|||

|style="padding:3px"|Fortran |

|||

| g++ |

|||

|style="padding:3px"| gfortran |

|||

|- |

|||

| Fortran |

|||

| gfortran |

|||

|- |

|||

| style="vertical-align:top;" rowspan="3" | <font color=green><big>LLVM</big></font> |

|||

| C |

|||

| clang |

|||

|- |

|||

| C++ |

|||

| clang++ |

|||

|- |

|||

| Fortran 77/90 |

|||

| flang |

|||

|- |

|||

| style="vertical-align:top;" rowspan="3" | <font color=green><big>PGI/NVIDIA</big></font> |

|||

| C |

|||

| pgcc |

|||

|- |

|||

| C++ |

|||

| pgCC |

|||

|- |

|||

| Fortran 77/90 |

|||

| pgf77 or pgf90 |

|||

|} |

|} |

||

== MPI compiler and Underlying Compilers == |

|||

The following compiler commands work for all the compilers in the list above even though the examples will be for icc only. When ex.c is a C source code file such as |

|||

<source lang=C style="font: normal normal 2em monospace"> |

|||

MPI implementations such as MPIch, Intel-MPI (derived from MPIch) or Open MPI provide compiler wrappers, easing the usage of MPI by providing the Include-Directory <kbd>-I</kbd> and required libraries as well as the implementation's library directory flag <kbd>-L</kbd> for linking. |

|||

#include <stdio.h> |

|||

The following table lists available MPI compiler commands and the underlying compilers, compiler families, languages, and application binary interfaces (ABIs) that they support. |

|||

int main() { |

|||

printf("Hello world\n"); |

|||

{| width=600px class="wikitable" |

|||

return 0; |

|||

|- |

|||

} |

|||

! MPI Compiler Command !! Default Compiler !! Supported Language(s) !! Supported ABI's |

|||

</source> |

|||

|- |

|||

it can be compiled and linked with the single command |

|||

| colspan=4 style="background-color:#DCDCDC;" | Generic Compilers |

|||

<pre>$ icc ex.c -o ex</pre> |

|||

|- |

|||

to produce an executable named ex. |

|||

| mpicc || gcc, cc || C || 32/64 bit |

|||

|- |

|||

| mpicxx || g++ || C/C++ || 32/64 bit |

|||

|- |

|||

| mpifc || gfortran || Fortran77/Fortran 95 || 32/64 bit |

|||

|- |

|||

| colspan=4 style="background-color:#DCDCDC;" | [[Development/GCC|GNU Compiler]] Versions 3 and higher |

|||

|- |

|||

| mpigcc || gcc || C || 32/64 bit |

|||

|- |

|||

| mpigxx || g++ || C/C++ || 32/64 bit |

|||

|- |

|||

| mpif77 || g77 || Fortran 77 || 32/64 bit |

|||

|- |

|||

| mpif90 || gfortran || Fortran 95 || 32/64 bit |

|||

|- |

|||

| colspan=4 style="background-color:#DCDCDC;" | [[Development/Intel_Compiler|Intel Fortran, C++ Compilers]] Versions 13.1 through 14.0 and Higher |

|||

|- |

|||

| mpiicc || icc || C || 32/64 bit |

|||

|- |

|||

| mpiicpc || icpc || C++ || 32/64 bit |

|||

|- |

|||

|impiifort || ifort || Fortran77/Fortran 95 || 32/64 bit |

|||

|- |

|||

|} |

|||

= How to use = |

|||

The following compiler commands work for all the compilers in the list above even though |

|||

the examples will be for '''icc''' only. |

|||

== Commands == |

|||

The typical introduction is a "Hello World" program. The following C source code shows best practices: |

|||

<source lang="c"> |

|||

#include <stdio.h> // for printf |

|||

#include <stdlib.h> // for EXIT_SUCCESS and EXIT_FAILURE |

|||

int main (int argc, char * argv[]) { // std. definition of a program taking arguments |

|||

printf("Hello World\n"); // Unix Output is line-buffered, end line with New-line. |

|||

return EXIT_SUCCESS; // End program by returning 0 (No Error) |

|||

}</source> |

|||

After loading the Intel Compiler module using <kbd>module load compiler/intel/</kbd>, the source may be compiled and linked with the single command |

|||

<pre>$ icc hello.c -o hello</pre> |

|||

to produce an executable named <kbd>hello</kbd>. This may be executed using the command <kbd>./hello</kbd>. |

|||

This process can be divided into two steps: |

This process can be divided into two steps: |

||

<pre> |

<pre> |

||

$ icc -c hello.c # Compile .c File into object file (ending in .o) |

|||

$ icc -c ex.c |

|||

$ icc hello.o -o hello # Link the object file with the system libc library) |

|||

$ icc ex.o -o ex |

|||

</pre> |

</pre> |

||

When using libraries you must sometimes specify |

When using libraries you must sometimes specify the directories where the |

||

* include files (option <kbd>-I</kbd>) and where the |

|||

* library files (option <kbd>-L</kbd>) are located. |

|||

In addition you have to tell the compiler which |

|||

* library you want to link to (option <kbd>-l</kbd>). |

|||

For example after <kbd>module load numlib/fftw</kbd> you can compile code for fftw using |

|||

<pre> |

|||

$ icc -c hello.c -I$FFTW_INC_DIR |

|||

$ icc hello.o -o hello -L$FFTW_LIB_DIR -lfftw3 |

|||

</pre> |

|||

When the program crashes or doesn't produce the expected output the compiler can |

|||

help you by printing all warning messages <kbd>-Wall</kbd> and adding flags for debugging <kbd>-g</kbd>: |

|||

<pre>$ icc -Wall -g hello.c -o hello</pre> |

|||

== Debugger == |

|||

If the problem can't be solved this way you can inspect what exactly your program |

|||

does using a debugger, e.g. [[Development/GDB|GDB]]. |

|||

<font color=green>To use the debugger properly with your program you have to compile it with debug information (option <kbd>-g</kbd>)</font>: |

|||

<u>Example</u> |

|||

<pre>$ icc -g hello.c -o hello</pre> |

|||

Although the compiler option <kbd>-Wall</kbd> (and possibly others) should always be set, the <kbd>-g</kbd> option should only be passed for |

|||

debugging purposes to find bugs. |

|||

It may slow down execution and enlarges the binary due to debugging symbols. |

|||

== Optimization == |

|||

The usual and common way to compile your source is to apply compiler optimization. |

|||

Since there are many optimization options, as a start for now the <font color=green>optimization level -O2</font> is recommended: |

|||

<pre>$ icc -O2 hello.c -o hello</pre> |

|||

<font color=red>Beware:</font> The optimization-flag used is a capital-O (like Otto) and not a 0 (Zero)! |

|||

All compilers offer a multitude of optimization options, |

|||

one may check the complete list of options with short explanation on [[Development/GCC|GCC]], [[LLVM|LLVM]] and |

|||

[[Development/Intel_Compiler|Intel Suite]] using option '''-v''' '''--help''': |

|||

<pre> |

|||

$ icc -v --help | less |

|||

$ gcc -v --help | less |

|||

$ clang -v --help | less |

|||

</pre> |

|||

Please note, that the optimization level <kbd>-O2</kbd> produces code for a general instruction set. |

|||

If you want to set the instruction set available, and take advantage of AVX2 or AVX512f/AVX10.2, you have to |

|||

either add the machine-dependent <kbd>-mavx512f</kbd> or set the specific architecture of your |

|||

target processor. |

|||

For [[BwUniCluster_2.0]] this depends on whether you run your application on any node, then you would select |

|||

the older Broadwell CPU, or whether You target the newer HPC nodes (which feature Xeon Gold 6230, aka "Cascade Lake" |

|||

architecture). |

|||

<pre> |

|||

$ gcc -O2 -o hello hello.c # General optimization for any architecture |

|||

$ gcc -O2 -march=broadwell -o hello hello.c # Will work on any compute node on bwUniCluster 2.0 |

|||

$ gcc -O2 -march=cascadelake -o hello hello.c # This may not run on Broadwell nodes |

|||

</pre> |

|||

While adding <kbd>-march=broadwell</kbd> adds the compiler options such as <kbd>-mavx -mavx2 -msse3 -msse4 -msse4.1 -msse4.2 -mssse3</kbd>, |

|||

adding <kbd>-march=cascadelake</kbd> will further this by <kbd>-mavx512bw -mavx512cd -mavx512dq -mavx512f -mavx512vl -mavx512vnni -mfma</kbd>, |

|||

where <kbd>-mfma</kbd> is the setting for allowing fused-multiply-add. |

|||

These options may provide considerable speed-up to your code as is. |

|||

'''Please note''' however, that Cascade Lake may throttle the processor's clock speed, when executing AVX-512 instructions, possibly running slower than |

|||

(older) AVX2 code paths would have. |

|||

You should then pay attention to vectorization attained by the compiler -- and concentrate on the time-consuming loops, |

|||

where the compiler was not able to vectorize. |

|||

Further vectorization as described in the Best Practice Guides may help. |

|||

This information is available with the Intel compiler using <kbd>-qopt-report=5</kbd> producing a lot of output in <kbd>hello.optrpt</kbd>, |

|||

while GCC offers this information using <kbd>-fopt-info-all</kbd> |

|||

For GCC the options in use are best visible by calling <kbd>gcc -O2 -fverbose-asm -S -o hello.S hello.c</kbd>. |

|||

The option <kbd>-fverbose-asm</kbd> stores all the options in the assembler file <kbd>hello.S</kbd>. |

|||

== Warnings and Error detection == |

|||

All compilers have improved tremendously with regards to analyzing and detecting suspicious code: do make '''use''' of such warnings and hints. |

|||

The amount of false positives has reduced and it will make your code more accessible, less error-prone and more portable. |

|||

The typical warning flags are <kbd>-Wall</kbd> to turn on ''all'' warnings. |

|||

However, there's multiple other worthwhile warnings, which are not covered (since they might increase false positives, or since they are not yet considered so prominent). |

|||

E.g. <kbd>-Wextra</kbd> turns on several other warnings, which will in the above example show that neither <kbd>argc</kbd> nor <kbd>argv</kbd> have been used inside of <kbd>main</kbd>. |

|||

For LLVM's <kbd>clang</kbd> the flag <kbd>-Weverything</kbd> turns on all available warnings, albeit leading to many warnings (even false positives) on larger projects. |

|||

However, the fix-it hints are very helpful as well. |

|||

All the compilers offer the flag <kbd>-Werror</kbd> which turns any warning (allowing completion of compilation) into hard errors. |

|||

<br> |

|||

<br> |

|||

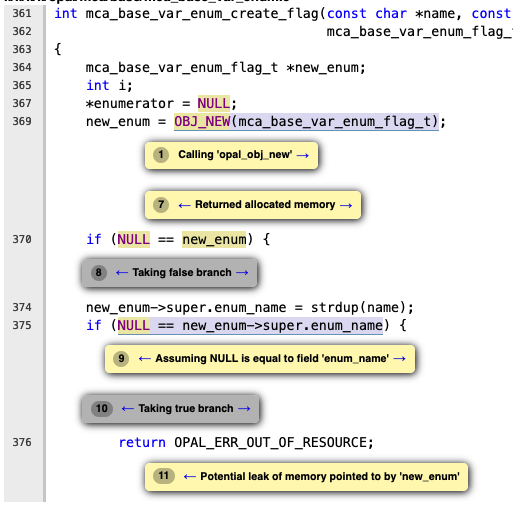

[[File:static_code_analysis.png|right|border|513px|Copyright: HS Esslingen)]] |

|||

Another powerful feature available in GNU- and LLVM-based compilers is '''static code analysis''', otherwise only available in Commercial tools, like [https://www.synopsys.com/software-integrity/security-testing/static-analysis-sast.html Coverity]. |

|||

Static code analysis evaluates '''each''' and '''every''' code path, making assumptions on input values and branches taken, detecting corner cases which might lead to real errors -- without having to actually execute this code path. |

|||

For GCC this is turned on using <kbd>-fanalyzer</kbd> which will detect e.g. cases of memory usage after a <kbd>free()</kbd> of said memory and many others. [https://gcc.gnu.org/onlinedocs/gcc/Static-Analyzer-Options.html#Static-Analyzer-Options GCC's documentation] on Static Analysis provides further details. |

|||

For LLVM recompile your project using <kbd>scan-build</kbd>, e.g.: |

|||

<pre> |

<pre> |

||

$ scan-build make |

|||

$ icc -c ex.c -I$FFTW_INC_DIR |

|||

$ icc ex.o -o ex -L$FFTW_LIB_DIR -lfftw3 |

|||

</pre> |

</pre> |

||

This produces warnings on <kbd>stdout</kbd>, but more importantly scan reports in directory <kbd>/scratch/scan-build-XXX</kbd>, where XXX is date and time of the build. |

|||

When the program crashes or doesn't produce the expected output the compiler can help you by printing warning messages: |

|||

For example the output of Open MPI includes real issues of missed memory releases in error code paths -- as shown in the following picture. |

|||

<pre>$ icc -Wall ex.c -o ex</pre> |

|||

If the problem can't be solved this way you can inspect what exactly your program does using a debugger. To use the debugger properly with your program you have to compile it with debug information (option -g): |

|||

<pre>$ icc -g ex.c -o ex</pre> |

|||

Although -Wall should always be set, the -g option should only be stated when you want to find bugs because it slows down the program and makes it larger. |

|||

[[Category:Compiler_software]] |

|||

Latest revision as of 09:46, 14 April 2025

| Description | Content |
|---|---|
| module load | compiler/gnu or compiler/intel or compiler/llvm and others |
| License | Intel: Commercial; GNU: GPL; LLVM: Apache 2; PGI/NVIDIA: Commercial |

Description

Basically, compilers translate human-readable source code (e.g. C++ adhering to ISO/IEC 14882:2014, encoded as UTF-8 text) into binary machine code (e.g. x86-64 instructions following the Linux ABI, in ELF format). Compilers are complex software and have become very powerful over the last decades. Use them as a tool that guides you to write better, more portable and faster programs. For best results, compile the same source code with several compilers.

The basic operations and hints work with the same or similar commands on all available compilers. For advanced usage such as optimization and profiling, consult the best practice guide of the compiler you intend to use (GCC, Intel Suite).

More information about the MPI versions of the GNU and Intel compilers is available in the Best Practices Guide for Parallel Programming (Development/Parallel_Programming).

Loading compilers as modules

Modules and how to load them are described in general on the pages Environment_Modules (traditional Environment Modules) and Software_Modules_Lmod (the Lmod implementation of Environment Modules).

Modules matter because every system comes with a pre-installed set of compilers (for C, C++ and usually Fortran), the so-called system compilers, provided by the Linux distribution. These system compilers, however, may lack certain optimization options. They typically optimize for older, more generic architectures (think SSE vs. AVX2 and AVX-512/AVX10.2) and may also lack useful warnings or other features. On Red Hat Enterprise Linux 9.4, the system compiler is GCC 11.4.1. It is therefore advisable to check out the newer compilers available as modules.

Fortran (and very old C++) code must be compiled and linked against libraries built with the very same compiler. For this reason, many libraries, first and foremost the MPI libraries, are provided for specific compiler versions. On some clusters, these libraries only become visible to module avail once a compiler module is loaded. Hence, try loading

$ module avail compiler/intel
...
$ module load compiler/intel/2023.1.0
...
$ module avail

to see the available MPI modules.

All vendors, whether Intel, GNU, LLVM or the NVIDIA toolkit (see the module group toolkit), provide compilers for different programming languages. These become available only after loading the corresponding module.

Linux Default Compiler

The default compiler installed on all compute nodes is the GNU Compiler Collection (GCC), in short the GNU compiler.

- Do not confuse it with the available compiler modules.
- Only the modules set up the complete environment needed.

Example

$ module purge               # unload all modules
$ module list                # check which modules are loaded; we expect none
No Modulefiles Currently Loaded.
$ gcc --version              # show the version of the default Linux GNU compiler
gcc (GCC) 8.5.0 20210514 (Red Hat 8.5.0-18)
[...]
$ module load compiler/gnu   # load the default GNU compiler module
$ module list                # check which modules are loaded; we expect the default GNU compiler
Currently Loaded Modulefiles:
  1) compiler/gnu/13.3
$ gcc --version              # now check the version of the loaded GNU C compiler
gcc (GCC) 13.3.0
[...]
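To double-check from within a program which compiler actually built it (e.g. after switching modules), one can evaluate the compilers' predefined macros. The following is a minimal sketch. The function name compiler_id is hypothetical. It assumes the documented macros of GCC (__GNUC__), clang (__clang__) and the Intel compilers (__INTEL_COMPILER for the classic icc, __INTEL_LLVM_COMPILER for icx). The order of the checks matters: clang and the Intel compilers also define __GNUC__ for compatibility, and icx also defines __clang__.

```c
#include <stdio.h>

/* Hypothetical helper: writes a short description of the compiler that
   built this translation unit into buf and returns buf. */
const char *compiler_id(char *buf, size_t len)
{
#if defined(__INTEL_LLVM_COMPILER)      /* Intel oneAPI icx/icpx (also defines __clang__) */
    snprintf(buf, len, "Intel oneAPI %d", __INTEL_LLVM_COMPILER);
#elif defined(__INTEL_COMPILER)         /* classic Intel icc/icpc (also defines __GNUC__) */
    snprintf(buf, len, "Intel classic %d", __INTEL_COMPILER);
#elif defined(__clang__)                /* LLVM clang (also defines __GNUC__) */
    snprintf(buf, len, "clang %d.%d.%d",
             __clang_major__, __clang_minor__, __clang_patchlevel__);
#elif defined(__GNUC__)                 /* GNU gcc */
    snprintf(buf, len, "gcc %d.%d.%d",
             __GNUC__, __GNUC_MINOR__, __GNUC_PATCHLEVEL__);
#else
    snprintf(buf, len, "unknown compiler");
#endif
    return buf;
}
```

Printing the result in main, e.g. with printf("%s\n", compiler_id(buf, sizeof buf)), once built with the system gcc and once after module load compiler/gnu, should report the two different versions seen in the session above.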

Synoptical Tables

Compilers (no MPI)

| Compiler Suite | Language | Command |
|---|---|---|
| Intel Composer (pre-oneAPI); see Best Practice Guides on Intel Compiler Software | C | icc |
| | C++ | icpc |
| | Fortran | ifort |
| Intel oneAPI (LLVM-based); see Best Practice Guides on Intel Compiler Software | C | icx |
| | C++ | icpx |
| | Fortran | ifx |
| GCC; see Best Practice Guides on GNU Compiler Software | C | gcc |
| | C++ | g++ |
| | Fortran | gfortran |
| LLVM | C | clang |
| | C++ | clang++ |
| | Fortran 77/90 | flang |
| PGI/NVIDIA | C | pgcc |
| | C++ | pgCC |
| | Fortran 77/90 | pgf77 or pgf90 |

MPI compiler and Underlying Compilers

MPI implementations such as MPICH, Intel MPI (derived from MPICH) or Open MPI provide compiler wrappers that ease the use of MPI. The wrappers add the include directory (-I), the implementation's library directory (-L) and the required libraries for linking. The following table lists the available MPI compiler commands and the underlying compilers, compiler families, languages and application binary interfaces (ABIs) they support.

| MPI Compiler Command | Default Compiler | Supported Language(s) | Supported ABIs |
|---|---|---|---|
| Generic compilers | | | |
| mpicc | gcc, cc | C | 32/64 bit |
| mpicxx | g++ | C/C++ | 32/64 bit |
| mpifc | gfortran | Fortran 77/Fortran 95 | 32/64 bit |
| GNU Compiler versions 3 and higher | | | |
| mpigcc | gcc | C | 32/64 bit |
| mpigxx | g++ | C/C++ | 32/64 bit |
| mpif77 | g77 | Fortran 77 | 32/64 bit |
| mpif90 | gfortran | Fortran 95 | 32/64 bit |
| Intel Fortran, C++ Compilers versions 13.1 and higher | | | |
| mpiicc | icc | C | 32/64 bit |
| mpiicpc | icpc | C++ | 32/64 bit |
| mpiifort | ifort | Fortran 77/Fortran 95 | 32/64 bit |

How to use

The following commands work with all compilers in the list above, even though the examples use icc only.

Commands

The typical introduction is a "Hello World" program. The following C source code shows best practices:

#include <stdio.h>    // for printf
#include <stdlib.h>   // for EXIT_SUCCESS and EXIT_FAILURE

int main (int argc, char * argv[]) {   // standard definition of a program taking arguments
    printf("Hello World\n");           // stdout is line-buffered on a terminal: end the line with a newline
    return EXIT_SUCCESS;               // end the program successfully (exit status 0, no error)
}

After loading the Intel compiler module with module load compiler/intel/, the source code above, saved as hello.c, can be compiled and linked with the single command

$ icc hello.c -o hello

to produce an executable named hello. This may be executed using the command ./hello.

This process can be divided into two steps:

$ icc -c hello.c          # compile hello.c into the object file hello.o
$ icc hello.o -o hello    # link hello.o with the system C library (libc) into the executable hello

When using libraries, you must sometimes tell the compiler where

- the include files are located (option -I) and
- the library files are located (option -L).

In addition, you have to tell the compiler

- which libraries to link against (option -l).

For example, after module load numlib/fftw you can compile and link code using FFTW with

$ icc -c hello.c -I$FFTW_INC_DIR
$ icc hello.o -o hello -L$FFTW_LIB_DIR -lfftw3

If the program crashes or does not produce the expected output, the compiler can help you: -Wall enables warning messages and -g adds debugging information:

$ icc -Wall -g hello.c -o hello
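As an illustration of what -Wall catches: with GCC and clang, it includes -Wformat, which checks printf-style format strings against their arguments. A typical finding is printing a size_t with %d, where the matching C99 conversion is %zu. The helper format_count below is a hypothetical minimal sketch showing the corrected form.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper: writes "count=<n>" into buf and returns the length.
   Using "%d" for the size_t argument would trigger a -Wformat warning
   (enabled by -Wall); "%zu" is the matching conversion for size_t. */
int format_count(char *buf, size_t len, size_t count)
{
    return snprintf(buf, len, "count=%zu", count);
}
```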

Debugger

If the problem cannot be solved this way, you can inspect what exactly your program does using a debugger, e.g. GDB.

To use the debugger properly with your program you have to compile it with debug information (option -g):

Example

$ icc -g hello.c -o hello

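Although the compiler option -Wall (and possibly others) should always be set, the -g option should only be passed for debugging purposes. With the classic Intel compilers, -g lowers the default optimization level to -O0 unless an -O option is given explicitly, which may slow down execution. With any compiler, the debugging symbols enlarge the binary.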

Optimization

Usually, you compile your source code with compiler optimization enabled.

Since there are many optimization options, the optimization level -O2 is a recommended starting point:

$ icc -O2 hello.c -o hello

Beware: The optimization-flag used is a capital-O (like Otto) and not a 0 (Zero)!

All compilers offer a multitude of optimization options. The complete list of options, with short explanations, can be printed for GCC, LLVM and the Intel Suite using the options -v --help:

$ icc -v --help | less
$ gcc -v --help | less
$ clang -v --help | less

Please note that the optimization level -O2 produces code for a generic baseline instruction set. To use a specific instruction set and take advantage of AVX2 or AVX-512F/AVX10.2, you have to either add machine-dependent options such as -mavx512f or set the specific architecture of your target processor.

On bwUniCluster 2.0, the right choice depends on where your application runs. If it should run on any node, select the older Broadwell CPU. If you target the newer HPC nodes (which feature the Xeon Gold 6230, aka "Cascade Lake" architecture), select that architecture instead.

$ gcc -O2 -o hello hello.c                      # general optimization for any architecture
$ gcc -O2 -march=broadwell -o hello hello.c     # will work on any compute node of bwUniCluster 2.0
$ gcc -O2 -march=cascadelake -o hello hello.c   # may not run on Broadwell nodes

The option -march=broadwell enables instruction-set options such as -mavx -mavx2 -mfma -msse3 -msse4 -msse4.1 -msse4.2 -mssse3, where -mfma allows fused multiply-add instructions. The option -march=cascadelake additionally enables -mavx512bw -mavx512cd -mavx512dq -mavx512f -mavx512vl -mavx512vnni. These options may provide a considerable speed-up to your code as is.

Please note, however, that Cascade Lake may lower the processor's clock speed when executing AVX-512 instructions. Such code may therefore run slower than (older) AVX2 code paths would have.
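A binary built with -march=cascadelake may stop with an "illegal instruction" error on Broadwell nodes. It can therefore help to check both what the binary was compiled for and what the running CPU supports. The following is a minimal sketch, assuming GCC or an LLVM-based compiler (clang, icx) on x86-64. The -march option sets predefined macros such as __AVX2__ and __AVX512F__, and __builtin_cpu_supports() queries the running CPU. The function names compiled_isa and cpu_has_avx512f are hypothetical.

```c
/* Hypothetical helpers for GCC/clang-compatible compilers. */

/* Widest vector extension this translation unit was compiled for,
   as selected by -march (or by -mavx2, -mavx512f, ...). */
const char *compiled_isa(void)
{
#if defined(__AVX512F__)
    return "AVX-512F";
#elif defined(__AVX2__)
    return "AVX2";
#elif defined(__SSE2__)
    return "SSE2";
#else
    return "baseline (no SSE2 macro defined)";
#endif
}

/* Returns nonzero if the CPU running the program supports AVX-512F. */
int cpu_has_avx512f(void)
{
#if (defined(__GNUC__) || defined(__clang__)) && (defined(__x86_64__) || defined(__i386__))
    __builtin_cpu_init();   /* strictly needed only when called before constructors run */
    return __builtin_cpu_supports("avx512f");
#else
    return 0;               /* other compilers or architectures: conservatively report "no" */
#endif
}
```

A program could, for example, compare both results early in main and print a clear error message instead of failing later.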

Next, pay attention to the vectorization achieved by the compiler. Concentrate on time-consuming loops that the compiler was not able to vectorize; the further vectorization techniques described in the Best Practice Guides may help there. The Intel compiler reports this information with -qopt-report=5, producing a lot of output in hello.optrpt. GCC offers it with -fopt-info-all (or, restricted to vectorization, -fopt-info-vec-all).
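To see what such reports look like, one can compile a small file with two contrasting loops, e.g. gcc -O2 -march=broadwell -fopt-info-vec-all -c loops.c. The file and function names are hypothetical, and the exact report wording depends on the compiler version. Note that GCC enables loop vectorization at -O2 only from version 12 on (with a conservative cost model); with older versions such as the system GCC 11, use -O3 or add -ftree-vectorize.

In the sketch below, the first loop has independent iterations and restrict-qualified pointers, which makes it a typical vectorization candidate. The second loop carries a dependency from one iteration to the next (a running sum), which compilers typically report as not vectorized.

```c
#include <stddef.h>

/* y[i] += a * x[i]: iterations are independent, and restrict promises that
   x and y do not overlap, so the compiler is free to vectorize this loop. */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y)
{
    for (size_t i = 0; i < n; ++i)
        y[i] += a * x[i];
}

/* Running (prefix) sum: each iteration needs the result of the previous one.
   This loop-carried dependency usually prevents straightforward vectorization. */
void prefix_sum(size_t n, float *v)
{
    for (size_t i = 1; i < n; ++i)
        v[i] += v[i - 1];
}
```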

For GCC, the options actually in effect are best seen by calling gcc -O2 -fverbose-asm -S -o hello.S hello.c. The option -fverbose-asm writes all options in use as comments into the assembler file hello.S.

Warnings and Error detection

All compilers have improved tremendously at analyzing and detecting suspicious code: do make use of their warnings and hints. The number of false positives has decreased, and heeding the warnings makes your code more readable, less error-prone and more portable.

The typical warning flag is -Wall, which, despite its name, turns on many but not all warnings. There are multiple other worthwhile warnings it does not cover, either because they might increase false positives or because they are not yet considered prominent enough. For example, -Wextra (used together with -Wall) turns on several further warnings. In the Hello World example above, these show that neither argc nor argv is used inside main.
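The sketch below shows two typical -Wextra findings and their fixes; the function names are hypothetical. With GCC, -Wall -Wextra reports unused parameters (-Wunused-parameter). In C, -Wextra also enables -Wsign-compare, which flags comparing an int loop counter against a size_t bound. Using size_t for the counter and casting an intentionally unused parameter to void silences both.

```c
#include <stddef.h>

/* Counts positive entries. The loop index has the same unsigned type as n;
   "for (int i = 0; i < n; ++i)" would trigger -Wsign-compare under -Wextra. */
size_t count_positive(const int *a, size_t n)
{
    size_t count = 0;
    for (size_t i = 0; i < n; ++i)
        if (a[i] > 0)
            ++count;
    return count;
}

/* A callback-style signature that must accept a parameter it does not need:
   the (void) cast documents this and silences -Wunused-parameter. */
int ignore_context(void *context)
{
    (void)context;
    return 0;
}
```

For the Hello World program itself, declaring int main(void) is the simplest fix when no command-line arguments are needed.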

For LLVM's clang, the flag -Weverything turns on all available warnings, albeit producing many warnings (including false positives) on larger projects. clang's fix-it hints, which suggest concrete source changes, are very helpful as well.

All compilers offer the flag -Werror, which turns warnings (which otherwise still allow compilation to complete) into hard errors.

Another powerful feature of GNU- and LLVM-based compilers is static code analysis, which is otherwise mostly found in commercial tools like Coverity. Static code analysis explores each and every code path, making assumptions about input values and branches taken. It detects corner cases that might lead to real errors without having to actually execute the code path.

For GCC (version 10 and later), static analysis is turned on with -fanalyzer. It detects, for example, use of memory after it has been released with free(), among many other issues. GCC's documentation on Static Analysis provides further details.
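A classic defect that such analyzers find is memory that is not released on an error path. The sketch below shows the pattern that avoids it: every early return releases what was already allocated. The function alloc_pair is hypothetical. If the free(a) line were removed, gcc -fanalyzer would typically report a leak of a on that path (warning -Wanalyzer-malloc-leak).

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical helper: allocates two arrays of n doubles.
   Returns 0 on success; on failure returns -1 and leaves nothing allocated. */
int alloc_pair(size_t n, double **out_a, double **out_b)
{
    double *a = malloc(n * sizeof *a);
    if (a == NULL)
        return -1;

    double *b = malloc(n * sizeof *b);
    if (b == NULL) {
        free(a);   /* without this, a would leak on this error path */
        return -1;
    }

    *out_a = a;    /* ownership passes to the caller, who must free both */
    *out_b = b;
    return 0;
}
```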

For LLVM, rebuild your project under scan-build, e.g.:

$ scan-build make

This prints warnings on stdout but, more importantly, writes scan reports to the directory /scratch/scan-build-XXX, where XXX is the date and time of the build. For example, the report for Open MPI reveals real issues of memory not released in error code paths, as shown in the following picture.

[Figure: static code analysis report (Copyright: HS Esslingen)]