Scaling: Difference between revisions

Revision as of 16:19, 8 May 2023

Introduction

Before you submit large production runs on a bwHPC cluster, you should determine the optimal amount of resources for your compute job. Poor job efficiency means that hardware resources are wasted: a similar overall result could have been achieved with fewer resources, leaving the rest for other jobs and reducing the queue wait time for all users.

The main advantage of today's compute clusters is that they can perform calculations in parallel. Whether and how well your code can be parallelized is of fundamental importance for achieving good job efficiency and performance on an HPC cluster. A scaling analysis identifies the number of resources (such as the number of cores, nodes, or GPUs) that gives the best performance for a given compute job.

Reasons for poor job efficiency

Some simple causes for poor overall job efficiency are:

- Poor choice of resources compared to the size of the nodes leaves part of the node blocked, but doing nothing:

  - The number of cores on a node (e.g. 48) is not a multiple of --ntasks-per-node

  - Too much (unneeded) memory or disk space requested

  - More cores requested than are actually used by the job

- More cores used for a single MPI/OpenMP parallel computation than is useful

- Many small jobs with a short runtime (seconds in extreme cases)

- One-core jobs with very different run-times (because of single-user policy)
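The first cause can be checked with simple arithmetic before submitting. The following is a minimal sketch, assuming that identical per-node requests are packed onto a node until no further request fits; the function name `leftover_cores` is hypothetical and not part of Slurm or any bwHPC tool.

```python
def leftover_cores(cores_per_node: int, cores_requested_per_node: int) -> int:
    """Cores left idle on a node after packing as many identical requests as fit.

    Assumption: requests of the same size are packed onto one node until the
    next one no longer fits. Real scheduling policies may differ.
    """
    if cores_requested_per_node <= 0:
        raise ValueError("cores_requested_per_node must be positive")
    if cores_requested_per_node > cores_per_node:
        raise ValueError("request does not fit on a single node")
    return cores_per_node % cores_requested_per_node


# On a 48-core node, requests of 20 cores leave 8 cores blocked but idle,
# while requests of 24 cores fill the node completely.
print(leftover_cores(48, 20))  # 8
print(leftover_cores(48, 24))  # 0
```

A result of 0 means that some multiple of the request matches the node size, so no cores are blocked by this particular effect.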

Time to Finish: Considering Resources vs. Queue Time

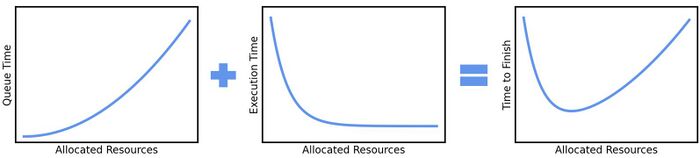

When a job is submitted to the Slurm scheduler, the job first waits in the queue before being executed on the compute nodes. The amount of time spent in the queue is called the queue time. The amount of time it takes for the job to run on the compute nodes is called the execution time.

The figure shows that the queue time increases with increasing resources (e.g., CPU cores), while the execution time decreases with increasing resources. One should try to find the set of resources that minimizes the "time to solution", which is the sum of the queue time and the execution time. A simple rule is to choose the smallest set of resources that gives a reasonable speed-up over the baseline case.
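The idea of minimizing the time to solution can be sketched in a few lines. This is only an illustration: the queue and execution times below are hypothetical placeholder values, not measurements, and in practice the queue time can only be estimated roughly. The function name `best_core_count` is likewise an assumption.

```python
def best_core_count(estimates: dict[int, tuple[float, float]]) -> int:
    """Return the core count with the smallest time to solution.

    estimates maps a core count to (queue_hours, execution_hours).
    Time to solution is the sum of both values.
    """
    return min(estimates, key=lambda cores: sum(estimates[cores]))


# Hypothetical estimates: queue time grows, execution time shrinks with more cores.
hypothetical = {
    24: (1.0, 40.0),   # time to solution: 41 h
    48: (2.0, 22.0),   # 24 h
    96: (6.0, 14.0),   # 20 h
    192: (20.0, 10.0), # 30 h
}
print(best_core_count(hypothetical))  # 96
```

With these made-up numbers, 192 cores give the shortest execution time but not the shortest time to solution, because the longer queue time outweighs the gain.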

Scalability

When you run a parallel program, the problem has to be cut into several independent pieces. For some problems this is easier than for others, but in every case it produces an overhead: time is needed to divide the problem, distribute the parts to tasks, and stitch the results together.

If you had an unlimited number of calculations to run, running each problem on a single core would be the most efficient way to use the hardware, because no parallelization overhead is incurred. In extreme cases, when the problem is very hard to divide, using more compute cores can even make the job finish later.

For real calculations, it is often impractical to wait for calculations to finish if they are done on a single core. Typical calculation times for a job should stay under 2 days, or up to 2 weeks for jobs that cannot use more cores efficiently. Beyond that, risks such as node failures, cluster downtimes due to maintenance, and getting (possibly wrong) results only after a long wait become too much of a problem. So how do you decide how many cores to use for a single problem?

For this, we want to compare the time needed to solve the problem on 1 core with the time needed on N cores. Example:

Calculating on 1 core would take 1000 hours. Without any overhead from parallelization, the same calculation run on 96 cores would need 1000/96 ≈ 10 hours. More realistically, such a calculation would need ~30 hours.

We can define a speedup S:

S(N) = T(1) / T(N) (perfect scaling: S(N) = N)

T(x): time to compute your problem

N: number of cores the calculation is done on

and an efficiency E:

E(N) = S(N) / N (ideally, E(N) = 1)

or combined

E(N) = T(1)/(T(N)*N)

E is the fraction (0.00-1.00) of cores that effectively contribute to the speedup. The other cores are, in effect, busy with the overhead calculations needed to run the problem in parallel.
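The two definitions translate directly into code. The sketch below uses the numbers from the example above (1000 hours on 1 core, about 30 hours on 96 cores); the function names `speedup` and `efficiency` are chosen here for illustration only.

```python
def speedup(t1: float, tn: float) -> float:
    """S(N) = T(1) / T(N)."""
    return t1 / tn


def efficiency(t1: float, tn: float, n: int) -> float:
    """E(N) = S(N) / N = T(1) / (T(N) * N)."""
    return t1 / (tn * n)


t1 = 1000.0   # hours on 1 core (from the example)
t96 = 30.0    # hours on 96 cores (the "realistic" value from the example)

print(round(speedup(t1, t96), 1))         # 33.3
print(round(efficiency(t1, t96, 96), 2))  # 0.35
```

Perfect scaling would give a speedup of 96 here; the measured speedup of about 33 corresponds to roughly a third of that.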

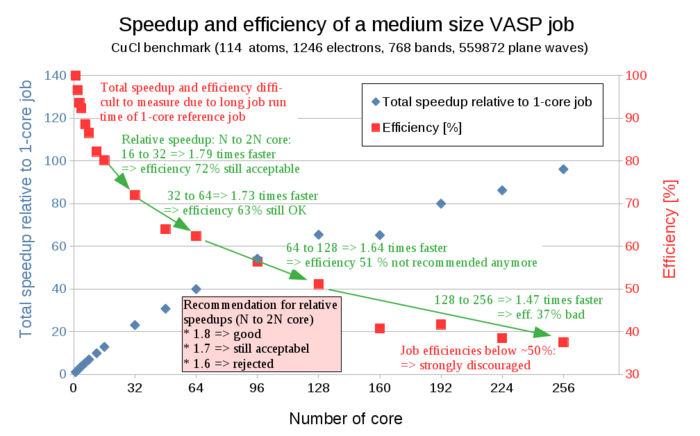

In this example, we have E(96) = 1000/(30*96) = 0.35. So 35% of the cores are used to solve your problem, and 65% of the cores are used for parallelization overhead. As a semi-arbitrary cut-off, we can say that a job scales well if it wastes 50% of the computation or less on parallelization overhead.

As we can see in the example, measuring T(1) would take 42 days and is hence not practical. How do we determine how many cores to use if running the calculation on one core takes "forever"? As we cannot compare with T(1), all we can do is compare the relative speedup R(N1, 2*N1) obtained by doubling the number of cores used, for example R(4,8) = T(4)/T(8).
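The relative speedup for each doubling can be computed from a handful of short test runs. The following sketch assumes run times are available for a series of core counts; the timings are hypothetical placeholders, and the function name `relative_speedups` is not taken from any existing tool.

```python
def relative_speedups(timings: dict[int, float]) -> dict[tuple[int, int], float]:
    """Return R(N, 2N) = T(N) / T(2N) for every N where both run times exist."""
    return {
        (n, 2 * n): timings[n] / timings[2 * n]
        for n in sorted(timings)
        if 2 * n in timings
    }


# Hypothetical run times in hours for 4, 8, 16 and 32 cores.
hypothetical_timings = {4: 100.0, 8: 52.0, 16: 29.0, 32: 20.0}

for (n, n2), r in relative_speedups(hypothetical_timings).items():
    print(f"R({n},{n2}) = {r:.2f}")
```

With these made-up values, R falls with each further doubling, which is the typical pattern once parallelization overhead starts to dominate.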

As a rough guideline, an R of 1.8 for doubling the number of cores is good, and an R of 1.7 is still acceptable. Avoid R below 1.7 if you have many jobs to run.

Overall, this leads us to the following rules of thumb or recipe for determining the number of cores to use:

(1) Optimizing resource usage is most relevant when you submit many jobs. If you submit only one or two jobs, simply try to use a reasonable core number and you are done.

(2) If you plan to submit many jobs, verify that the core number is acceptable. If the jobs use N cores (e.g. N is 96 for a two-node job), then run the same job with N/2 cores (in this example 48 cores).

(3) To calculate the speedup, you then divide the (longer) run time of the N/2-core-job by the (shorter) run time of the N-core-job. Typically the speedup is a number between 1.0 (no speedup at all) and 2.0 (perfect speedup - all additional cores speed up the job).

(4a) IF the speedup is better than a factor of 1.7, THEN using N cores is perfectly fine.

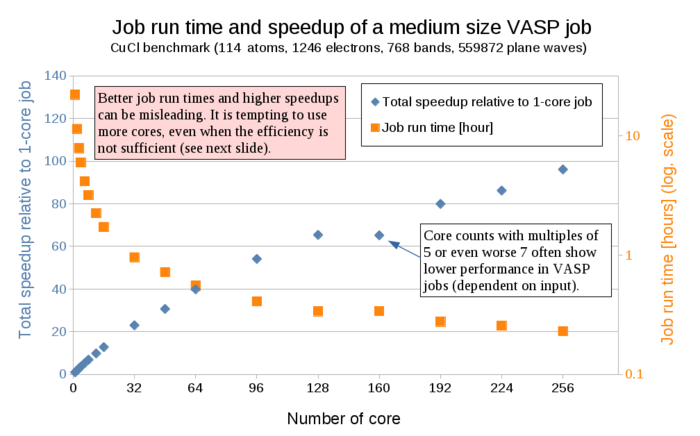

(4b) IF the speedup is worse than a factor of 1.7, THEN using N cores wastes too many resources and N/2 cores should be used. You can see data from a real example using the program VASP in the following