Difference between revisions of "NEMO/Hardware"

Revision as of 17:44, 12 July 2016


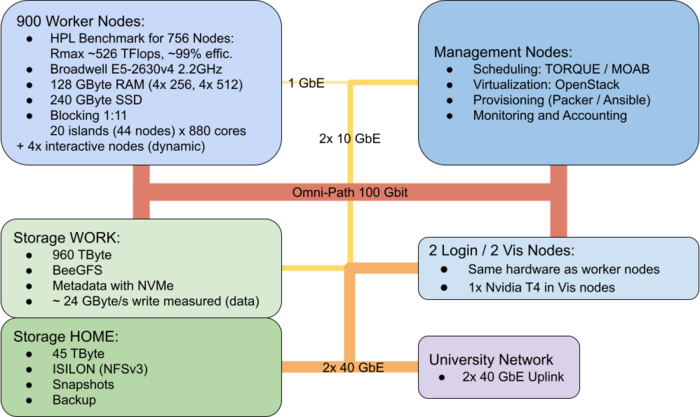

1 System Architecture

The bwForCluster NEMO is a high-performance compute resource with a high-speed interconnect. It is intended for compute activities of researchers from the fields Neuroscience, Elementary Particle Physics and Microsystems Engineering (NEMO).

Figure: bwForCluster NEMO Schematic

1.1 Operating System and Software

- Operating System: CentOS Linux 7 (similar to RHEL 7)

- Queuing System: MOAB / Torque (see Batch Jobs for help)

- (Scientific) Libraries and Software: Environment Modules

1.2 Compute Nodes

For researchers from the scientific fields Neuroscience, Elementary Particle Physics and Microsystems Engineering, the bwForCluster NEMO offers 748 compute nodes plus several special purpose nodes for login, interactive jobs, etc.

Compute node specification:

| | Compute Node | Coprocessor (MIC) |
|---|---|---|
| Quantity | 548 | 4 |
| Processors | 2 x Intel Xeon E5-2630v4 (Broadwell) | 1 x Intel Xeon Phi 7210 Knights Landing (KNL) |
| Processor Frequency (GHz) | 2.2 | 1.3 |
| Number of Cores | 20 | 64 |
| Working Memory DDR4 (GB) | 128 | 16 GB MCDRAM + 96 GB DDR4 |
| Local Disk (GB) | 240 (SSD) | 240 (SSD) |
| Interconnect | Omni-Path 100 | Omni-Path 100 |

1.3 Special Purpose Nodes

Besides the classical compute nodes, several special purpose nodes serve as login and preprocessing nodes, as nodes for interactive jobs, and as hosts for virtual environments that provide a virtual service environment.

2 Storage Architecture

The bwForCluster NEMO provides two separate storage systems, one for the user's home directory $HOME and one serving as a work space. The home directory is limited in space and parallel access but offers snapshots of your files and backup. The work space is a parallel file system based on BeeGFS; it offers fast file access in parallel from many nodes and a larger capacity than the home directory. Additionally, each compute node provides high-speed temporary storage on a node-local solid state disk (SSD), accessible via the $TMPDIR environment variable.

| | $HOME | Work Space | $TMPDIR |
|---|---|---|---|
| Visibility | global (GbE) | global (Omni-Path) | node local |
| Lifetime | permanent | work space lifetime (max. 100 days, with extensions up to 400) | batch job walltime |
| Capacity | 50 TB | 576 TB | 220 GB per node |
| Quotas | 100 GB per user | none | none |
| Backup | snapshots + tape backup | no | no |

- global: all nodes access the same file system

- local: each node has its own file system

- permanent: files are stored permanently

- batch job walltime: files are removed at end of the batch job

2.1 $HOME

Home directories are meant for permanent storage of files that are used continually, such as source code, configuration files and executable programs; the content of home directories is backed up on a regular basis. The files in $HOME are stored on an Isilon OneFS system and provided via NFS to all nodes.

NOTE: Compute jobs on nodes must not write temporary data to $HOME. Instead, they should use the local $TMPDIR directory for I/O-heavy use cases and work spaces for less I/O-intensive multi-node jobs.
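The storage table lists a quota of 100 GB per user for $HOME. The following is a minimal sketch of how usage could be expressed as a percentage of that quota. The helper names `usage_kb` and `percent_of_quota` are hypothetical, and the sketch assumes the quota is counted in units of 1024^3 bytes, which the source does not state.

```shell
#!/bin/bash
# Sketch: express directory usage as a percentage of the $HOME quota.
# Assumption: the 100 GB quota is counted in units of 1024^3 bytes.
quota_gb=100

# Print the disk usage of directory $1 in KiB (du -s: summary, -k: KiB units).
usage_kb() {
    du -sk "$1" | awk '{ print $1 }'
}

# Print used KiB ($1) as a percentage of a quota given in GB ($2).
percent_of_quota() {
    awk -v used="$1" -v quota="$2" \
        'BEGIN { printf "%.2f\n", used / (quota * 1024 * 1024) * 100 }'
}

# Example with a fixed value: 50 GB used out of the 100 GB quota.
percent_of_quota $((50 * 1024 * 1024)) "$quota_gb"
```

To check your own home directory, call `percent_of_quota "$(usage_kb "$HOME")" "$quota_gb"`. Note that `du` can take a while on large directory trees.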

2.2 Work Space

Work spaces are created with the workspace tools. Allocating a work space creates a directory on the parallel storage with a limited lifetime. When this lifetime is reached, the work space is deleted automatically after a grace period. Work spaces can be extended to prevent deletion. You can create reminders and calendar entries to prevent accidental removal.

To create a work space you need to supply a name for your work space area and a lifetime in days. For more information read the corresponding help, e.g. ws_allocate -h.

Defaults and maximum values:

| Setting | Value |
|---|---|
| Default and maximum lifetime (days) | 100 |
| Maximum extensions | 3 |
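The "up to 400" days quoted in the storage table follows from these two values if each extension adds at most one full lifetime. That is an assumption about how the limits combine, so treat the sketch below as an upper bound only; the variable names are illustrative.

```shell
#!/bin/bash
# Values taken from the table of defaults and maximum values.
max_lifetime_days=100
max_extensions=3

# Assumption: every extension is requested at the very end of the current
# lifetime and adds another full lifetime.
max_total_days=$(( max_lifetime_days * (1 + max_extensions) ))

echo "upper bound on total work space lifetime: ${max_total_days} days"
```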

Examples:

| Command | Action |
|---|---|
| ws_allocate mywork 30 | Allocate a work space named "mywork" for 30 days. |
| ws_allocate myotherwork | Allocate a work space named "myotherwork" with maximum lifetime. |
| ws_list -a | List all your work spaces. |
| ws_find mywork | Get absolute path of work space "mywork". |
| ws_extend mywork 30 | Extend lifetime of work space "mywork" by 30 days from now. |
| ws_release mywork | Manually erase your work space "mywork". Please remove directory content first. |
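The commands above can be combined in a script. The following is a minimal sketch that reuses an existing work space or allocates a new one. It assumes that `ws_find` prints nothing when the work space does not exist, which should be checked against `ws_find -h` on the cluster. The names `ws_name`, `ws_days` and `ws_dir` are illustrative.

```shell
#!/bin/bash
ws_name="mywork"   # work space name, as in the examples above
ws_days=30         # requested lifetime in days (maximum is 100)

if command -v ws_find >/dev/null 2>&1; then
    # Look up the absolute path of an existing work space.
    ws_dir=$(ws_find "$ws_name" 2>/dev/null)
    if [ -z "$ws_dir" ]; then
        # Assumption: empty output means the work space does not exist yet.
        ws_allocate "$ws_name" "$ws_days"
        ws_dir=$(ws_find "$ws_name")
    fi
    echo "work space directory: $ws_dir"
else
    # The workspace tools are only available on the cluster.
    ws_dir=""
    echo "workspace tools not found; run this on the cluster" >&2
fi
```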

2.3 Local Disk Space

All compute nodes are equipped with a local SSD with 240 GB capacity. During computation the environment variable $TMPDIR points to this local disk space. The data will become unavailable as soon as the job has finished.
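Because data in $TMPDIR becomes unavailable once the job has finished, results have to be copied to permanent or work space storage before the job ends. The following is a minimal sketch of that pattern. `RESULTS_DIR` is a hypothetical variable that would point to a work space directory; outside a batch job the sketch falls back to `/tmp` and a temporary results directory so that it can be tried anywhere.

```shell
#!/bin/bash
# Node-local scratch: $TMPDIR inside a batch job, /tmp as a fallback elsewhere.
scratch="${TMPDIR:-/tmp}"

# Hypothetical destination for results, e.g. a work space directory.
results_dir="${RESULTS_DIR:-$(mktemp -d)}"

# Create a private working directory on the local disk.
job_dir=$(mktemp -d "$scratch/job.XXXXXX")

# Placeholder for the I/O-heavy computation writing to local disk.
printf 'intermediate data\n' > "$job_dir/output.dat"

# Copy the results away before the job ends, then clean up.
mkdir -p "$results_dir"
cp "$job_dir/output.dat" "$results_dir/"
rm -rf "$job_dir"

echo "results saved in $results_dir"
```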

3 High Performance Network

All compute nodes are interconnected through the high-performance Omni-Path network, which offers very low latency and 100 Gbit/s throughput. The parallel storage for the work spaces is attached via Omni-Path to all cluster nodes. For non-blocking communication, 17 islands with 44 nodes and 880 cores each are available. The islands are connected with a blocking factor of 1:11 (400 Gbit/s of uplink bandwidth for 44 nodes).
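The island figures can be checked with simple arithmetic. The sketch below uses only numbers stated in this document (20 cores per compute node comes from the compute node table); the variable names are illustrative.

```shell
#!/bin/bash
islands=17
nodes_per_island=44
cores_per_node=20     # from the compute node specification
link_gbits=100        # Omni-Path throughput per node
uplink_gbits=400      # uplink bandwidth per island

total_nodes=$(( islands * nodes_per_island ))
cores_per_island=$(( nodes_per_island * cores_per_node ))
island_bandwidth=$(( nodes_per_island * link_gbits ))
blocking_factor=$(( island_bandwidth / uplink_gbits ))

echo "total compute nodes:        $total_nodes"
echo "cores per island:           $cores_per_island"
echo "bandwidth inside an island: $island_bandwidth Gbit/s"
echo "blocking factor:            1:$blocking_factor"
```

The 44 nodes of an island can inject 4400 Gbit/s in total but share 400 Gbit/s towards other islands, which gives the ratio of 1:11.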