Difference between revisions of "Sds-hd hpc access"


Revision as of 13:38, 16 April 2018

1 Access to SDS@hd

SDS@hd ScientificDataStorage © University Heidelberg

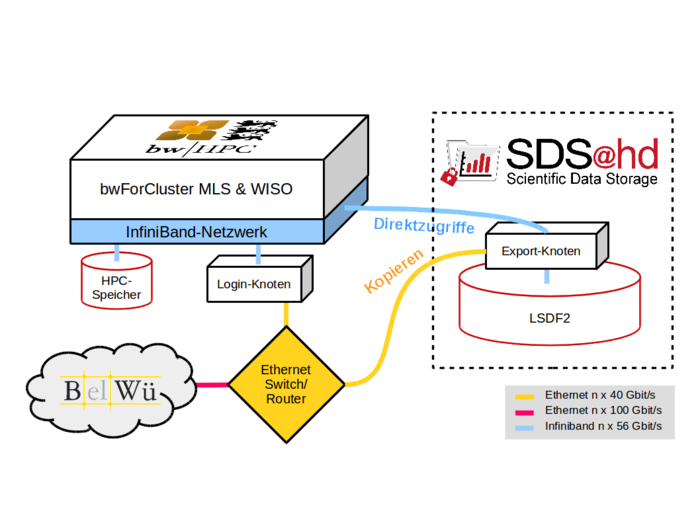

You can access your SDS@hd storage space directly on the bwforCluster MLS&WISO. With a valid Kerberos ticket, your SDS@hd directory is accessible on all compute nodes except standard nodes. Kerberos tickets are obtained and renewed on the data mover nodes data1 and data2. Before a Kerberos ticket expires, a notification is sent by e-mail.

1.1 Direct access in compute jobs

- Log in to a data mover node with command: "ssh data1" or "ssh data2" (passwordless)

- Fetch a Kerberos ticket with command: kinit (use your SDS@hd service password)

- Prepare your jobscript to use the directory /mnt/sds-hd/<your-sv-acronym>

- Submit your job
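The steps above might look as follows in practice. This is a minimal sketch, not an official job template: the helper `require_readable_dir` and the variable `SDS_DIR` are hypothetical names, scheduler directives and the submission command are left out because they depend on the batch system, and the idea that a missing or expired ticket shows up as an unreadable directory is an assumption.

```shell
#!/bin/sh
# Before submitting, on a data mover node (interactive, shown as comments):
#   ssh data1        # or: ssh data2
#   kinit            # enter your SDS@hd service password
#   klist            # optional: show the ticket and its expiry time

# Hypothetical variable; replace the placeholder with your own SV acronym.
SDS_DIR="${SDS_DIR:-/mnt/sds-hd/<your-sv-acronym>}"

# require_readable_dir DIR: succeed only if DIR exists and can be listed.
require_readable_dir() {
    if [ -d "$1" ] && ls "$1" >/dev/null 2>&1; then
        return 0
    fi
    echo "cannot read $1 (is your Kerberos ticket valid?)" >&2
    return 1
}

# In the job script, stop early instead of failing halfway through the job:
#   require_readable_dir "$SDS_DIR" || exit 1
#   ls "$SDS_DIR"
```

Checking the directory at the start of the job makes an access problem visible immediately rather than after the computation has already consumed its allocation.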

1.2 Copying data on data mover node

Certain workflows or I/O patterns may require the transfer of data from SDS@hd to a workspace on the cluster before submitting jobs. Data transfers are possible on the data mover nodes data1 and data2:

- Log in to a data mover node with command: "ssh data1" or "ssh data2" (passwordless)

- Fetch a Kerberos ticket with command: kinit (use your SDS@hd service password)

- Find your SDS@hd directory in /mnt/sds-hd/

- Copy data between your SDS@hd directory and your workspaces

- Destroy your Kerberos ticket with command: kdestroy

- Log out of the data mover node to continue working on the cluster
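The transfer session above could be sketched like this. The helper `copy_tree` and the example paths in the comments are hypothetical and only illustrate the order of the steps; any standard copy tool available on the data mover nodes can be used instead of `cp`.

```shell
#!/bin/sh
# Interactive session on a data mover node (shown as comments):
#   ssh data1            # or: ssh data2
#   kinit                # enter your SDS@hd service password
#   ls /mnt/sds-hd/      # find your SDS@hd directory

# copy_tree SRC DST: copy the contents of directory SRC into DST,
# creating DST if needed and preserving modes and timestamps.
copy_tree() {
    src=$1
    dst=$2
    if [ ! -d "$src" ]; then
        echo "source directory not found: $src" >&2
        return 1
    fi
    mkdir -p "$dst" || return 1
    cp -Rp "$src"/. "$dst"/
}

# Example call with placeholder paths (replace both with your own):
#   copy_tree "/mnt/sds-hd/<your-sv-acronym>/input" "/path/to/your/workspace/input"
#
# Afterwards:
#   kdestroy             # destroy the Kerberos ticket
#   exit                 # log out of the data mover node
```

The same function works in the opposite direction, for example to copy results from a workspace back to the SDS@hd directory before the ticket is destroyed.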