BwUniCluster3.0/Hardware and Architecture

Architecture of bwUniCluster 3.0

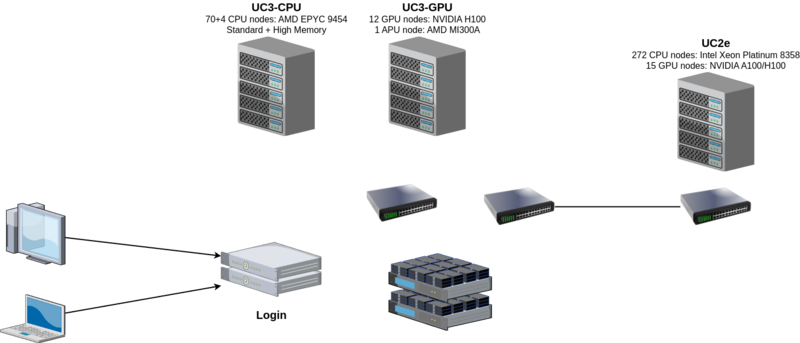

The bwUniCluster 3.0 is a parallel computer with distributed memory. It consists of the bwUniCluster 3.0 components procured in 2024 and also includes the additional compute nodes which were procured as an extension to the bwUniCluster 2.0 in 2022.

Each node of the system consists of two Intel Xeon or AMD EPYC processors, local memory, local storage, network adapters and optional accelerators (NVIDIA A100 and H100, AMD Instinct MI300A). All nodes are connected via a fast InfiniBand interconnect.

The parallel file system (Lustre) is connected to the InfiniBand switch of the compute cluster. This provides a fast and scalable parallel file system.

The operating system on each node is Red Hat Enterprise Linux (RHEL) 9.4.

The individual nodes of the system act in different roles. From an end user's point of view, the different groups of nodes are login nodes and compute nodes. File server nodes and administrative server nodes are not accessible to users.

Login Nodes

The login nodes are the only nodes directly accessible by end users. These nodes are used for interactive login, file management, program development, and interactive pre- and post-processing.

There are two nodes dedicated to this service, and both can be reached from a single address: uc3.scc.kit.edu. A DNS round-robin alias distributes login sessions across the login nodes.
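To see which addresses sit behind the round-robin alias, a lookup along the following lines can help. This is a minimal sketch using only the Python standard library; the helper name `resolve_addresses` and the choice of port 22 (SSH) are assumptions made for illustration, and the addresses returned depend on the DNS state at the time of the query.

```python
import socket


def resolve_addresses(hostname, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IP addresses a hostname resolves to."""
    # getaddrinfo yields (family, type, proto, canonname, sockaddr) tuples;
    # sockaddr[0] is the IP address for both IPv4 and IPv6 entries.
    records = resolver(hostname, 22, proto=socket.IPPROTO_TCP)
    return sorted({record[4][0] for record in records})
```

Calling `resolve_addresses("uc3.scc.kit.edu")` from a machine with network access should list the addresses currently published for the alias. The `resolver` parameter exists only so the function can be exercised without network access.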

To prevent login nodes from being used for activities that are not permitted there and that affect the user experience of other users, long-running and/or compute-intensive tasks are periodically terminated without any prior warning. Please refer to Allowed Activities on Login Nodes.

Compute Nodes

The majority of nodes are compute nodes which are managed by a batch system. Users submit their jobs to the SLURM batch system and a job is executed when the required resources become available (depending on its fair-share priority).

File Systems

bwUniCluster 3.0 comprises two parallel file systems based on Lustre.

Compute Resources

Login nodes

After a successful login, users find themselves on one of the so-called login nodes. Technically, these largely correspond to a standard CPU node, i.e. each login node has two AMD EPYC 9454 processors with a total of 96 cores. Login nodes are the bridgehead for accessing computing resources. Data and software are organized here, computing jobs are initiated and managed, and computing resources allocated for interactive use can also be accessed from here.

Any compute-intensive job running on the login nodes will be terminated without any notice.

Compute nodes

All compute activities on bwUniCluster 3.0 have to be performed on the compute nodes. Compute nodes are only available by requesting the corresponding resources via the queuing system. As soon as the requested resources are available, they either execute the automated tasks defined in a batch script or can be accessed interactively. Please refer to Running Jobs for how to request resources.
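As an illustration of what such a resource request looks like, the sketch below assembles the text of a Slurm batch script. The `#SBATCH` options used (`--partition`, `--nodes`, `--ntasks-per-node`, `--time`) are standard `sbatch` options; the helper `render_batch_script`, the concrete values, and the program name `./my_program` are hypothetical placeholders. The limits that actually apply to each queue are not stated on this page, so treat the numbers as assumptions.

```python
def render_batch_script(partition, nodes, tasks_per_node, walltime, command):
    """Build the text of a Slurm batch script from a few resource requests."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical request: two standard CPU nodes (queue "cpu") with one task
# per physical core for 30 minutes. "./my_program" is a placeholder.
script = render_batch_script(
    partition="cpu",
    nodes=2,
    tasks_per_node=96,
    walltime="00:30:00",
    command="srun ./my_program",
)
```

The resulting text can be written to a file and handed to `sbatch`. Running Jobs remains the authoritative description of the available options and limits.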

The following compute node types are available:

CPU nodes

- Standard: Two AMD EPYC 9454 processors per node with a total of 96 physical CPU cores or 192 logical cores (Hyper-Threading) per node. The nodes were procured in 2024.

- Ice Lake: Two Intel Xeon Platinum 8358 processors per node with a total of 64 physical CPU cores or 128 logical cores (Hyper-Threading) per node. The nodes were procured in 2022 as an extension to bwUniCluster 2.0.

- High Memory: Similar to the standard nodes, but with six times as much memory.

GPU nodes

- NVIDIA GPU x4: Similar to the standard nodes, but with larger memory and four NVIDIA H100 GPUs.

- AMD GPU x4: AMD's accelerated processing unit (APU) MI300A with 4 CPU sockets and 4 compute units which share the same high-bandwidth memory (HBM).

- Ice Lake NVIDIA GPU x4: Similar to the Ice Lake nodes, but with larger memory and four NVIDIA A100 or H100 GPUs.

- Cascade Lake NVIDIA GPU x4: Nodes with four NVIDIA A100 GPUs.

| Node Type | CPU nodes Ice Lake | CPU nodes Standard | CPU nodes High Memory | GPU nodes NVIDIA GPU x4 | GPU node AMD GPU x4 | GPU nodes Ice Lake NVIDIA GPU x4 | GPU nodes Cascade Lake NVIDIA GPU x4 | Login nodes |
|---|---|---|---|---|---|---|---|---|
| Availability in queues | cpu_il, dev_cpu_il | cpu, dev_cpu | highmem, dev_highmem | gpu_h100, dev_gpu_h100 | gpu_mi300 | gpu_a100_il / gpu_h100_il | gpu_a100_short | - |
| Number of nodes | 272 | 70 | 4 | 12 | 1 | 15 | 19 | 2 |
| Processors | Intel Xeon Platinum 8358 | AMD EPYC 9454 | AMD EPYC 9454 | AMD EPYC 9454 | AMD Zen 4 | Intel Xeon Platinum 8358 | Intel Xeon Gold 6248R | AMD EPYC 9454 |
| Number of sockets | 2 | 2 | 2 | 2 | 4 | 2 | 2 | 2 |
| Total number of cores | 64 | 96 | 96 | 96 | 96 (4x 24) | 64 | 48 | 96 |
| Main memory | 256 GiB | 384 GiB | 2304 GiB | 768 GiB | 4x 128 GiB HBM3 | 512 GiB | 384 GiB | 384 GiB |
| Local SSD | 1.8 TB NVMe | 3.84 TB NVMe | 15.36 TB NVMe | 15.36 TB NVMe | 7.68 TB NVMe | 6.4 TB NVMe | 1.92 TB SATA SSD | 7.68 TB SATA SSD |
| Accelerators | - | - | - | 4x NVIDIA H100 | 4x AMD Instinct MI300A | 4x NVIDIA A100 / H100 | 4x NVIDIA A100 | - |
| Accelerator memory | - | - | - | 94 GiB | APU | 80 GiB / 94 GiB | 40 GiB | - |
| Interconnect | IB HDR200 | IB 2x NDR200 | IB 2x NDR200 | IB 4x NDR200 | IB 2x NDR200 | IB 2x HDR200 | IB 4x EDR | IB 1x NDR200 |

Table 1: Hardware overview and properties
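When sizing a job, the memory available per core is often more useful than the per-node totals. The following sketch derives it from the figures in Table 1. The dictionary layout and function names are illustrative assumptions. The AMD GPU node is left out because its cores share HBM rather than conventional main memory, and the login nodes are left out because they are not compute resources.

```python
# Values copied from Table 1:
# node type -> (number of nodes, cores per node, main memory per node in GiB)
NODE_TYPES = {
    "CPU Ice Lake": (272, 64, 256),
    "CPU Standard": (70, 96, 384),
    "CPU High Memory": (4, 96, 2304),
    "GPU NVIDIA x4": (12, 96, 768),
    "GPU Ice Lake NVIDIA x4": (15, 64, 512),
    "GPU Cascade Lake NVIDIA x4": (19, 48, 384),
}


def memory_per_core_gib(node_type):
    """Main memory per physical core in GiB for one node of the given type."""
    _, cores, memory_gib = NODE_TYPES[node_type]
    return memory_gib / cores


def total_cores():
    """Physical cores summed over all node types listed in NODE_TYPES."""
    return sum(nodes * cores for nodes, cores, _ in NODE_TYPES.values())
```

Both the Ice Lake and the standard CPU nodes work out to 4 GiB per core, while the high memory nodes offer 24 GiB per core. Part of this memory is used by the operating system, so the amount a job can actually request is somewhat lower.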

File Systems

On bwUniCluster 3.0 the following file systems are available:

- $HOME

  The HOME directory is created automatically after account activation, and the environment variable $HOME holds its name. HOME is the place where users find themselves after login.

- Workspaces

  Users can create so-called workspaces for non-permanent data with temporary lifetime.

- Workspaces on flash storage

  A further workspace file system based on flash-only storage is available for special requirements and certain users.

- $TMPDIR

  The directory $TMPDIR is only available and visible on the local node during the runtime of a compute job. It is located on fast SSD storage devices.

- BeeOND (BeeGFS On-Demand)

  On request a parallel on-demand file system (BeeOND) is created which uses the SSDs of the nodes which were allocated to the batch job.

- LSDF Online Storage

  On request the external LSDF Online Storage is mounted on the nodes which were allocated to the batch job. On the login nodes, LSDF is automatically mounted.

Which file system to use?

You should separate your data and store it on the appropriate file system:

- Permanently needed data such as software or important results should be stored in $HOME, but capacity restrictions (quotas) apply. If you accidentally delete data in $HOME, there is a chance that we can restore it from backup.

- Permanent data which is not needed for months or which exceeds the capacity restrictions should be moved to the LSDF Online Storage or to the archive and deleted from the file systems.

- Temporary data which is only needed on a single node and which does not exceed the disk space shown in Table 1 above should be stored below $TMPDIR.

- Data which is read many times on a single node, e.g. for AI training, should be copied to $TMPDIR and read from there.

- Temporary data which is used from many nodes of your batch job and which is only needed during job runtime should be stored on the parallel on-demand file system BeeOND.

- Temporary data which can be recomputed or which is the result of one job and input for another job should be stored in workspaces. The lifetime of data in workspaces is limited and depends on the lifetime of the workspace, which can be several months.
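The guidance above can be summarized as a small decision function. This is only a sketch of the rules as stated on this page: the function name, the parameter names, and the returned labels are assumptions, and the function does not check whether the data actually fits on the local SSD.

```python
def suggest_file_system(permanent, single_node=False, only_during_job=False,
                        exceeds_home_quota=False, unused_for_months=False):
    """Map a few properties of a data set to the file system suggested above."""
    if permanent:
        # Permanent data that is too large or rarely needed leaves the cluster.
        if exceeds_home_quota or unused_for_months:
            return "LSDF Online Storage or archive"
        return "$HOME"
    if only_during_job:
        # Job-lifetime data: node-local SSD for one node, BeeOND for many.
        return "$TMPDIR" if single_node else "BeeOND"
    # Recomputable data, or results passed from one job to the next.
    return "workspace"
```

For example, checkpoint files that a follow-up job reads are temporary but must outlive the job, so `suggest_file_system(permanent=False)` returns `"workspace"`.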

For further details please check: File System Details

$HOME

The $HOME directories of bwUniCluster 3.0 users are located on the parallel file system Lustre. You have access to your $HOME directory from all nodes of UC3. These directories are backed up to a tape archive automatically at regular intervals. The directory $HOME is intended for files that are used permanently, such as source code, configuration files, and executable programs.

Workspaces

On UC3, workspaces should be used to store large non-permanent data sets, e.g. restart files or output data that has to be post-processed. The file system used for workspaces is also the parallel file system Lustre. This file system is specifically designed for parallel access and for high throughput to large files. It can provide data transfer rates of up to 40 GB/s for both reads and writes when data is accessed in parallel.

On UC3 there is a default user quota limit of 40 TiB and 20 million inodes (files and directories) per user.
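Because the quota has both a capacity and an inode component, either one can be the limiting factor. The sketch below expresses usage as a fraction of the default limits quoted above. The function names are illustrative assumptions, and the usage figures have to come from whatever quota report is available to you; this page does not describe one.

```python
TIB = 2**40  # one tebibyte in bytes

DEFAULT_CAPACITY_QUOTA = 40 * TIB  # default capacity limit in bytes
DEFAULT_INODE_QUOTA = 20_000_000   # default limit on files and directories


def quota_usage(bytes_used, inodes_used):
    """Return (capacity fraction, inode fraction) relative to the default quota."""
    return bytes_used / DEFAULT_CAPACITY_QUOTA, inodes_used / DEFAULT_INODE_QUOTA


def limiting_resource(bytes_used, inodes_used):
    """Name the quota component that is closer to being exhausted."""
    capacity, inodes = quota_usage(bytes_used, inodes_used)
    return "inodes" if inodes > capacity else "capacity"
```

With these defaults, the two limits are reached together at an average file size of about 2 MiB, so data sets made of much smaller files will run into the inode limit first.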

Detailed information on Workspaces

Workspaces on flash storage

Another workspace file system based on flash-only storage is available for special requirements and certain users. If possible, this file system should be used from the Ice Lake nodes of bwUniCluster 3.0 (queue cpu_il). It provides high IOPS rates and better performance for small files. The quota limits are lower than on the normal workspace file system.

Detailed information on Workspaces on flash storage

$TMPDIR

The environment variable $TMPDIR contains the name of a directory which is located on the local SSD of each node. This directory should be used for temporary files that are accessed from the local node. It should also be used if you read the same data many times from a single node, e.g. if you are doing AI training. Because the local SSD storage devices are extremely fast, performance with small files is much better than on the parallel file systems.
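Staging a data set to the local SSD at the start of a job might look like the sketch below. It uses only the Python standard library; the helper name `stage_to_tmpdir` is an assumption, and the copy is made with `shutil.copytree`, which is one simple option rather than a recommendation of this page.

```python
import os
import shutil
from pathlib import Path


def stage_to_tmpdir(source_dir, environ=os.environ):
    """Copy source_dir into $TMPDIR and return the path of the local copy."""
    tmpdir = environ.get("TMPDIR")
    if not tmpdir:
        raise RuntimeError("TMPDIR is not set; run this inside a batch job")
    source = Path(source_dir)
    target = Path(tmpdir) / source.name
    # dirs_exist_ok=True avoids an error if the target directory already exists.
    shutil.copytree(source, target, dirs_exist_ok=True)
    return target
```

A training script would call `stage_to_tmpdir` once with the path of its data set and then read from the returned path for all further passes over the data. Since $TMPDIR only exists during the job, any results written there have to be copied back before the job ends.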

Detailed information on $TMPDIR

BeeOND (BeeGFS On-Demand)

Users can request a private BeeOND (on-demand BeeGFS) parallel file system for each job. The file system is created during job startup and purged when the job completes.

Detailed information on BeeOND

LSDF Online Storage

The LSDF Online Storage allows dedicated users to store scientific measurement data and simulation results. BwUniCluster 3.0 has an extremely fast network connection to the LSDF Online Storage. This file system provides external access via different protocols and is only available for certain users.