BinAC2/Hardware and Architecture: Difference between revisions

F Bartusch (talk | contribs) No edit summary |

F Bartusch (talk | contribs) No edit summary |

||

| (41 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= Hardware and Architecture = |

|||

The bwForCluster BinAC 2 supports researchers from the broader fields of Bioinformatics, Astrophysics, and |

The bwForCluster BinAC 2 supports researchers from the broader fields of Bioinformatics, Medical Informatics, Astrophysics, Geosciences and Pharmacy. |

||

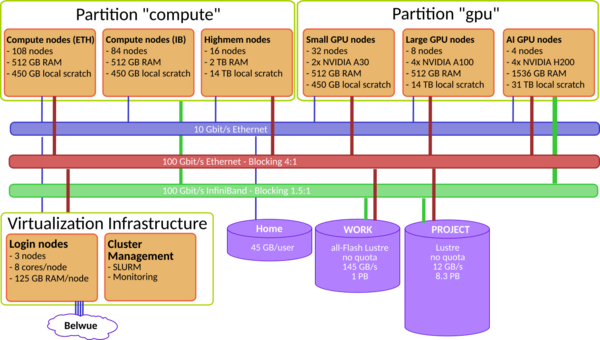

[[File:Binac2 schema.png|600px|thumb|center|Overview on the BinAC 2 hardware architecture.]] |

|||

=== Operating System and Software === |

|||

== Operating System and Software == |

|||

* Operating System: Rocky Linux 9.6 |

|||

* Queuing System: [https://slurm.schedmd.com/documentation.html Slurm] (see [[BinAC2/Slurm]] for help) |

* Queuing System: [https://slurm.schedmd.com/documentation.html Slurm] (see [[BinAC2/Slurm]] for help) |

||

* (Scientific) Libraries and Software: [[Environment Modules]] |

* (Scientific) Libraries and Software: [[Environment Modules]] |

||

== Compute Nodes == |

|||

BinAC 2 offers compute nodes, high-mem nodes, and three types of GPU nodes. |

|||

=== Compute Nodes === |

|||

* 180 compute nodes |

|||

* 16 SMP nodes |

|||

BinAC 2 offers compute nodes, high-mem nodes, and two types of GPU nodes. |

|||

* |

* 32 GPU nodes (2xA30) |

||

* 8 GPU nodes (4xA100) |

|||

* 14 SMP nodes |

|||

* |

* 4 GPU nodes (4xH200) |

||

* plus several special purpose nodes for login, interactive jobs, etc. |

|||

* 8 GPU nodes (A100) |

|||

* plus several special purpose nodes for login, interactive jobs, etc. |

|||

Compute node specification: |

Compute node specification: |

||

| Line 27: | Line 29: | ||

! style="width:10%"| GPU (A30) |

! style="width:10%"| GPU (A30) |

||

! style="width:10%"| GPU (A100) |

! style="width:10%"| GPU (A100) |

||

! style="width:10%"| GPU (H200) |

|||

|- |

|- |

||

!scope="column"| Quantity |

!scope="column"| Quantity |

||

| |

| 168 / 12 |

||

| 14 |

| 14 / 2 |

||

| 32 |

| 32 |

||

| 8 |

| 8 |

||

| 4 |

|||

|- |

|- |

||

!scope="column" | Processors |

!scope="column" | Processors |

||

| 2 x [https://www.amd.com/de/products/ |

| 2 x [https://www.amd.com/de/products/processors/server/epyc/7003-series/amd-epyc-7543.html AMD EPYC Milan 7543] / 2 x [https://www.amd.com/en/products/processors/server/epyc/7003-series/amd-epyc-75f3.html AMD EPYC Milan 75F3] |

||

| 2 x [https://www.amd.com/de/products/ |

| 2 x [https://www.amd.com/de/products/processors/server/epyc/7003-series/amd-epyc-7443.html AMD EPYC Milan 7443] / 2 x [https://www.amd.com/en/products/processors/server/epyc/7003-series/amd-epyc-75f3.html AMD EPYC Milan 75F3] |

||

| 2 x [https://www.amd.com/de/products/ |

| 2 x [https://www.amd.com/de/products/processors/server/epyc/7003-series/amd-epyc-7543.html AMD EPYC Milan 7543] |

||

| 2 x [https://www.amd.com/de/products/ |

| 2 x [https://www.amd.com/de/products/processors/server/epyc/7003-series/amd-epyc-7543.html AMD EPYC Milan 7543] |

||

| 2 x [https://www.amd.com/de/products/processors/server/epyc/9005-series/amd-epyc-9555.html AMD EPYC Turin 9555] |

|||

|- |

|- |

||

!scope="column" | Processor Frequency (GHz) |

!scope="column" | Processor Base Frequency (GHz) |

||

| 2.80 |

| 2.80 / 2.95 |

||

| 2.85 |

| 2.85 / 2.95 |

||

| 2.80 |

| 2.80 |

||

| 2.80 |

| 2.80 |

||

| 3.20 |

|||

|- |

|- |

||

!scope="column" | Number of Cores |

!scope="column" | Number of Physical Cores / Hyperthreads |

||

| 64 |

| 64 / 128 |

||

| 48 / 96 // 64 / 128 |

|||

| 48 |

|||

| 64 |

| 64 / 128 |

||

| 64 |

| 64 / 128 |

||

| 128 / 256 |

|||

|- |

|- |

||

!scope="column" | Working Memory (GB) |

!scope="column" | Working Memory (GB) |

||

| Line 57: | Line 64: | ||

| 512 |

| 512 |

||

| 512 |

| 512 |

||

| 1536 |

|||

|- |

|- |

||

!scope="column" | Local Disk ( |

!scope="column" | Local Disk (GiB) |

||

| |

| 450 (NVMe-SSD) |

||

| |

| 14000 (NVMe-SSD) |

||

| |

| 450 (NVMe-SSD) |

||

| |

| 14000 (NVMe-SSD) |

||

| 28000 (NVMe-SSD) |

|||

|- |

|- |

||

!scope="column" | Interconnect |

!scope="column" | Interconnect |

||

| HDR IB ( |

| HDR 100 IB (84 nodes) / 100GbE (96 nodes) |

||

| |

| 100GbE |

||

| |

| 100GbE |

||

| |

| 100GbE |

||

| HDR 200 IB + 100GbE |

|||

|- |

|- |

||

!scope="column" | Coprocessors |

!scope="column" | Coprocessors |

||

| - |

| - |

||

| - |

| - |

||

| 2 x [https://www.nvidia.com/de-de/data-center/products/a30-gpu/ NVIDIA A30 (24 GB ECC HBM2, NVLink] |

| 2 x [https://www.nvidia.com/de-de/data-center/products/a30-gpu/ NVIDIA A30 (24 GB ECC HBM2, NVLink)] |

||

| 4 x [https://www.nvidia.com/de-de/data-center/a100/ NVIDIA A100 (80 GB ECC HBM2e)] |

| 4 x [https://www.nvidia.com/de-de/data-center/a100/ NVIDIA A100 (80 GB ECC HBM2e)] |

||

| 4 x [https://www.nvidia.com/de-de/data-center/h200/ NVIDIA H200 NVL (141 GB ECC HBM3e, NVLink)] |

|||

|} |

|} |

||

= Network = |

|||

The compute nodes and the parallel file system are connected via 100 Gigabit Ethernet (100GbE).</br> |

|||

In contrast to BinAC 1, not all compute nodes are connected via InfiniBand. Only 84 standard compute nodes are connected via HDR-100 InfiniBand (100 Gb/s). To run your jobs on the InfiniBand nodes, submit them with <code>--constraint=ib</code>. |

|||

'''Question:'''</br> |

|||

OpenMPI throws the following warning: |

|||

<pre> |

|||

-------------------------------------------------------------------------- |

|||

No OpenFabrics connection schemes reported that they were able to be |

|||

used on a specific port. As such, the openib BTL (OpenFabrics |

|||

support) will be disabled for this port. |

|||

Local host: node1-083 |

|||

Local device: mlx5_0 |

|||

Local port: 1 |

|||

CPCs attempted: rdmacm, udcm |

|||

-------------------------------------------------------------------------- |

|||

[node1-083:2137377] 3 more processes have sent help message help-mpi-btl-openib-cpc-base.txt / no cpcs for port |

|||

[node1-083:2137377] Set MCA parameter "orte_base_help_aggregate" to 0 to see all help / error messages |

|||

</pre> |

|||

What should I do? |

|||

'''Answer:'''</br> |

|||

=== Special Purpose Nodes === |

|||

BinAC2 has two (almost) separate networks, a 100GbE network and an InfiniBand network, each connecting a subset of the nodes. The two networks require different cables and switches. |

|||

Concerning the network cards for the nodes, however, there exist VPI network cards which can be configured to work in either mode (https://docs.nvidia.com/networking/display/connectx6vpi/specifications#src-2487215234_Specifications-MCX653105A-ECATSpecifications). |

|||

OpenMPI can use a number of layers for transferring data and messages between processes. When it starts up, it tests all means of communication that were configured during compilation and then tries to figure out the fastest path between all processes. |

|||

If OpenMPI encounters such a VPI card, it will first try to establish a Remote Direct Memory Access (RDMA) communication channel using the OpenFabrics Interfaces (OFI) layer. |

|||

On nodes with 100Gb ethernet, this fails as there is no RDMA protocol configured. OpenMPI will fall back to TCP transport but not without complaints. |

|||

'''Workaround:'''</br> |

|||

Besides the classical compute node several nodes serve as login and preprocessing nodes, nodes for interactive jobs and nodes for creating virtual environments providing a virtual service environment. |

|||

For single-node jobs or on regular compute nodes, A30 and A100 GPU nodes: Add the lines |

|||

<pre> |

|||

export OMPI_MCA_btl="^ofi,openib" |

|||

export OMPI_MCA_mtl="^ofi" |

|||

</pre> |

|||

to your job script to disable the OFI transport layer. If you need high-bandwidth, low-latency transport between all processes on all nodes, switch to the Infiniband partition (<code>#SBATCH --constraint=ib</code>). ''Do not turn off the OFI layer on Infiniband nodes as this will be the best choice between nodes!'' |
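The workaround above can be made conditional, so that one job script works on both kinds of nodes. The following is only a minimal sketch: the function name <code>configure_openmpi_transport</code> and the variable <code>BINAC_NETWORK</code> are hypothetical and are not provided by the cluster. It assumes that you set the variable yourself to <code>ib</code> whenever you submit with <code>--constraint=ib</code>.

```shell
#!/bin/bash
# Hypothetical helper (not part of BinAC 2): choose the OpenMPI transport settings.
# Pass "ib" if the job was submitted with --constraint=ib, anything else otherwise.
configure_openmpi_transport() {
    local network="$1"
    if [ "$network" = "ib" ]; then
        # InfiniBand nodes: keep the OFI layer enabled by clearing the two overrides.
        unset OMPI_MCA_btl OMPI_MCA_mtl
    else
        # Ethernet nodes: disable OFI/openib so OpenMPI uses TCP without the warning.
        export OMPI_MCA_btl="^ofi,openib"
        export OMPI_MCA_mtl="^ofi"
    fi
}

# BINAC_NETWORK is an assumed, user-defined variable; the default is the Ethernet case.
configure_openmpi_transport "${BINAC_NETWORK:-eth}"
```

Call the function before <code>mpirun</code> or <code>srun</code> in the job script. Note that the <code>ib</code> branch also clears any value you may have set for these two variables earlier.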

|||

= File Systems = |

|||

== Storage Architecture == |

|||

The bwForCluster BinAC 2 consists of two separate storage systems, one for the user's home directory $HOME and one serving as a project/work space. |

|||

The bwForCluster [https://www.binac.uni-tuebingen.de BinAC] consists of two separate storage systems, one for the user's home directory <tt>$HOME</tt> and one serving as a work space. The home directory is limited in space and parallel access but offers snapshots of your files and Backup. The work space is a parallel file system which offers fast and parallel file access and a bigger capacity than the home directory. This storage is based on [https://www.beegfs.com/ BeeGFS] and can be accessed parallel from many nodes. Additionally, each compute node provides high-speed temporary storage (SSD) on the node-local solid state disk via the <tt>$TMPDIR</tt> environment variable. |

|||

The home directory is limited in space and parallel access but offers snapshots of your files and backup. |

|||

The project/work storage is a parallel file system (PFS) which offers fast and parallel file access and a bigger capacity than the home directory. It is mounted at <code>/pfs/10</code> on the login and compute nodes. This storage is based on Lustre and can be accessed in parallel from many nodes. The PFS contains the project and the work directory. Each compute project has its own directory at <code>/pfs/10/project</code> that is accessible to all members of the compute project. |

|||

Each user can create workspaces under <code>/pfs/10/work</code> using the workspace tools. These directories are only accessible for the user who created the workspace. |

|||

Additionally, each compute node provides high-speed temporary storage (SSD) on the node-local solid state disk via the $TMPDIR environment variable. |

|||

{| class="wikitable" |

{| class="wikitable" |

||

| Line 92: | Line 139: | ||

! style="width:10%"| |

! style="width:10%"| |

||

! style="width:10%"| <tt>$HOME</tt> |

! style="width:10%"| <tt>$HOME</tt> |

||

! style="width:10%"| |

! style="width:10%"| project |

||

! style="width:10%"| work |

|||

! style="width:10%"| <tt>$TMPDIR</tt> |

! style="width:10%"| <tt>$TMPDIR</tt> |

||

|- |

|- |

||

!scope="column" | Visibility |

!scope="column" | Visibility |

||

| global |

| global |

||

| global |

| global |

||

| global |

|||

| node local |

| node local |

||

|- |

|- |

||

!scope="column" | Lifetime |

!scope="column" | Lifetime |

||

| permanent |

| permanent |

||

| permanent |

|||

| work space lifetime (max. 30 days, max. 3 extensions) |

|||

| work space lifetime (max. 30 days, max. 5 extensions) |

|||

| batch job walltime |

| batch job walltime |

||

|- |

|- |

||

!scope="column" | Capacity |

!scope="column" | Capacity |

||

| |

| - |

||

| |

| 8.1 PB |

||

| 1000 TB |

|||

| 211 GB per node |

|||

| 480 GB (compute nodes); 7.7 TB (GPU-A30 nodes); 16 TB (GPU-A100 and SMP nodes); 31 TB (GPU-H200 nodes) |

|||

|- |

|||

!scope="column" | File System Type |

|||

| NFS |

|||

| Lustre |

|||

| Lustre |

|||

| XFS |

|||

|- |

|||

!scope="column" | Speed (read) |

|||

| ≈ 1 GB/s, shared by all nodes |

|||

| max. 12 GB/s |

|||

| ≈ 145 GB/s peak, aggregated over 56 nodes, ideal striping |

|||

| ≈ 3 GB/s (compute) / ≈ 5 GB/s (GPU-A30) / ≈ 26 GB/s (GPU-A100 + SMP) / ≈ 42 GB/s (GPU-H200) per node |

|||

|- |

|- |

||

!scope="column" | [https://en.wikipedia.org/wiki/Disk_quota#Quotas Quotas] |

!scope="column" | [https://en.wikipedia.org/wiki/Disk_quota#Quotas Quotas] |

||

| 40 GB per user |

| 40 GB per user |

||

| not yet, maybe in the future |

|||

| none |

| none |

||

| none |

| none |

||

|- |

|- |

||

!scope="column" | Backup |

!scope="column" | Backup |

||

| yes |

| yes (nightly) |

||

| no |

| '''no''' |

||

| no |

| '''no''' |

||

| '''no''' |

|||

|} |

|} |

||

| Line 126: | Line 191: | ||

batch job walltime : files are removed at end of the batch job |

batch job walltime : files are removed at end of the batch job |

||

{| class="wikitable" style="color:red; background-color:#ffffcc;" cellpadding="10" |

|||

| |

|||

Please note that due to the large capacity of '''work''' and '''project''' and due to frequent file changes on these file systems, no backup can be provided.</br> |

|||

Backing up these file systems would require a redundant storage facility with multiple times the capacity of '''project'''. Furthermore, regular backups would significantly degrade the performance.</br> |

|||

Data is stored redundantly, i.e. immune against disk failures but not immune against catastrophic incidents like cyber attacks or a fire in the server room.</br> |

|||

Please consider using one of the remote storage facilities like [https://wiki.bwhpc.de/e/SDS@hd SDS@hd], [https://uni-tuebingen.de/einrichtungen/zentrum-fuer-datenverarbeitung/projekte/laufende-projekte/bwsfs bwSFS], [https://www.scc.kit.edu/en/services/lsdf.php LSDF Online Storage] or the [https://www.rda.kit.edu/english/ bwDataArchive] to back up your valuable data. |

|||

|} |

|||

=== $HOME === |

|||

=== Home === |

|||

Home directories are meant for permanent storage of files that are used repeatedly, such as source code, configuration files, and executable programs; the content of home directories is backed up on a regular basis. |

|||

Home directories are meant for permanent storage of files that are used repeatedly, such as source code, configuration files, and executable programs; the content of home directories is backed up on a regular basis. |

|||

Because the backup space is limited we enforce a quota of 40GB on the home directories. |

|||

'''NOTE:''' |

|||

Compute jobs on nodes must not write temporary data to $HOME. |

|||

Instead they should use the local $TMPDIR directory for I/O-heavy use cases |

|||

and work spaces for less I/O intense multinode-jobs. |
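A common way to follow this rule is to stage data through the node-local directory. The following is a minimal sketch, not an official BinAC 2 tool: the function name <code>run_in_tmpdir</code> and its arguments are hypothetical. It assumes that <code>$TMPDIR</code> is set inside a batch job and falls back to <code>/tmp</code> elsewhere.

```shell
#!/bin/bash
# Hypothetical helper: run a command with its input and output staged through $TMPDIR.
# Usage: run_in_tmpdir <input_dir> <result_dir> <command> [args...]
run_in_tmpdir() {
    local input_dir="$1" result_dir="$2"
    shift 2
    local scratch
    # Create a private scratch directory on the node-local disk.
    scratch="$(mktemp -d "${TMPDIR:-/tmp}/job.XXXXXX")" || return 1
    # Stage in: copy the input files to the scratch directory.
    cp -r "$input_dir"/. "$scratch"/ || return 1
    # Run the I/O-heavy step inside the scratch directory.
    ( cd "$scratch" && "$@" ) || return 1
    # Stage out: copy everything back before the job ends and $TMPDIR is cleaned.
    mkdir -p "$result_dir" && cp -r "$scratch"/. "$result_dir"/ || return 1
    rm -rf -- "$scratch"
}
```

In a job script this could be called as <code>run_in_tmpdir "$HOME/input" "$HOME/results" ./my_program</code>, where the paths and the program name are placeholders.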

|||

<!-- |

<!-- |

||

Current disk usage on home directory and quota status can be checked with the '''diskusage''' command: |

Current disk usage on home directory and quota status can be checked with the '''diskusage''' command: |

||

$ diskusage |

$ diskusage |

||

| Line 138: | Line 217: | ||

------------------------------------------------------------------------ |

------------------------------------------------------------------------ |

||

<username> 4.38 100.00 4.38 |

<username> 4.38 100.00 4.38 |

||

--> |

--> |

||

=== Project === |

|||

The data is stored on HDDs. The primary focus of <code>/pfs/10/project</code> is pure capacity, not speed. |

|||

NOTE: |

|||

Compute jobs on nodes must not write temporary data to $HOME. |

|||

Instead they should use the local $TMPDIR directory for I/O-heavy use cases |

|||

and work spaces for less I/O intense multinode-jobs. |

|||

Every project gets a dedicated directory located at: |

|||

<syntaxhighlight> |

|||

<!-- |

|||

/pfs/10/project/<project_id>/ |

|||

'''Quota is full - what to do''' |

|||

</syntaxhighlight> |

|||

You can check the project(s) you are member of via: |

|||

In case of 100% usage of the quota user can get some problems with disk writing operations (e.g. error messages during the file copy/edit/save operations). To avoid it - please remove some data that you don't need from the $HOME directory or move it to some temporary place. |

|||

<syntaxhighlight> |

|||

As temporary place for the data user can use: |

|||

# id $USER | grep -o 'bw[^)]*' |

|||

bw16f003 |

|||

</syntaxhighlight> |

|||

In this case, your project directory would be: |

|||

* '''Workspace''' - space on the BeeGFS file system, lifetime up to 90 days (see below) |

|||

<code> |

|||

/pfs/10/project/bw16f003/ |

|||

</code> |

|||

Check our [[BinAC2/Project_Data_Organization | data organization guide ]] for methods to organize data inside the project directory. |
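If you are a member of several projects, the lookup above can be turned into a list of directories. This is only a sketch: the function name <code>project_dirs_from_id</code> is hypothetical, and it assumes that all project groups start with <code>bw</code>, as in the example above.

```shell
#!/bin/bash
# Hypothetical helper: read `id` output on stdin and print one project
# directory per project group. The 'bw[^)]*' pattern is the one used above.
project_dirs_from_id() {
    grep -o 'bw[^)]*' | sort -u | while read -r project_id; do
        printf '/pfs/10/project/%s\n' "$project_id"
    done
}

# On the cluster: id "$USER" | project_dirs_from_id
```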

|||

* '''Scratch on login nodes''' - special directory on every login node (login01..login03): |

|||

** Access via variable $TMPDIR (e.g. "cd $TMPDIR") |

|||

** Lifetime of data - minimum 7 days (based on the last access time) |

|||

** Data is private for every user |

|||

** Each login node has own scratch directory (data is NOT shared) |

|||

** There is NO backup of the data |

|||

=== Workspaces === |

|||

To get optimal and comfortable work with the $HOME directory is important to keep the data in order (remove unnecessary and temporary data, archive big files, save large files only on the workspace). |

|||

--> |

|||

Data on the fast storage pool at <code>/pfs/10/work</code> is stored on SSDs. |

|||

The primary focus of this filesystem is speed, not capacity.<br /> |

|||

Data on <code>/pfs/10/work</code> is organized into so-called ''workspaces''. |

|||

A workspace is a directory that you create and manage using the workspace tools. |

|||

{|style="background:#deffee; width:100%;" |

|||

=== Work Space === |

|||

|style="padding:5px; background:#cef2e0; text-align:left"| |

|||

[[Image:Attention.svg|center|25px]] |

|||

|style="padding:5px; background:#cef2e0; text-align:left"| |

|||

Please always remember that workspaces are intended solely for temporary work data, and there is no backup of data in the workspaces.<br /> |

|||

If you don't extend your workspaces, they will be deleted at some point. |

|||

|} |

|||

==== Workspace tools documentation ==== |

|||

You can find more information about the workspace tools in the general documentation: |

|||

:: → '''[[Workspace | General workspace tools documentation]]''' |

|||

==== BinAC 2 workspace policies and limits ==== |

|||

Some values and behaviors described in the general workspace documentation do not apply to BinAC 2.<br /> |

|||

On BinAC 2, workspaces: |

|||

* have a '''lifetime of 30 days''' |

|||

* can be extended up to '''five times''' |

|||

Because the capacity of <code>/pfs/10/work</code> is limited, workspace expiration is enforced. |

|||

You will receive automated email reminders one week before a workspace expires. |

|||

If a workspace’s lifetime expires (i.e. is not extended), it is moved to the special directory |

|||

<code>/pfs/10/work/.removed</code>. |

|||

Expired workspaces can be restored within a keep time of 14 days. |

|||

After this period, expired workspaces are moved to a special location in the project filesystem. |

|||

After at least six months, a workspace is considered abandoned and is permanently deleted. |
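As a worked example of these limits, the upper bound on the lifetime of a single workspace can be computed as follows. This is a sketch under two assumptions: every extension grants another full 30 days, and each extension is requested right at the end of the current period. Extending earlier gives a shorter total. The function name <code>max_workspace_days</code> is hypothetical.

```shell
#!/bin/bash
# Upper bound on the number of days a workspace can exist before it expires.
# Arguments: lifetime per allocation or extension in days, number of allowed extensions.
max_workspace_days() {
    local lifetime_days="$1" max_extensions="$2"
    echo $(( lifetime_days * (1 + max_extensions) ))
}

max_workspace_days 30 5   # BinAC 2 values: prints 180
```

After these 180 days at most, the workspace expires and can still be restored during the 14-day keep time.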

|||

In addition to the standard workspace tools, we provide the script |

|||

<syntaxhighlight inline>ws_shelve</syntaxhighlight> for copying a workspace to a project directory. |

|||

<syntaxhighlight inline>ws_shelve</syntaxhighlight> takes two arguments: |

|||

* the name of the workspace to copy |

|||

* the acronym of your project |

|||

This command creates and submits a Slurm job that uses <code>rsync</code> to copy the workspace |

|||

from <code>/pfs/10/work</code> to <code>/pfs/10/project</code>. |

|||

A new directory is created with a timestamp suffix to prevent name collisions. |

|||

<syntaxhighlight> |

|||

# Copy the workspace /pfs/10/work/tu_xyz01-test to /pfs/10/project/bw10a001 |

|||

$ ws_shelve test bw10a001 |

|||

Workspace shelving submitted. |

|||

Job ID: 2058525 |

|||

Logs will be written to: |

|||

/pfs/10/project/bw10a001/.ws_shelve/tu_xyz01/logs |

|||

</syntaxhighlight> |

|||

In this example, the workspace is copied to |

|||

<syntaxhighlight inline>/pfs/10/project/bw10a001/tu_xyz01/test-<timestamp></syntaxhighlight>. |

|||

Work spaces can be generated through the <tt>workspace</tt> tools. This will generate a directory on the parallel storage. |

|||

To create a work space you'll need to supply a name for your work space area and a lifetime in days. |

|||

For more information read the corresponding help, e.g: <tt>ws_allocate -h</tt>. |

|||

Examples: |

|||

{| class="wikitable" |

|||

|- |

|||

!style="width:30%" | Command |

|||

!style="width:70%" | Action |

|||

|- |

|||

|<tt>ws_allocate mywork 30</tt> |

|||

|Allocate a work space named "mywork" for 30 days. |

|||

|- |

|||

|<tt>ws_allocate myotherwork</tt> |

|||

|Allocate a work space named "myotherwork" with maximum lifetime. |

|||

|- |

|||

|<tt>ws_list -a</tt> |

|||

|List all your work spaces. |

|||

|- |

|||

|<tt>ws_find mywork</tt> |

|||

|Get absolute path of work space "mywork". |

|||

|- |

|||

|<tt>ws_extend mywork 30</tt> |

|||

|Extend lifetime of work space mywork by 30 days from now. (Not needed, workspaces on BinAC are not limited). |

|||

|- |

|||

|<tt>ws_release mywork</tt> |

|||

|Manually erase your work space "mywork". Please remove directory content first. |

|||

|- |

|||

|} |

|||

=== Local Disk Space === |

|||

All compute nodes are equipped with a local SSD with 200 GB capacity for job execution. During computation the environment variable <tt>$TMPDIR</tt> points to this local disk space. The data will become unavailable as soon as the job has finished. |

|||

=== SDS@hd === |

=== SDS@hd === |

||

SDS@hd is mounted |

SDS@hd is mounted via NFS on login and compute nodes at <syntaxhighlight inline>/mnt/sds-hd</syntaxhighlight>. |

||

To access your Speichervorhaben, please see the [[SDS@hd/Access/NFS#access_your_data|SDS@hd documentation]]. |

|||

To access your Speichervorhaben, the export to BinAC 2 must first be enabled by the SDS@hd-Team. Please contact [mailto:sds-hd-support@urz.uni-heidelberg.de SDS@hd support] and provide the acronym of your Speichervorhaben, along with a request to enable the export to BinAC 2. |

|||

If you can't see your Speichervorhaben, you can [[BinAC/Support|open a ticket]]. |

|||

Once this has been done, you can access your Speichervorhaben as described in the [https://wiki.bwhpc.de/e/SDS@hd/Access/NFS#Access_your_data SDS documentation]. |

|||

<syntaxhighlight> |

|||

$ kinit $USER |

|||

Password for <user>@BWSERVICES.UNI-HEIDELBERG.DE: |

|||

</syntaxhighlight> |

|||

The Kerberos ticket store is shared across all nodes. Creating a single ticket is sufficient to access your Speichervorhaben on all nodes. |

|||

=== More Details on the Lustre File System === |

|||

[https://www.lustre.org/ Lustre] is a distributed parallel file system. |

|||

* The entire logical volume as presented to the user is formed by multiple physical or local drives. Data is distributed over more than one physical or logical volume/hard drive, single files can be larger than the capacity of a single hard drive. |

|||

* The file system can be mounted from all nodes ("clients") in parallel at the same time for reading and writing. <i>This also means that technically you can write to the same file from two different compute nodes! Usually, this will create an unpredictable mess! Never ever do this unless you know <b>exactly</b> what you are doing!</i> |

|||

* On a single server or client, the bandwidth of multiple network interfaces can be aggregated to increase the throughput ("multi-rail"). |

|||

Lustre works by chopping files into many small parts ("stripes", file objects) which are then stored on the object storage servers. The information which part of the file is stored where on which object storage server, when it was changed last etc. and the entire directory structure is stored on the metadata servers. Think of the entries on the metadata server as being pointers pointing to the actual file objects on the object storage servers. |

|||

A Lustre file system can consist of many metadata servers (MDS) and object storage servers (OSS). |

|||

Each MDS or OSS can again hold one or more so-called object storage targets (OST) or metadata targets (MDT) which can e.g. be simply multiple hard drives. |

|||

The capacity of a Lustre file system can hence be easily scaled by adding more servers. |

|||

==== Useful Lustre Commands ==== |

|||

Commands specific to the Lustre file system are divided into user commands (<code>lfs ...</code>) and administrative commands (<code>lctl ...</code>). On BinAC2, users may only execute user commands, and not even all of those. |

|||

* <code>lfs help <command></code>: Print built-in help for command; Alternative: <code>man lfs <command></code> |

|||

* <code>lfs find</code>: Drop-in replacement for the <code>find</code> command, much faster on Lustre filesystems as it directly talks to the metadata server |

|||

* <code>lfs --list-commands</code>: Print a list of available commands |

|||

==== Moving data between WORK and PROJECT ==== |

|||

<b>!! IMPORTANT !!</b> Calling <code>mv</code> on files will <i>not</i> physically move them between the fast and the slow pool of the file system. Instead, only the file metadata, i.e. the path to the file in the directory tree (data stored on the MDS), will be modified. The stripes of the file on the OSS, however, will remain exactly where they were. The only result will be the confusing situation that you now have metadata entries under <code>/pfs/10/project</code> that still point to WORK OSTs. The reason is that when using <code>mv</code> within the same file system, Lustre only renames the files and makes them available under a different path. The pointers to the file objects on the OSS stay identical. This will only change if you either create a copy of the file at a different path (e.g. with <code>cp</code> or <code>rsync</code>) or if you explicitly instruct Lustre to move the actual file objects to another storage location, e.g. another pool of the same file system. |

|||

<b>Proper ways of moving data between the pools</b></br> |

|||

* Copy the data - which will create new files -, then delete the old files. Example: |

|||

<pre> |

|||

$> cp -r /pfs/10/work/tu_abcde01-my-precious-ws/* /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/. |

|||

$> rm -rf /pfs/10/work/tu_abcde01-my-precious-ws/* |

|||

$> ws_release --delete-data my-precious-ws |

|||

</pre> |

|||

* Alternative to copy: use <code>rsync</code> to copy data between the workspace and the project directories. Example: |

|||

<pre> |

|||

$> rsync -av /pfs/10/work/tu_abcde01-my-precious-ws/simulation/output /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/simulation25/ |

|||

$> rm -rf /pfs/10/work/tu_abcde01-my-precious-ws/* |

|||

$> ws_release --delete-data my-precious-ws |

|||

</pre> |

|||

* If there are many subfolders with similar size, you can use <code>xargs</code> to copy them in parallel: |

|||

<pre> |

|||

$> find . -maxdepth 1 -mindepth 1 -type d -print | xargs -P4 -I{} rsync -aHAXW --inplace --update {} /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/simulation25/ |

|||

</pre> |

|||

will launch four parallel <code>rsync</code> processes at a time, each will copy one of the subdirectories. |

|||

* First move the metadata with <code>mv</code>, then use <code>lfs migrate</code> or the wrapper <code>lfs_migrate</code> to actually migrate the file stripes. This is also a possible resolution if you already <code>mv</code>ed data from <code>work</code> to <code>project</code> or vice versa. |

|||

** <code>lfs migrate</code> is the raw lustre command. It can only operate on one file at a time, but offers access to all options. |

|||

** <code>lfs_migrate</code> is a versatile wrapper script that can work on single files or recursively on entire directories. If available, it will try to use <code>lfs migrate</code>, otherwise it will fall back to <code>rsync</code> (see <code>lfs_migrate --help</code> for all options.)</br> |

|||

Example with <code>lfs migrate</code>: |

|||

<pre> |

|||

$> mv /pfs/10/work/tu_abcde01-my-precious-ws/* /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/. |

|||

$> cd /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/. |

|||

$> lfs find . -type f --pool work -0 | xargs -0 lfs migrate --pool project # find all files whose file objects are on the work pool and migrate the objects to the project pool |

|||

$> ws_release --delete-data my-precious-ws |

|||

</pre> |

|||

Example with <code>lfs_migrate</code>: |

|||

<pre> |

|||

$> mv /pfs/10/work/tu_abcde01-my-precious-ws/* /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/. |

|||

$> cd /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/. |

|||

$> lfs_migrate --yes -q -p project * # migrate all file objects in the current directory to the project pool, be quiet (-q) and do not ask for confirmation (--yes) |

|||

$> ws_release --delete-data my-precious-ws |

|||

</pre> |

|||

Both migration commands can also be combined with options to restripe the files during migration, i.e. you can also change the number of OSTs the file is striped over, the size of a single stripe, etc.</br> |

|||

<b>Attention!</b> Both <code>lfs migrate</code> and <code>lfs_migrate</code> will <i>not</i> change the path of the file(s), you must also <code>mv</code> them! If used without <code>mv</code>, the files will still belong to the workspace although their file object stripes are now on the <code>project</code> pool and a subsequent <code>rm</code> in the workspace will wipe them. |

|||

All of the above procedures may take a considerable amount of time depending on the amount of data, so it might be advisable to execute them in a terminal multiplexer like <code>screen</code> or <code>tmux</code> or wrap them into small SLURM jobs with <code>sbatch --wrap="<command>"</code>. |
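The copy-then-delete procedure can be made safer by verifying the copy before anything is removed. The following is a minimal sketch using only standard tools. The function name <code>copy_verify_delete</code> is hypothetical, and the sketch assumes that the destination directory is new or empty, because extra files in the destination are reported as a difference.

```shell
#!/bin/bash
# Hypothetical helper: copy a directory tree, verify the copy, then empty the source.
# The source is only emptied if the comparison finds no differences.
copy_verify_delete() {
    local src="$1" dst="$2"
    mkdir -p "$dst" || return 1
    # cp creates new files, so the new file objects are placed according to
    # the defaults of the destination directory.
    cp -a "$src"/. "$dst"/ || return 1
    # Compare both trees byte by byte before deleting anything.
    if ! diff -r "$src" "$dst" > /dev/null; then
        echo "verification failed, source kept" >&2
        return 1
    fi
    # Remove the contents but keep the source directory itself.
    find "$src" -mindepth 1 -delete
}
```

The source directory itself is kept, so a workspace can still be released with <code>ws_release</code> afterwards.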

|||

<b>Question</b>:</br> I have completely lost track. How do I find out where my files are located? |

|||

<b>Answer</b>:</br> |

|||

* Use <code>lfs find</code> to find files on a specific pool. Example: |

|||

<pre> |

|||

$> lfs find . --pool project # recursively find all files in the current directory whose file objects are on the "project" pool |

|||

</pre> |

|||

* Use <code>lfs getstripe</code> to query the striping pattern and the pool (also works recursively if called with a directory). Example: |

|||

<pre> |

|||

$> lfs getstripe parameter.h |

|||

parameter.h |

|||

lmm_stripe_count: 1 |

|||

lmm_stripe_size: 1048576 |

|||

lmm_pattern: raid0 |

|||

lmm_layout_gen: 1 |

|||

lmm_stripe_offset: 44 |

|||

lmm_pool: project |

|||

obdidx objid objid group |

|||

44 7991938 0x79f282 0xd80000400 |

|||

</pre> |

|||

shows that the file is striped over OST 44 (obdidx) which belongs to pool project (lmm_pool). |
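For scripting, the pool name can be extracted from this output. The following is a sketch that assumes the output format shown above, with a line starting with <code>lmm_pool:</code>. The function name <code>pool_from_getstripe</code> is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `lfs getstripe <file>` output on stdin and print
# the pool name. Prints nothing if the output contains no lmm_pool line.
pool_from_getstripe() {
    awk '$1 == "lmm_pool:" { print $2 }'
}

# On the cluster: lfs getstripe parameter.h | pool_from_getstripe
```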

|||

<b>Why paths and storage pools should match:</b></br> |

|||

There are four different possible scenarios with two subdirectories and two pools: |

|||

* File path in <code>/pfs/10/work</code>, file objects on pool <code>work</code>: <b>good.</b> |

|||

* File path in <code>/pfs/10/project</code>, file objects on pool <code>project</code>: <b>good.</b> |

|||

* File path in <code>/pfs/10/project</code>, file objects on pool <code>work</code>: <b>bad</b>. This will "leak" storage from the fast pool, making it unavailable for workspaces. |

|||

* File path in <code>/pfs/10/work</code>, file objects on pool <code>project</code>: <b>bad</b>. Access will be slow, and if (volatile) workspaces are purged, data residing on <code>project</code> will (voluntarily or involuntarily) be deleted. |

|||

The latter two situations may arise from <code>mv</code>ing data between workspaces and project folders. |
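The four scenarios can be expressed as a small check. This is only an illustrative sketch: the function name <code>check_pool_match</code> is hypothetical, and the pool name has to be supplied by you, e.g. taken from the <code>lmm_pool</code> line of <code>lfs getstripe</code>.

```shell
#!/bin/bash
# Hypothetical helper: classify a (path, pool) pair according to the four scenarios.
# Prints "good" if the path prefix and the pool match, "bad" if they do not,
# and "unknown" for paths outside /pfs/10/work and /pfs/10/project.
check_pool_match() {
    local path="$1" pool="$2" expected
    case "$path" in
        /pfs/10/work/*)    expected="work" ;;
        /pfs/10/project/*) expected="project" ;;
        *) echo "unknown"; return 2 ;;
    esac
    if [ "$pool" = "$expected" ]; then
        echo "good"
    else
        echo "bad"
    fi
}
```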

|||

==== More on data striping and how to influence it ==== |

|||

<b>!! The default striping patterns on BinAC2 are set for good reasons and should not be changed lightly!</br> Doing so wrongly will in the best case only hurt your performance.</br> In the worst case, it will also hurt all other users and endanger the stability of the cluster.</br> Please talk to the admins first if you think that you need a non-default pattern.</b> |

|||

* Reading striping patterns with <code>lfs getstripe</code> |

|||

* Setting striping patterns with <code>lfs setstripe</code> for new files and directories |

|||

* Restriping files with <code>lfs migrate</code> |

|||

* Progressive File Layout |

|||

==== Architecture of BinAC2's Lustre File System ==== |

|||

<b>Metadata Servers:</b> |

|||

* 2 metadata servers |

|||

* 1 MDT per server |

|||

* MDT Capacity: 31TB, hardware RAID6 on NVMe drives (flash memory/SSD) |

|||

* Networking: 2x 100 GbE, 2x HDR-100 InfiniBand |

|||

<b>Object storage servers:</b> |

|||

* 8 object storage servers |

|||

* 2 fast OSTs per server |

|||

** 70 TB per OST, software RAID (raid-z2, 10+2 redundancy) |

|||

** NVMe drives, directly attached to the PCIe bus |

|||

* 8 slow OSTs per server |

|||

** 143 TB per OST, hardware RAID (RAID6, 8+2 redundancy) |

|||

** externally attached via SAS |

|||

* Networking: 2x 100 GbE, 2x HDR-100 InfiniBand |

|||

* All fast OSTs are assigned to the pool <code>work</code> |

|||

* All slow OSTs are assigned to the pool <code>project</code> |

|||

* All files that are created under <code>/pfs/10/work</code> are by default stored on the fast pool |

|||

* All files that are created under <code>/pfs/10/project</code> are by default stored on the slow pool |

|||

* Metadata is distributed over both MDTs. All subdirectories of a directory (workspace or project folder) are typically on the same MDT. Directory striping/placement on MDTs cannot be influenced by users. |

|||

* Default OST striping: Stripes have size 1 MiB. Files are striped over one OST if possible, i.e. all stripes of a file are on the same OST. New files are created on the most empty OST. |

|||

Internally, the slow and the fast pool belong to the same Lustre file system and namespace. |

|||

More reading: |

|||

* [https://doc.lustre.org/lustre_manual.xhtml The Lustre 2.X Manual] ([http://doc.lustre.org/lustre_manual.pdf PDF]) |

|||

* [https://wiki.lustre.org/Main_Page The Lustre Wiki] |

|||

Latest revision as of 12:45, 21 January 2026

Hardware and Architecture

The bwForCluster BinAC 2 supports researchers from the broader fields of Bioinformatics, Medical Informatics, Astrophysics, Geosciences and Pharmacy.

Operating System and Software

- Operating System: Rocky Linux 9.6

- Queuing System: Slurm (see BinAC2/Slurm for help)

- (Scientific) Libraries and Software: Environment Modules

Compute Nodes

BinAC 2 offers compute nodes, high-mem nodes, and three types of GPU nodes.

- 180 compute nodes

- 16 SMP nodes

- 32 GPU nodes (2xA30)

- 8 GPU nodes (4xA100)

- 4 GPU nodes (4xH200)

- plus several special purpose nodes for login, interactive jobs, etc.

Compute node specification:

| | Standard | High-Mem | GPU (A30) | GPU (A100) | GPU (H200) |

|---|---|---|---|---|---|

| Quantity | 168 / 12 | 14 / 2 | 32 | 8 | 4 |

| Processors | 2 x AMD EPYC Milan 7543 / 2 x AMD EPYC Milan 75F3 | 2 x AMD EPYC Milan 7443 / 2 x AMD EPYC Milan 75F3 | 2 x AMD EPYC Milan 7543 | 2 x AMD EPYC Milan 7543 | 2 x AMD EPYC Turin 9555 |

| Processor Base Frequency (GHz) | 2.80 / 2.95 | 2.85 / 2.95 | 2.80 | 2.80 | 3.20 |

| Number of Physical Cores / Hyperthreads | 64 / 128 | 48 / 96 // 64 / 128 | 64 / 128 | 64 / 128 | 128 / 256 |

| Working Memory (GB) | 512 | 2048 | 512 | 512 | 1536 |

| Local Disk (GiB) | 450 (NVMe-SSD) | 14000 (NVMe-SSD) | 450 (NVMe-SSD) | 14000 (NVMe-SSD) | 28000 (NVMe-SSD) |

| Interconnect | HDR 100 IB (84 nodes) / 100GbE (96 nodes) | 100GbE | 100GbE | 100GbE | HDR 200 IB + 100GbE |

| Coprocessors | - | - | 2 x NVIDIA A30 (24 GB ECC HBM2, NVLink) | 4 x NVIDIA A100 (80 GB ECC HBM2e) | 4 x NVIDIA H200 NVL (141 GB ECC HBM3e, NVLink) |

Network

The compute nodes and the parallel file system are connected via 100 Gigabit Ethernet (100GbE).

In contrast to BinAC 1, not all compute nodes are connected via InfiniBand, but 84 standard compute nodes are connected via HDR InfiniBand (100 Gb/s). To get your jobs onto the InfiniBand nodes, submit them with --constraint=ib.

Question:

OpenMPI throws the following warning:

--------------------------------------------------------------------------
No OpenFabrics connection schemes reported that they were able to be used on a specific port. As such, the openib BTL (OpenFabrics support) will be disabled for this port.
  Local host: node1-083
  Local device: mlx5_0
  Local port: 1
  CPCs attempted: rdmacm, udcm
--------------------------------------------------------------------------
[node1-083:2137377] 3 more processes have sent help message help-mpi-btl-openib-cpc-base.txt / no cpcs for port
[node1-083:2137377] Set MCA parameter "orte_base_help_aggregate" to 0 to see all help / error messages

What should I do?

Answer:

BinAC2 has two (almost) separate networks, a 100GbE network and an InfiniBand network, each connecting a subset of the nodes. The two networks require different cables and switches.

Concerning the network cards for the nodes, however, there exist VPI network cards which can be configured to work in either mode (https://docs.nvidia.com/networking/display/connectx6vpi/specifications#src-2487215234_Specifications-MCX653105A-ECATSpecifications).

OpenMPI can use a number of layers for transferring data and messages between processes. When it ramps up, it will test all means of communication that were configured during compilation and then tries to figure out the fastest path between all processes.

If OpenMPI encounters such a VPI card, it will first try to establish a Remote Direct Memory Access (RDMA) communication channel using the OpenFabrics (OFI) layer.

On nodes with 100Gb ethernet, this fails as there is no RDMA protocol configured. OpenMPI will fall back to TCP transport but not without complaints.

Workaround:

For single-node jobs or on regular compute nodes, A30 and A100 GPU nodes: Add the lines

export OMPI_MCA_btl="^ofi,openib"

export OMPI_MCA_mtl="^ofi"

to your job script to disable the OFI transport layer. If you need high-bandwidth, low-latency transport between all processes on all nodes, switch to the Infiniband partition (#SBATCH --constraint=ib). Do not turn off the OFI layer on Infiniband nodes as this will be the best choice between nodes!
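The workaround can be wrapped in a small helper for job scripts. This is only a minimal sketch: the function name configure_ompi_transport and the labels ethernet and ib are hypothetical and are not provided by BinAC 2 or OpenMPI; only the two OMPI_MCA_* settings are taken from the text above.

```shell
# Hypothetical helper (not part of BinAC 2 or OpenMPI): select OpenMPI
# transport settings depending on the network of the nodes a job runs on.
configure_ompi_transport() {
    case "$1" in
        ethernet)
            # 100GbE nodes: disable the OFI/openib layers to avoid the warning.
            export OMPI_MCA_btl="^ofi,openib"
            export OMPI_MCA_mtl="^ofi"
            ;;
        ib)
            # InfiniBand nodes: keep OFI enabled by clearing any exclusions.
            unset OMPI_MCA_btl OMPI_MCA_mtl
            ;;
        *)
            echo "usage: configure_ompi_transport ethernet|ib" >&2
            return 1
            ;;
    esac
}
```

In a job script submitted without --constraint=ib, one would call configure_ompi_transport ethernet before starting the MPI program; with --constraint=ib, configure_ompi_transport ib.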

File Systems

The bwForCluster BinAC 2 consists of two separate storage systems, one for the user's home directory $HOME and one serving as a project/work space. The home directory is limited in space and parallel access but offers snapshots of your files and backup.

The project/work is a parallel file system (PFS) which offers fast and parallel file access and a bigger capacity than the home directory. It is mounted at /pfs/10 on the login and compute nodes. This storage is based on Lustre and can be accessed in parallel from many nodes. The PFS contains the project and the work directory. Each compute project has its own directory at /pfs/10/project that is accessible for all members of the compute project.

Each user can create workspaces under /pfs/10/work using the workspace tools. These directories are only accessible for the user who created the workspace.

Additionally, each compute node provides high-speed temporary storage on a node-local solid-state disk (SSD), accessible via the $TMPDIR environment variable.

| $HOME | project | work | $TMPDIR | |

|---|---|---|---|---|

| Visibility | global | global | global | node local |

| Lifetime | permanent | permanent | work space lifetime (max. 30 days, max. 5 extensions) | batch job walltime |

| Capacity | - | 8.1 PB | 1000 TB | 480 GB (compute nodes); 7.7 TB (GPU-A30 nodes); 16 TB (GPU-A100 and SMP nodes); 31 TB (GPU-H200 nodes) |

| File System Type | NFS | Lustre | Lustre | XFS |

| Speed (read) | ≈ 1 GB/s, shared by all nodes | max. 12 GB/s | ≈ 145 GB/s peak, aggregated over 56 nodes, ideal striping | ≈ 3 GB/s (compute) / ≈ 5 GB/s (GPU-A30) / ≈ 26 GB/s (GPU-A100 + SMP) / ≈ 42 GB/s (GPU-H200) per node |

| Quotas | 40 GB per user | not yet, maybe in the future | none | none |

| Backup | yes (nightly) | no | no | no |

global: all nodes access the same file system

local: each node has its own file system

permanent: files are stored permanently

batch job walltime: files are removed at the end of the batch job

Please note that due to the large capacity of work and project and due to frequent file changes on these file systems, no backup can be provided.

Home

Home directories are meant for permanent storage of files that are used continually, such as source code, configuration files, and executable programs; the content of home directories is backed up on a regular basis. Because the backup space is limited, we enforce a quota of 40 GB on the home directories.

NOTE: Compute jobs on nodes must not write temporary data to $HOME. Instead, they should use the local $TMPDIR directory for I/O-heavy use cases and workspaces for less I/O-intensive multi-node jobs.

Project

The data is stored on HDDs. The primary focus of /pfs/10/project is pure capacity, not speed.

Every project gets a dedicated directory located at:

/pfs/10/project/<project_id>/

You can check the project(s) you are a member of via:

# id $USER | grep -o 'bw[^)]*'

bw16f003

In this case, your project directory would be:

/pfs/10/project/bw16f003/
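If you are a member of several projects, the lookup can be turned into a list of directories. The following is a minimal sketch; the function name project_dirs is hypothetical, and it simply reuses the grep pattern shown above and the /pfs/10/project/ prefix.

```shell
# Hypothetical helper: read the output of "id" on stdin and print the
# project directory for every project acronym found in it.
project_dirs() {
    grep -o 'bw[^)]*' | while IFS= read -r project_id; do
        printf '/pfs/10/project/%s/\n' "$project_id"
    done
}
```

A typical call would be id "$USER" | project_dirs, which prints one directory per line.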

Check our data organization guide for methods to organize data inside the project directory.

Workspaces

Data on the fast storage pool at /pfs/10/work is stored on SSDs.

The primary focus of this filesystem is speed, not capacity.

Data on /pfs/10/work is organized into so-called workspaces.

A workspace is a directory that you create and manage using the workspace tools.

Please always remember that workspaces are intended solely for temporary work data and that there is no backup of data in the workspaces.

Workspace tools documentation

You can find more information about the workspace tools in the general documentation:

BinAC 2 workspace policies and limits

Some values and behaviors described in the general workspace documentation do not apply to BinAC 2.

On BinAC 2, workspaces:

- have a lifetime of 30 days

- can be extended up to five times

Because the capacity of /pfs/10/work is limited, workspace expiration is enforced.

You will receive automated email reminders one week before a workspace expires.

If a workspace’s lifetime expires (i.e. is not extended), it is moved to the special directory /pfs/10/work/.removed.

Expired workspaces can be restored for a keep time of 14 days.

After this period, expired workspaces are moved to a special location in the project filesystem.

After at least six months, a workspace is considered abandoned and is permanently deleted.
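The resulting time limits can be worked out with a little shell arithmetic. This sketch assumes that every extension adds another full 30-day lifetime, which the policy above does not state explicitly; the variable names are illustrative.

```shell
# Values taken from the policy described above.
lifetime_days=30      # lifetime of a workspace
max_extensions=5      # number of allowed extensions
keep_days=14          # restore window after expiration

# Assumption: each extension adds another full lifetime.
max_active_days=$(( lifetime_days * (1 + max_extensions) ))
restorable_until_day=$(( max_active_days + keep_days ))

echo "maximum active lifetime: ${max_active_days} days"
echo "restorable until day:    ${restorable_until_day}"
```

Under that assumption, a workspace can stay active for at most 180 days and can be restored until day 194 after its creation.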

In addition to the standard workspace tools, we provide the script ws_shelve for copying a workspace to a project directory.

ws_shelve takes two arguments:

- the name of the workspace to copy

- the acronym of your project

This command creates and submits a Slurm job that uses rsync to copy the workspace from /pfs/10/work to /pfs/10/project.

A new directory is created with a timestamp suffix to prevent name collisions.

# Copy the workspace /pfs/10/work/tu_xyz01-test to /pfs/10/project/bw10a001

$ ws_shelve test bw10a001

Workspace shelving submitted.

Job ID: 2058525

Logs will be written to:

/pfs/10/project/bw10a001/.ws_shelve/tu_xyz01/logs

In this example, the workspace is copied to /pfs/10/project/bw10a001/tu_xyz01/test-<timestamp>.

SDS@hd

SDS@hd is mounted via NFS on login and compute nodes at /mnt/sds-hd.

To access your Speichervorhaben, the export to BinAC 2 must first be enabled by the SDS@hd-Team. Please contact SDS@hd support and provide the acronym of your Speichervorhaben, along with a request to enable the export to BinAC 2.

Once this has been done, you can access your Speichervorhaben as described in the SDS documentation.

$ kinit $USER

Password for <user>@BWSERVICES.UNI-HEIDELBERG.DE:

The Kerberos ticket store is shared across all nodes. Creating a single ticket is sufficient to access your Speichervorhaben on all nodes.

More Details on the Lustre File System

Lustre is a distributed parallel file system.

- The entire logical volume as presented to the user is formed by multiple physical or local drives. Data is distributed over more than one physical or logical volume/hard drive, so single files can be larger than the capacity of a single hard drive.

- The file system can be mounted from all nodes ("clients") in parallel at the same time for reading and writing. This also means that technically you can write to the same file from two different compute nodes! Usually, this will create an unpredictable mess! Never ever do this unless you know exactly what you are doing!

- On a single server or client, the bandwidth of multiple network interfaces can be aggregated to increase the throughput ("multi-rail").

Lustre works by chopping files into many small parts ("stripes", file objects) which are then stored on the object storage servers. The information which part of the file is stored where on which object storage server, when it was changed last etc. and the entire directory structure is stored on the metadata servers. Think of the entries on the metadata server as being pointers pointing to the actual file objects on the object storage servers. A Lustre file system can consist of many metadata servers (MDS) and object storage servers (OSS). Each MDS or OSS can again hold one or more so-called object storage targets (OST) or metadata targets (MDT) which can e.g. be simply multiple hard drives. The capacity of a Lustre file system can hence be easily scaled by adding more servers.

Useful Lustre Commands

Commands specific to the Lustre file system are divided into user commands (lfs ...) and administrative commands (lctl ...). On BinAC2, users may only execute user commands, and not all of those.

- lfs help <command>: Print built-in help for command; Alternative: man lfs <command>

- lfs find: Drop-in replacement for the find command, much faster on Lustre file systems as it talks directly to the metadata server

- lfs --list-commands: Print a list of available commands

Moving data between WORK and PROJECT

!! IMPORTANT !! Calling mv on files will not physically move them between the fast and the slow pool of the file system. Instead, only the file metadata, i.e. the path to the file in the directory tree (data stored on the MDS), will be modified. The stripes of the file on the OSS, however, will remain exactly where they were. The only result will be the confusing situation that you now have metadata entries under /pfs/10/project that still point to WORK OSTs. The reason is that when mv is used within the same file system, Lustre only renames the files and makes them available under a different path. The pointers to the file objects on the OSS stay identical. This will only change if you either create a copy of the file at a different path (e.g. with cp or rsync) or explicitly instruct Lustre to move the actual file objects to another storage location, e.g. another pool of the same file system.

Proper ways of moving data between the pools

- Copy the data - which will create new files -, then delete the old files. Example:

$> cp -r /pfs/10/work/tu_abcde01-my-precious-ws/* /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/.

$> rm -rf /pfs/10/work/tu_abcde01-my-precious-ws/*

$> ws_release --delete-data my-precious-ws

- Alternative to copy: use rsync to copy data between the workspace and the project directories. Example:

$> rsync -av /pfs/10/work/tu_abcde01-my-precious-ws/simulation/output /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/simulation25/

$> rm -rf /pfs/10/work/tu_abcde01-my-precious-ws/*

$> ws_release --delete-data my-precious-ws

- If there are many subfolders of similar size, you can use xargs to copy them in parallel:

$> find . -maxdepth 1 -mindepth 1 -type d -print | xargs -P4 -I{} rsync -aHAXW --inplace --update {} /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/simulation25/

This launches four parallel rsync processes at a time, each copying one of the subdirectories.

- First move the metadata with mv, then use lfs migrate or the wrapper lfs_migrate to actually migrate the file stripes. This is also a possible resolution if you have already moved data from work to project or vice versa with mv. lfs migrate is the raw Lustre command. It can only operate on one file at a time, but offers access to all options. lfs_migrate is a versatile wrapper script that can work on single files or recursively on entire directories. If available, it will try to use lfs migrate, otherwise it will fall back to rsync (see lfs_migrate --help for all options).

Example with lfs migrate:

$> mv /pfs/10/work/tu_abcde01-my-precious-ws/* /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/.

$> cd /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/.

$> lfs find . -type f --pool work -0 | xargs -0 lfs migrate --pool project # find all files whose file objects are on the work pool and migrate the objects to the project pool

$> ws_release --delete-data my-precious-ws

Example with lfs_migrate:

$> mv /pfs/10/work/tu_abcde01-my-precious-ws/* /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/.

$> cd /pfs/10/project/bw10a001/tu_abcde01/my-precious-research/.

$> lfs_migrate --yes -q -p project * # migrate all file objects in the current directory to the project pool, be quiet (-q) and do not ask for confirmation (--yes)

$> ws_release --delete-data my-precious-ws

Both migration commands can also be combined with options to restripe the files during migration, i.e. you can also change the number of OSTs the file is striped over, the size of a single stripe etc.

Attention! Neither lfs migrate nor lfs_migrate will change the path of the file(s); you must also mv them! If used without mv, the files will still belong to the workspace although their file object stripes are now on the project pool, and a subsequent rm in the workspace will wipe them.

All of the above procedures may take a considerable amount of time depending on the amount of data, so it might be advisable to execute them in a terminal multiplexer like screen or tmux or wrap them into small SLURM jobs with sbatch --wrap="<command>".

Question:

I have completely lost track. How do I find out where my files are located?

Answer:

- Use lfs find to find files on a specific pool. Example:

$> lfs find . --pool project # recursively find all files in the current directory whose file objects are on the "project" pool

- Use lfs getstripe to query the striping pattern and the pool (also works recursively if called with a directory). Example:

$> lfs getstripe parameter.h

parameter.h

lmm_stripe_count: 1

lmm_stripe_size: 1048576

lmm_pattern: raid0

lmm_layout_gen: 1

lmm_stripe_offset: 44

lmm_pool: project

obdidx objid objid group

44 7991938 0x79f282 0xd80000400

shows that the file is striped over OST 44 (obdidx) which belongs to pool project (lmm_pool).

Why paths and storage pools should match:

There are four different possible scenarios with two subdirectories and two pools:

- File path in /pfs/10/work, file objects on pool work: good.

- File path in /pfs/10/project, file objects on pool project: good.

- File path in /pfs/10/project, file objects on pool work: bad. This will "leak" storage from the fast pool, making it unavailable for workspaces.

- File path in /pfs/10/work, file objects on pool project: bad. Access will be slow, and if (volatile) workspaces are purged, data residing on project will (voluntarily or involuntarily) be deleted.

The latter two situations may arise from moving data between workspaces and project folders with mv.
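The four scenarios can be checked for a single file by comparing its path prefix with the lmm_pool value reported by lfs getstripe. The following is a minimal sketch: the function names expected_pool, pool_from_getstripe and check_pool are hypothetical, and the parsing assumes the plain (non-composite) output format shown in the example above, with exactly one lmm_pool line per file.

```shell
# Hypothetical helpers; none of these functions is part of Lustre or BinAC 2.

# Print the pool a path is expected to live on, based on its prefix.
expected_pool() {
    case "$1" in
        /pfs/10/work/*)    echo work ;;
        /pfs/10/project/*) echo project ;;
        *)                 return 1 ;;
    esac
}

# Read "lfs getstripe <file>" output on stdin and print the lmm_pool value.
pool_from_getstripe() {
    awk '$1 == "lmm_pool:" { print $2 }'
}

# Report whether the path of a regular file and its storage pool match.
check_pool() {
    path=$1
    want=$(expected_pool "$path") || { echo "unknown: $path"; return 2; }
    have=$(lfs getstripe "$path" | pool_from_getstripe)
    if [ "$want" = "$have" ]; then
        echo "good: $path"
    else
        echo "bad: $path (expected pool $want, found $have)"
        return 1
    fi
}
```

check_pool expects the absolute path of a regular file. For whole directory trees, the lfs find --pool call shown earlier is the simpler tool.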

More on data striping and how to influence it

!! The default striping patterns on BinAC2 are set for good reasons and should not be changed lightly!

Doing so wrongly will in the best case only hurt your performance.

In the worst case, it will also hurt all other users and endanger the stability of the cluster.

Please talk to the admins first if you think that you need a non-default pattern.

- Reading striping patterns with lfs getstripe

- Setting striping patterns with lfs setstripe for new files and directories

- Restriping files with lfs migrate

- Progressive File Layout

Architecture of BinAC2's Lustre File System

Metadata Servers:

- 2 metadata servers

- 1 MDT per server

- MDT Capacity: 31TB, hardware RAID6 on NVMe drives (flash memory/SSD)

- Networking: 2x 100 GbE, 2x HDR-100 InfiniBand

Object storage servers:

- 8 object storage servers

- 2 fast OSTs per server

  - 70 TB per OST, software RAID (raid-z2, 10+2 redundancy)

  - NVMe drives, directly attached to the PCIe bus

- 8 slow OSTs per server

  - 143 TB per OST, hardware RAID (RAID6, 8+2 redundancy)

  - externally attached via SAS

- Networking: 2x 100 GbE, 2x HDR-100 InfiniBand

- All fast OSTs are assigned to the pool work

- All slow OSTs are assigned to the pool project

- All files that are created under /pfs/10/work are by default stored on the fast pool

- All files that are created under /pfs/10/project are by default stored on the slow pool

- Metadata is distributed over both MDTs. All subdirectories of a directory (workspace or project folder) are typically on the same MDT. Directory striping/placement on MDTs cannot be influenced by users.

- Default OST striping: Stripes have size 1 MiB. Files are striped over one OST if possible, i.e. all stripes of a file are on the same OST. New files are created on the most empty OST.
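To illustrate what the default striping means in numbers, the following sketch computes how many 1 MiB stripes a file occupies and which object of a raid0 layout holds a given byte offset. The function names are hypothetical, and the round-robin formula describes the general raid0 pattern; with the default stripe count of 1, every offset maps to the same single OST.

```shell
stripe_size=1048576   # 1 MiB, the default stripe size stated above

# Number of stripe-sized chunks needed for a file of the given size in bytes.
chunks_for_size() {
    echo $(( ($1 + stripe_size - 1) / stripe_size ))
}

# Index (0-based, within the file's layout) of the OST object that holds a
# byte offset, for a raid0 layout with the given stripe count.
object_index_for_offset() {
    offset=$1
    stripe_count=$2
    echo $(( (offset / stripe_size) % stripe_count ))
}

chunks_for_size 5000000            # a 5 MB file needs 5 chunks
object_index_for_offset 3145728 1  # stripe count 1: always object 0
object_index_for_offset 3145728 4  # stripe count 4: object 3
```

The last call only illustrates a non-default layout; as stated above, please talk to the admins before actually using one.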

Internally, the slow and the fast pool belong to the same Lustre file system and namespace.

More reading: