BwUniCluster3.0/Data Migration Guide

Revision as of 15:32, 6 February 2025

Summary of changes

bwUniCluster 3.0 is located on the North Campus to meet the requirements of energy-efficient and environmentally friendly HPC operation by using the hot water cooling available there. bwUniCluster 3.0 has a new file system. The most important changes compared to bwUniCluster 2.0 are listed below.

The new hostname for login via Secure Shell (SSH) is:

uc3.scc.kit.edu

Hardware

The new bwUniCluster 3.0 features more than 340 CPU nodes and 28 GPU nodes. Most of the CPU nodes originate from the bwUniCluster 2.0 Extension and are equipped with the well-known Intel Xeon Platinum 8358 processors (Ice Lake) with 64 cores per dual-socket node. The new CPU partition consists of 70 AMD EPYC 9454 nodes with 96 cores per dual-socket node. The GPU nodes feature NVIDIA A100 and H100 accelerators; one node features the AMD MI300A APU.

The node interconnect for the new partitions is InfiniBand 2x/4x NDR200, which is expected to provide even better parallel performance. For details please refer to Hardware and Architecture.

Software

The operating system on each node is Red Hat Enterprise Linux (RHEL) 9.4.

Operations

Dedicated single-node job queues no longer exist. Compute resources can therefore be allocated with a minimum of one CPU core or one GPU, regardless of the hardware partition. For details, please refer to Queues on bwUniCluster 3.0.
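As a rough illustration of the smallest possible allocation, a job script requesting a single CPU core might look like the sketch below. The partition name cpu is taken from the sbatch example in the help text of the migration script; the time and memory values and the report_allocation helper are assumptions made for illustration, not site recommendations.

```shell
#!/bin/bash
# Partition name as used in the sbatch example of the migration script's help text.
#SBATCH --partition=cpu
# One task on a single CPU core.
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=1
# Illustrative wall-clock limit and memory request.
#SBATCH --time=00:10:00
#SBATCH --mem=2gb

# Print what Slurm granted; falls back to placeholders outside a job.
report_allocation() {
    echo "job=${SLURM_JOB_ID:-none} cpus=${SLURM_CPUS_PER_TASK:-unknown}"
}

report_allocation
```

Submitted with sbatch, the script prints the job ID and the number of cores per task that Slurm assigned.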

Policy

- New Quotas: For HOME, the storage quota is 450GB and 4.5 million files.

- Username for KIT-users:

- Access with KIT Student Account:

Data Migration

bwUniCluster 3.0 features a completely new file system, and there is no automatic migration of user data! Users have to actively migrate their data to the new file system. For a limited time (approx. 3 months after commissioning), however, the old file system remains in operation and is mounted on the new system. This makes the data transfer relatively quick and easy. Please be aware of the new, slightly more stringent quota policies! Before any data is copied, check whether the new quotas are sufficient to hold the old data. If there are quota issues, users should review their data lifecycle management.

Assisted data migration

To facilitate the transfer of data between the old and new HOME directories and workspaces, we provide a script that guides you through the copy process or even automates the transfer:

migrate_data_uc2_uc3.sh

In order to mitigate the effects of the quota changes, the script first performs a quota check. If a quota check detects that the storage capacity or the number of inodes has been exceeded, the program terminates with an error message.

If the quota check passes, the data migration command is displayed but not executed. For the fearless, the -x flag initiates the copy process itself.

The script can automate the data transfer to the new HOME directory. If you also intend to transfer data that resides in workspaces, the script can automate this, too. However, the target workspaces on the new system first have to be set up manually (cf. Migration of Workspaces).

Options of the script

- -h provides detailed information about the script's usage

- -v provides verbose output, including the quota checks

- -x executes the migration if the quota checks did not fail

If the data migration fails due to a time limit or if you do not intend to run the data transfer interactively, the help message (-h) provides an example of how to run the data transfer as a batch job. This even accelerates the copy process due to the exclusive usage of a compute node. Alternatively, rsync can be run repeatedly, as it performs incremental synchronizations: data that has already been copied is not copied a second time, and only files that are missing from the target directory or have changed are transferred.
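Because repeated rsync runs only transfer what is still missing, an interrupted copy can simply be restarted. The loop below is a minimal sketch of that idea and is not part of the migration script; the helper name retry and the attempt limit are assumptions made for illustration.

```shell
# Re-run a command until it succeeds or the attempt budget is used up.
# Usage: retry <max_attempts> <command> [args...]
retry() {
    max_attempts=$1
    shift
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # Stop as soon as the command exits with status 0.
        if "$@"; then
            return 0
        fi
        echo "attempt $attempt of $max_attempts failed" >&2
        attempt=$((attempt + 1))
    done
    return 1
}
```

Calling retry 3 followed by the rsync command printed by migrate_data_uc2_uc3.sh would restart the transfer up to three times; each run skips the files that have already arrived.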

Attention

We explicitly ask users NOT to migrate their old dot files and dot directories, which may contain settings that are incompatible with the new system (.bashrc, .config/, ...). The script therefore excludes these files from migration. We recommend that you start with a new set of default configuration files and adapt them to your needs as required. Please see the section Migration of Software and Settings.

Examples

- Getting the help text

migrate_data_uc2_uc3.sh -h

migrate_data_uc2_uc3.sh [-h|--help] [-d|--debug] [-x|--execute] [-f|--force] [-v|--verbose] [-w|--workspace <name>]

Without options this script will print the recommended rsync command which can be used to copy data

from the home directory of bwUniCluster 2.0 to bwUniCluster3.0. You can either select different

rsync options (see "man rsync" for explanations) or start the script again with the option "-x"

in oder to execute the rsync command. Note that the recommended options exclude files and directories

on the old home directory path which start with a dot, for example ''.bashrc''. This is done because

these files and directories typically include configuration and cache data which is probably different

on the new system. If these dot files and directories include data which is still needed you should

migrate it manually.

The script can also be used to migrate the data of a workspace, see option "-w". Here the option

"-x" is only alloed if the old and the new workspace has the same name. If you want to modify the

name of the old workspace just use the printed rsync command and select an appropriate target directory.

Note that you have to create the new workspace beforehand.

You should start the script inside a batch job since limits on the login node will otherwise probably

abort the actions and since the login node will otherwise be overloaded.

Example for starting the batch job:

sbatch -p cpu -N 1 -t 24:00:00 --mem=30gb /pfs/data6/scripts/migrate_data_uc2_uc3.sh -x

Options:

-d|--debug Provide debug messages.

-f|--force Continue if capacity or inode usage on old file system are higher than

quota limits on new file system.

-h|--help This help.

-x|--execute Execute rsync command. If this option is not set only print rsync command to terminal.

-v|--verbose Provide verbose messages.

-w|--workspace <name> Do rsync for the workspace <name>. If this option is not set do it for your home directory.

- Give verbose hints (quota OK)

migrate_data_uc2_uc3.sh -v

Doing the actions for the home directoy.

Checking if capacity and inode usage on the old home file system is lower than the limits on the new file system.

✅ Quota checks for capacity and inode usage of the home directoy have passed.

Recommended command line for the rsync command:

rsync -x --numeric-ids -S -rlptoD -H -A --exclude='/.*' /pfs/data5/home/kit/scc/ab1234/ /home/ka/ka_scc/ka_ab1234/

- Give verbose hints (quota not OK)

migrate_data_uc2_uc3.sh -v

Doing the actions for the home directoy.

Checking if capacity and inode usage on the old home file system is lower than the limits on the new file system.

❌ Exiting because old capacity usage (563281380) is higher than new capacity limit (524288000).

Please remove data of your old home directory (/pfs/data5/home/kit/scc/ab1234).

You can also use the force option if you believe that the new limit is sufficient.
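The comparison behind such a message can be sketched as follows. This is an illustration of the idea only, not the script's actual implementation; the function name quota_fits is hypothetical, and the values must be given in the same units as reported by the quota tools.

```shell
# Succeed only if both the capacity and the inode usage of the old file
# system fit within the limits of the new one.
# Usage: quota_fits <used_capacity> <capacity_limit> <used_inodes> <inode_limit>
quota_fits() {
    used_capacity=$1
    capacity_limit=$2
    used_inodes=$3
    inode_limit=$4
    [ "$used_capacity" -le "$capacity_limit" ] &&
        [ "$used_inodes" -le "$inode_limit" ]
}

# Capacity values from the message above; the inode usage of 120000 is made up,
# the inode limit is the 4.5 million files stated under Policy.
if quota_fits 563281380 524288000 120000 4500000; then
    echo "quota OK"
else
    echo "quota exceeded"
fi
```

With the capacity values from the example, the check fails because 563281380 is larger than 524288000.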

Migration of HOME

If the guided migration fails due to quota issues, users will need to reduce the number of inodes or the amount of data. A manual check of the resources in use helps here and is described below.

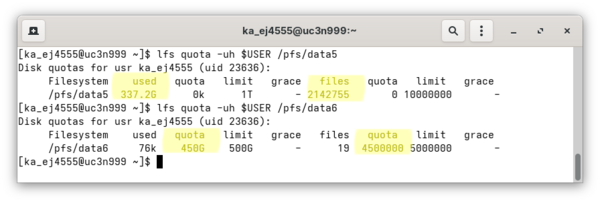

1. Check the quota of HOME

Show user quota of the old HOME:

$ lfs quota -uh $USER /pfs/data5

Show user quota of the new HOME:

$ lfs quota -uh $USER /pfs/data6

On the new file system, the limits for capacity and inodes must be higher than the capacity and the number of inodes used on the old file system in order to avoid I/O errors during data transfer. Pay attention to the respective used, files, and quota columns of the outputs.

2. Cleanup

If the capacity limit or the maximum number of inodes is exceeded, now is the right time to clean up.

Either delete data in the source directory before running the rsync command or pass additional --exclude statements to rsync.

Hint:

If the inode limit is exceeded, you should, for example, delete all existing Python virtual environments, which often contain a massive number of small files and which are not functional on the new system anyway.
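To find out where the inodes are going, the following helpers may be useful. They are a sketch under stated assumptions: the function names are hypothetical, and find_venvs only detects environments that contain a pyvenv.cfg file (as created by python -m venv), not conda environments.

```shell
# List the root directories of Python venvs below a directory.
# A venv created with "python -m venv" contains a pyvenv.cfg file.
find_venvs() {
    find "${1:-$HOME}" -type f -name pyvenv.cfg -exec dirname {} \;
}

# Count files and directories (inodes) at and below a directory.
# File names containing newlines would be counted more than once.
count_inodes() {
    find "$1" | wc -l | tr -d ' '
}
```

Running count_inodes on each directory reported by find_venvs shows how many inodes would be freed by deleting that environment.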

3. Migrate the data

The easiest way to get a suitable rsync command that fits your needs is to take the output of migrate_data_uc2_uc3.sh and, if necessary, add further --exclude statements.
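Adding exclude patterns to the recommended command could be wrapped as in the sketch below. The rsync options are copied from the example output of the script; the function name and the RSYNC override (handy for previewing the command with RSYNC=echo) are assumptions made for illustration.

```shell
# Run the recommended rsync command with additional --exclude patterns.
# Usage: rsync_with_excludes <source/> <target/> [pattern...]
# Set RSYNC=echo to print the arguments instead of copying anything.
rsync_with_excludes() {
    src=$1
    dst=$2
    shift 2
    # Turn every remaining argument into one --exclude=<pattern> option.
    for pattern in "$@"; do
        set -- "$@" "--exclude=$pattern"
        shift
    done
    "${RSYNC:-rsync}" -x --numeric-ids -S -rlptoD -H -A --exclude='/.*' "$@" "$src" "$dst"
}
```

For instance, RSYNC=echo rsync_with_excludes followed by the old and new HOME paths and the pattern 'venv/' prints the full argument list for inspection before any data is moved.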

Migration of Workspaces

Show user quota of the old workspaces:

$ lfs quota -uh $USER /pfs/work7

Show user quota of the new workspaces:

$ lfs quota -uh $USER /pfs/work9

If the quota checks are successful, then an appropriate workspace needs to be created and the data transfer can be initiated:

1. Create a new workspace with the same name as the old one, e.g. ws_allocate demospace 10

2. Transfer the data using the recommended command:

migrate_data_uc2_uc3.sh --workspace demospace -v

Doing the actions for workspace "demospace".

Found old workspace path (/pfs/work7/workspace/scratch/ej4555-demospace/).

Found new workspace path (/pfs/work9/workspace/scratch/ka_ej4555-demospace/).

Recommended command line for the rsync command line:

rsync -x --numeric-ids -S -rlptoD -H -A --stats /pfs/work7/workspace/scratch/ej4555-demospace/ /pfs/work9/workspace/scratch/ka_ej4555-demospace/

Migration of Software and Settings

The data migration helper script described above explicitly and intentionally excludes all dot files and dot directories (.bashrc, .config/, ...). Users should NOT migrate their old dot files and dot directories, which may contain settings that are incompatible with the new system. We recommend that you start with a new set of default configuration files and adapt them to your needs as required.

Settings and Configurations

The change to the new system will probably be most noticeable if the behavior of the Bash shell has been customized.

Please consult your old .bashrc file and copy aliases, bash functions or other settings you have defined there to the new .bashrc file.

Do not simply copy the old .bashrc file. Avoid moving settings that were made by conda in .bashrc (cf. Conda Installation).
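When reviewing the old .bashrc, the two helpers below may save some time. They are a sketch with hypothetical function names; strip_conda_block relies on the marker comments that conda init writes around its managed block.

```shell
# Print a .bashrc without the block managed by "conda init".
# conda init encloses its settings between these two marker comments.
strip_conda_block() {
    sed '/^# >>> conda initialize >>>/,/^# <<< conda initialize <<</d' "$1"
}

# Show only the alias definitions of a .bashrc.
list_aliases() {
    grep -E '^[[:space:]]*alias[[:space:]]' "$1"
}
```

Both functions only print to the terminal, so the output can be inspected and the relevant lines copied by hand into the new .bashrc.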

Python environments

Virtual Python environments such as venvs or conda environments should NOT be migrated to the new system. For one thing, it is very likely that the virtual environment will not be functional on the new system. Also, these environments usually contain a large number of small files for which any data movement on the parallel file system will provide only mediocre performance.

Fortunately, reinstalling or setting up Python environments is relatively easy if, for example, a requirements.txt file is used consistently. Please refer to the Best Practice guidelines for the handling and usage of Python.
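A possible workflow, sketched under assumptions: on the old system, python -m pip freeze > requirements.txt inside the activated environment records the installed packages; on the new system, a fresh environment is created from that file. The function names and the DRY_RUN switch below are hypothetical, and pinned versions may need adjusting if the Python version differs.

```shell
# Run a command, or only print it when DRY_RUN is set (preview mode).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create a fresh venv and install the packages listed in a requirements file.
# Usage: rebuild_venv <env_dir> <requirements_file>
rebuild_venv() {
    env_dir=$1
    requirements=$2
    run python3 -m venv "$env_dir" &&
        run "$env_dir/bin/python" -m pip install -r "$requirements"
}
```

With DRY_RUN=1 set, rebuild_venv only prints the two commands, which makes it easy to check the paths before anything is installed.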

User software

Application software that you have not compiled yourself must be reinstalled; the relevant installation file may be required for this.

Self-compiled software must be recompiled from the sources and installed in the HOME directory.

Containers

Containers such as Apptainer or Enroot can either be exported to an image or freshly downloaded and set up (cf. Exporting and transfering containers).